Best AI Audio & Voice Tools: Text-to-Speech, Cloning, and Music

This comprehensive guide explores the best AI audio and voice tools available in 2024, covering text-to-speech synthesis, voice cloning technology, and AI music generation. We break down how these tools work in simple terms, compare leading platforms based on features, quality, and pricing, and provide practical guidance for beginners and businesses. You'll learn about the ethical considerations of voice cloning, how to integrate AI audio into your workflows, and what to look for when choosing tools for different applications like podcasting, video production, or content creation. Whether you're a creator looking to enhance your audio content or a business exploring synthetic voice solutions, this guide provides clear, balanced information to help you navigate the evolving landscape of AI audio technology.


The world of audio production is undergoing a remarkable transformation thanks to artificial intelligence. What was once the exclusive domain of professional studios and voice actors is now accessible to anyone with a computer and an internet connection. AI audio tools can convert text to natural-sounding speech, clone voices with astonishing accuracy, and even compose original music—all with just a few clicks. This technology isn't just about convenience; it's opening up creative possibilities that were previously unimaginable or prohibitively expensive for most people and businesses.

According to industry analysis, the global synthetic voice market is expected to grow significantly as more businesses discover applications for AI-generated audio in content creation, customer service, entertainment, and accessibility (TechRadar, 2024). However, this rapid advancement also brings important questions about ethics, quality, and appropriate use. How good are these tools really? What are their limitations? And perhaps most importantly, how can you use them responsibly and effectively?

In this comprehensive guide, we'll explore the best AI audio and voice tools available in 2024, breaking down complex technology into simple concepts anyone can understand. We'll compare different platforms, explain how they work, discuss practical applications, and address the ethical considerations that come with synthetic media. Whether you're a podcaster, video creator, business owner, educator, or simply curious about this technology, you'll find clear, practical information to help you navigate the world of AI audio.

Understanding AI Audio Technology: How It Works

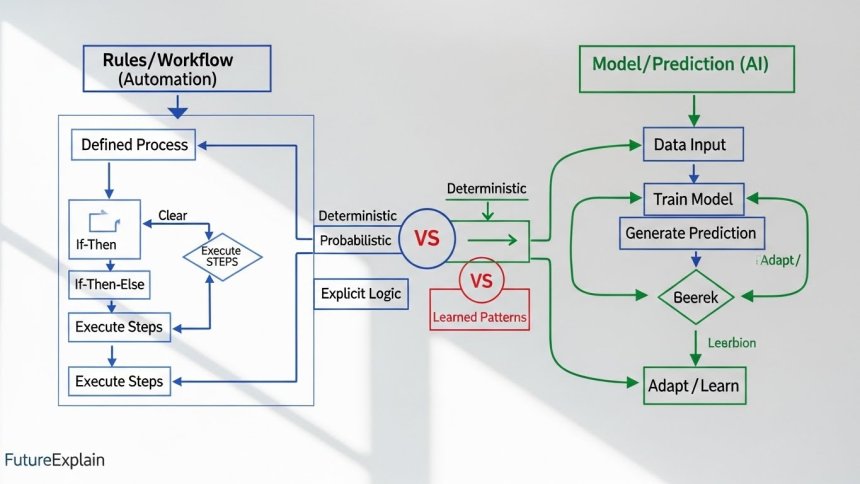

Before diving into specific tools, it helps to understand the basic technology behind AI audio. At their core, these systems use machine learning models trained on massive datasets of human speech or music. By learning statistical patterns in this training data, they can generate new audio that mimics human qualities.

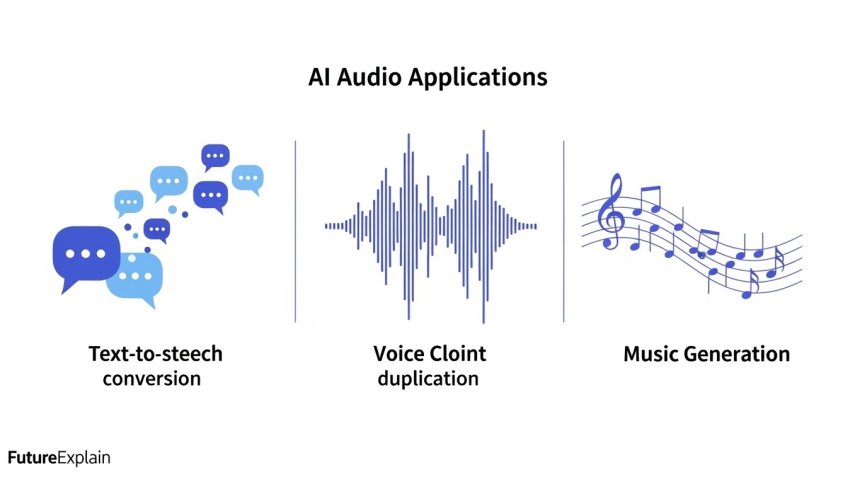

Three main approaches dominate current AI audio technology:

- Text-to-Speech (TTS): Converts written text into spoken audio using synthetic voices

- Voice Cloning: Creates a digital replica of a specific person's voice

- Music Generation: Composes original music based on parameters or examples

Each of these applications uses different technical approaches, but they all share a common foundation in deep learning. Modern TTS systems, for example, often use a two-stage process. First, they convert text (usually by way of a phonetic representation) into an acoustic representation such as a mel spectrogram. Second, a neural network called a vocoder turns that representation into an audio waveform. The result is synthetic speech that sounds increasingly natural, with appropriate intonation, pacing, and even emotional expression.
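The toy Python sketch below makes the two-step pipeline concrete. It rests on two loud assumptions. First, a tiny hand-written lexicon (the hypothetical `TOY_LEXICON`) stands in for a real grapheme-to-phoneme model. Second, plain sine tones stand in for a neural vocoder. It only shows the *shape* of the pipeline and says nothing about how any specific product works.

```python
import math

# Hypothetical mini lexicon standing in for a real grapheme-to-phoneme (G2P) model.
TOY_LEXICON = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
}

# Hypothetical pitch per phoneme in Hz; a real neural vocoder models far richer detail.
TOY_PITCH_HZ = {"HH": 180.0, "AH": 220.0, "L": 200.0, "OW": 240.0,
                "W": 190.0, "ER": 210.0, "D": 170.0}


def text_to_phonemes(text: str) -> list:
    """Stage 1: normalize the text and look each word up (unknown words are skipped)."""
    phonemes = []
    for word in text.lower().split():
        phonemes.extend(TOY_LEXICON.get(word.strip(".,!?"), []))
    return phonemes


def phonemes_to_waveform(phonemes: list, sample_rate: int = 16000,
                         seconds_per_phoneme: float = 0.1) -> list:
    """Stage 2: render each phoneme as a short sine tone (a stand-in for a vocoder)."""
    n = int(sample_rate * seconds_per_phoneme)
    samples = []
    for ph in phonemes:
        freq = TOY_PITCH_HZ[ph]
        samples.extend(0.5 * math.sin(2 * math.pi * freq * i / sample_rate)
                       for i in range(n))
    return samples


phonemes = text_to_phonemes("Hello, world!")
audio = phonemes_to_waveform(phonemes)
print(phonemes)    # ['HH', 'AH', 'L', 'OW', 'W', 'ER', 'L', 'D']
print(len(audio))  # 12800 samples = 8 phonemes x 0.1 s at 16 kHz
```

Keeping the two stages as separate functions mirrors why real systems split them: the text-analysis front end and the waveform generator can be improved or swapped independently.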

Text-to-Speech (TTS) Tools: Bringing Words to Life

Text-to-speech technology has evolved dramatically from the robotic voices of early computer systems. Today's AI-powered TTS can produce speech that is often difficult to distinguish from a human recording, complete with natural pauses, emphasis, and emotional tone. Here are the leading platforms and what makes them stand out.

ElevenLabs: The Quality Leader

ElevenLabs has gained recognition for producing some of the most natural-sounding synthetic voices available. Their technology excels at capturing subtle vocal qualities like breathiness, vocal fry, and emotional nuance. The platform offers both pre-made voices and the ability to create custom voices with relatively small amounts of training data.

Key Features:

- Extremely natural-sounding speech with emotional control

- Support for 29 languages with proper accents

- Voice cloning with as little as one minute of sample audio

- API access for integration into applications

Best For: High-quality voiceovers, audiobook narration, and applications where voice naturalness is critical.

Murf.ai: The All-in-One Solution

Murf positions itself as a comprehensive voice solution with a focus on business and content creation applications. Beyond basic TTS, it offers voice editing tools, background music integration, and team collaboration features that make it suitable for workflow integration.

Key Features:

- Over 120 voices across 20+ languages

- Built-in audio editor with timeline-based editing

- Voice changer feature to modify existing recordings

- Team collaboration and sharing capabilities

Best For: Content creators, businesses producing regular audio/video content, and teams needing collaborative voiceover tools.

Amazon Polly, Google Text-to-Speech, and Microsoft Azure TTS: The Developer Choices

For developers building applications that require speech synthesis, cloud platforms such as Amazon Polly, Google Text-to-Speech, and Microsoft Azure TTS offer robust API-based solutions. They provide extensive language support and usage-based pricing, typically billed per character of input text.

Comparison:

- Amazon Polly: Offers neural TTS with particularly good multilingual support and lifelike voices. Well-integrated with other AWS services.

- Google Text-to-Speech: Features WaveNet technology for high-quality speech and excellent integration with Google's ecosystem.

- Microsoft Azure TTS: Strong competitor with good customization options and enterprise support.

Best For: Developers building applications with speech output, enterprises needing scalable solutions, and projects requiring extensive language support.
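Cloud TTS APIs cap how much text a single request may contain, so longer scripts are usually split before they are sent. The Python sketch below splits at sentence boundaries. The 3000-character `MAX_CHARS` default is an assumption to verify against your provider's current documentation, because limits differ between services and may be counted in characters or bytes.

```python
import re

# Assumed per-request character limit; check your provider's current documentation,
# since limits differ between services and may be counted in characters or bytes.
MAX_CHARS = 3000


def chunk_text(text: str, max_chars: int = MAX_CHARS) -> list:
    """Split text at sentence boundaries into chunks of at most max_chars characters."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks = []
    current = ""
    for sentence in sentences:
        # Hard-split any single sentence that is longer than the limit on its own.
        while len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        if not sentence:
            continue
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


script = "Welcome to the course. Today we cover audio basics! Ready? Let's begin."
for chunk in chunk_text(script, max_chars=40):
    print(repr(chunk))
```

Each chunk would then be sent as its own request, and the returned audio segments would be joined in order. With boto3's Amazon Polly client, for example, the chunk goes in the `Text` argument of `synthesize_speech`. Splitting at sentence boundaries, rather than at an arbitrary character count, avoids cutting a word or a phrase's intonation in half.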

Voice Cloning Technology: Capabilities and Considerations

Voice cloning represents one of the most advanced and controversial applications of AI audio technology. By training on samples of a specific person's voice, these systems can generate new speech that sounds like that person saying things they never actually said. The implications—both creative and ethical—are significant.

How Voice Cloning Works

Modern voice cloning systems typically use a two-stage process. First, they analyze sample audio to extract vocal characteristics like pitch, timbre, and speaking style. This creates a voice model or "voiceprint." Then, when given new text, they generate speech using this model, applying the learned characteristics to produce output that matches the original voice.
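A "voiceprint" is often represented as a fixed-length vector, commonly called a speaker embedding. Cosine similarity is a common way to compare two such vectors. The Python sketch below shows how one might check whether generated audio stays close to a reference voice. The 4-dimensional vectors and the `THRESHOLD` value are made up for illustration. Real embeddings come from a trained speaker-encoder model (not shown) and typically have hundreds of dimensions.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up "voiceprints"; real speaker embeddings come from a trained model.
reference = [0.9, 0.1, 0.3, 0.2]
cloned_output = [0.85, 0.15, 0.28, 0.25]
different_speaker = [0.1, 0.8, 0.1, 0.6]

THRESHOLD = 0.8  # hypothetical decision threshold; tuned per model in practice

for name, emb in [("cloned_output", cloned_output), ("different_speaker", different_speaker)]:
    score = cosine_similarity(reference, emb)
    print(name, round(score, 3), "match" if score >= THRESHOLD else "no match")
```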

The quality of cloned voices depends on several factors:

- Training data quantity and quality: More diverse, clean audio samples generally produce better results

- Speaker consistency: Samples in which the speaker keeps a steady tone, pace, and recording setup are easier to clone

- Technical approach: Different algorithms have different strengths and limitations
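Training-data quality can be partly checked before anything is uploaded. The Python sketch below is a hypothetical pre-flight check on decoded mono samples. It reports duration, peak level, the share of clipped samples, and average (RMS) level. Decoding the audio file is not shown. The `min_seconds=60` default loosely echoes the one-minute figure mentioned earlier for ElevenLabs, but it and `clip_level` are assumptions. Each platform publishes its own requirements.

```python
import math


def check_sample(samples: list, sample_rate: int,
                 min_seconds: float = 60.0, clip_level: float = 0.99) -> dict:
    """Report basic quality signals for a mono clip with float samples in [-1.0, 1.0]."""
    if not samples:
        raise ValueError("empty clip")
    duration = len(samples) / sample_rate
    clipped = sum(1 for s in samples if abs(s) >= clip_level)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return {
        "duration_s": duration,
        "long_enough": duration >= min_seconds,
        "peak": max(abs(s) for s in samples),
        "clipped_fraction": clipped / len(samples),
        # Average level in dB relative to full scale; very low values suggest a quiet take.
        "rms_dbfs": 20 * math.log10(rms) if rms > 0 else float("-inf"),
    }


# Two seconds of a 440 Hz test tone at half amplitude, standing in for decoded audio.
rate = 16000
tone = [0.5 * math.sin(2 * math.pi * 440 * i / rate) for i in range(2 * rate)]
report = check_sample(tone, rate)
print(report)
```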

Leading Voice Cloning Platforms

Descript Overdub

Descript's Overdub feature integrates voice cloning directly into their comprehensive audio/video editing platform. This makes it particularly useful for content creators who need to fix mistakes or add missing words without re-recording entire segments.

Key Aspects:

- Requires approximately 30 minutes of training audio for best results

- Integrated with Descript's editing workflow

- Includes consent verification and ethical guidelines

- Subscription-based pricing with different tiers

Resemble AI

Resemble AI focuses specifically on voice cloning and offers both web interface and API access. They emphasize real-time voice generation capabilities and offer features for creating entirely synthetic voices not based on real people.

Key Aspects:

- Real-time voice cloning capabilities

- Ability to create "AI-only" voices not based on real people

- Emphasis on developer tools and API integration

- Enterprise-focused with custom pricing

Ethical Considerations and Best Practices

Voice cloning technology raises important ethical questions that users should consider carefully:

- Consent: Always obtain explicit, informed consent before cloning someone's voice

- Transparency: Clearly disclose when synthetic voices are being used

- Misuse prevention: Implement safeguards against deceptive or harmful applications

- Legal compliance: Understand copyright, publicity rights, and regulatory requirements

Many reputable platforms have implemented consent verification processes and usage guidelines. As a user, it's important to follow both platform policies and broader ethical principles. Consider creating synthetic voices that aren't based on real people when appropriate—some tools offer this option.

AI Music Generation: Composing with Algorithms

AI music generation tools represent another frontier in creative AI applications. These systems can create original musical compositions, generate accompaniment tracks, or help with specific aspects of music production like mastering and sound design.

AIVA (Artificial Intelligence Virtual Artist)

AIVA specializes in generating original classical and cinematic music compositions. Users can select from various pre-trained styles or upload reference tracks to influence the generated music.

Key Features:

- Focus on classical and cinematic styles

- Ability to train on user-provided music

- Copyright ownership of generated compositions, depending on the subscription plan

- API access for integration

Best For: Film scorers, game developers, and creators needing original instrumental music.
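The internals of AIVA's reference-track feature aren't described here. A much simpler classic technique illustrates the general idea of "learning from examples, then generating something new": a first-order Markov chain. The Python sketch below records which note follows which in some reference melodies, then samples new sequences from those statistics. Modern systems use neural networks instead. The melodies are made up and ignore rhythm.

```python
import random
from collections import defaultdict


def train_transitions(melodies: list) -> dict:
    """Record which note follows which across the reference melodies."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            transitions[current].append(nxt)
    return dict(transitions)


def generate(transitions: dict, start: str, length: int, seed: int = 0) -> list:
    """Walk the chain; repeated entries make common transitions more likely."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # dead end: no note ever followed this one
            break
        melody.append(rng.choice(options))
    return melody


# Made-up reference melodies (note names only, no rhythm).
references = [
    ["C", "E", "G", "E", "C"],
    ["C", "D", "E", "G", "C"],
]
model = train_transitions(references)
print(generate(model, "C", 8, seed=42))
```

The seed makes output reproducible, which helps when comparing settings. Changing the reference melodies changes the style of what comes out, and that is the intuition behind "influencing" generation with examples.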

Amper Music (now part of Shutterstock)

Amper Music, now integrated into Shutterstock's platform, focuses on creating customizable music tracks for videos, podcasts, and other media. Their interface allows for detailed control over musical elements like mood, genre, and instrumentation.

Key Features:

- Intuitive interface for customizing generated music

- Integration with video editing workflows

- Commercial licensing included with subscriptions

- Extensive library of customizable templates

Best For: Video creators, podcasters, and marketers needing royalty-free background music.

Suno AI

Suno AI represents a newer approach that can generate both music and vocals from text descriptions. While still evolving, it showcases the potential for AI to handle complete song creation from lyrical concepts to finished audio.

Key Features:

- Generates complete songs with vocals from text prompts

- Experimental but rapidly improving quality

- Free tier available for experimentation

- Community-driven development and sharing

Best For: Experimentation, songwriting inspiration, and educational purposes.

Practical Applications: Where AI Audio Makes Sense

Understanding when and where to use AI audio tools is as important as knowing which tools are available. Here are practical applications where these technologies offer real value.

Content Creation and Media Production

For podcasters, YouTubers, and other content creators, AI audio tools can significantly streamline production workflows:

- Voiceovers: Generate narration for explainer videos, tutorials, or documentaries

- Correction and editing: Use voice cloning to fix mistakes without re-recording

- Localization: Create multilingual versions of content using different language voices

- Accessibility: Generate audio versions of written content for visually impaired audiences

Many creators use tools like Descript not just for its AI features but for its integrated editing environment that combines traditional and AI-powered workflows.

Business and Enterprise Applications

Businesses are finding numerous applications for AI audio technology:

- Customer service: Generate consistent voice responses for IVR systems

- Training and education: Create voice narration for employee training materials

- Marketing and advertising: Produce voiceovers for campaigns with consistent brand voice

- Accessibility compliance: Generate audio versions of written materials

For enterprise applications, factors like reliability, scalability, and support often outweigh pure voice quality considerations. Cloud platforms like Amazon Polly and Google TTS are popular choices for these use cases.

Creative Arts and Entertainment

In creative fields, AI audio tools serve as both production aids and creative partners:

- Music production: Generate backing tracks, experiment with arrangements, or overcome creative blocks

- Game development: Create character voices, ambient sounds, or dynamic music systems

- Audiobook production: Generate narration for books, particularly for indie authors or backlist titles

- Experimental art: Explore new forms of audio expression and human-AI collaboration

It's important to view these tools as collaborators rather than replacements—they work best when guided by human creativity and judgment.

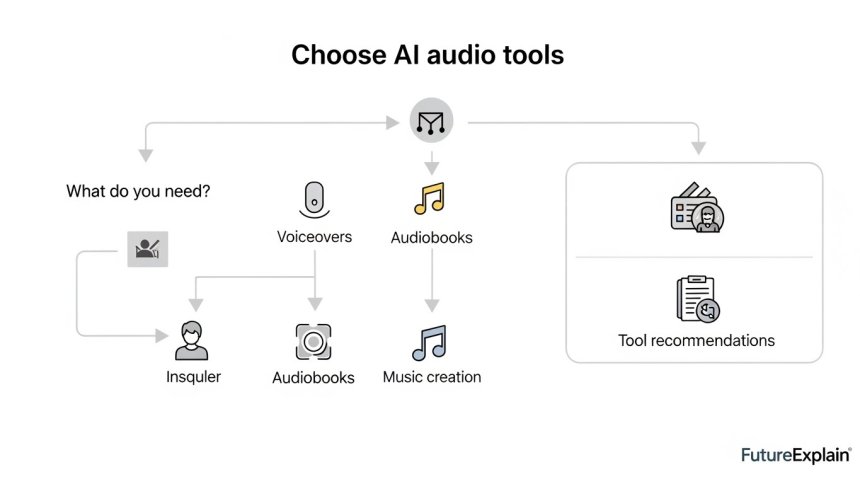

Choosing the Right Tools: A Decision Framework

With so many options available, choosing the right AI audio tools can be overwhelming. This decision framework can help you narrow down your options based on your specific needs.

Step 1: Define Your Primary Use Case

Start by being specific about what you need:

- Basic voiceovers for occasional videos or presentations

- Professional content creation with regular audio production needs

- Application integration requiring API access and scalability

- Voice cloning for specific projects or individuals

- Music composition for background tracks or creative projects

Different tools excel in different areas, so clarity here will immediately eliminate many options.

Step 2: Consider Your Technical Requirements

Evaluate what you need from a technical perspective:

- Quality level: Does it need to be studio-quality or is "good enough" acceptable?

- Language support: How many languages do you need, and are specific accents important?

- Integration: Do you need API access, plugins for specific software, or standalone applications?

- Customization: How much control do you need over voice parameters and output?

Step 3: Evaluate Practical Constraints

Practical considerations often determine what's actually feasible:

- Budget: Free tools vs. subscription models vs. pay-per-use pricing

- Learning curve: How much time can you invest in learning the tool?

- Workflow fit: How well does it integrate with your existing tools and processes?

- Team requirements: Do you need collaboration features or user management?

Step 4: Test Before Committing

Most reputable tools offer free trials or generous free tiers. Take advantage of these to:

- Test quality with your specific content

- Evaluate ease of use and learning curve

- Check integration with your workflow

- Assess output formats and compatibility

Pay particular attention to how the tool handles edge cases specific to your needs, like technical terminology, unusual names, or specific emotional tones.
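To turn the four steps into a decision, you can use a simple weighted scoring matrix. Weight each criterion by how much it matters to you (Steps 1 to 3), rate each tool during its trial (Step 4), and rank the results. The Python sketch below uses hypothetical tool names, weights, and ratings. None of them are real product scores.

```python
def rank_tools(weights: dict, scores: dict) -> list:
    """Return (tool, weighted average score) pairs sorted from best to worst.

    weights: criterion -> relative importance; scores: tool -> {criterion: 1-5 rating}.
    Missing ratings count as 0.
    """
    total_weight = sum(weights.values())
    ranked = []
    for tool, ratings in scores.items():
        weighted = sum(w * ratings.get(criterion, 0) for criterion, w in weights.items())
        ranked.append((tool, round(weighted / total_weight, 2)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical relative weights reflecting one team's priorities.
weights = {"quality": 4, "languages": 2, "api_access": 2, "cost": 2}

# Hypothetical 1-5 ratings recorded during free trials.
scores = {
    "Tool A": {"quality": 5, "languages": 4, "api_access": 4, "cost": 2},
    "Tool B": {"quality": 3, "languages": 5, "api_access": 5, "cost": 3},
    "Tool C": {"quality": 4, "languages": 3, "api_access": 2, "cost": 5},
}
for tool, score in rank_tools(weights, scores):
    print(f"{tool}: {score}")
```

Writing the weights down before testing keeps the decision honest: it is harder to rationalize a favorite after the fact.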

Quality Assessment: What Makes AI Audio Sound "Good"?

Evaluating AI audio quality involves more than just casual listening. Here are specific factors to consider when assessing different tools.

Naturalness and Expressiveness

The best AI voices sound truly human, not just technically accurate. Look for:

- Appropriate prosody: Natural rhythm, stress, and intonation patterns

- Emotional range: Ability to convey different emotions appropriately

- Consistent character: The voice maintains consistent qualities throughout

- Breath and mouth sounds: Natural-sounding breaths and vocal nuances

Listen carefully for the "uncanny valley" effect—voices that are almost human but have subtle flaws that make them sound artificial.
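Naturalness is commonly quantified with a mean opinion score (MOS). Several listeners rate each clip on the 1 (bad) to 5 (excellent) scale used in ITU-T P.800 listening tests, and the ratings are averaged. The Python sketch below computes a MOS with an approximate 95% confidence interval. The ratings are made up. The 1.96 multiplier is a normal-approximation simplification.

```python
import math
import statistics


def mean_opinion_score(ratings: list) -> tuple:
    """Mean of 1-5 listener ratings and an approximate 95% confidence half-width."""
    mean = statistics.mean(ratings)
    if len(ratings) < 2:
        return mean, float("inf")
    # Normal approximation (1.96); with small listener panels a t-value is more accurate.
    half_width = 1.96 * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mean, half_width


# Made-up ratings from eight listeners for two candidate voices reading the same script.
ratings = {
    "Voice A": [4, 5, 4, 4, 3, 5, 4, 4],
    "Voice B": [3, 3, 4, 2, 3, 3, 4, 3],
}
for voice, scores in ratings.items():
    mos, ci = mean_opinion_score(scores)
    print(f"{voice}: MOS {mos:.2f} ± {ci:.2f}")
```

If two voices' intervals overlap heavily, the listening test has not clearly separated them, and more listeners or clips are needed.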

Intelligibility and Accuracy

Even the most natural-sounding voice isn't useful if it's hard to understand or makes frequent errors:

- Pronunciation accuracy: Correct handling of unusual words, names, and technical terms

- Context awareness: Proper handling of homographs (words spelled the same but pronounced differently depending on context, such as "lead" in "lead the team" versus "a lead pipe")

- Punctuation interpretation: Appropriate pauses and emphasis based on punctuation

- Accent consistency: Maintaining consistent accent features throughout
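When a voice keeps mispronouncing a term, most cloud TTS services (including Amazon Polly, Google Text-to-Speech, and Azure) accept SSML, a W3C markup standard. Its `<sub>` element tells the engine to speak an alias in place of the written text. The Python sketch below escapes the text for XML and wraps known terms. The alias table is hypothetical. Which SSML tags are honored varies by provider and voice, so check before relying on them.

```python
from xml.sax.saxutils import escape, quoteattr

# Hypothetical pronunciation overrides for terms a voice tends to misread.
ALIASES = {
    "SQL": "sequel",
    "kubectl": "cube control",
}


def to_ssml(text: str, aliases: dict) -> str:
    """Escape text for XML and wrap known terms in SSML <sub> elements."""
    words = []
    for token in text.split():
        core = token.strip(".,;:!?")  # ignore trailing/leading punctuation when matching
        if core and core in aliases:
            tagged = f"<sub alias={quoteattr(aliases[core])}>{escape(core)}</sub>"
            words.append(escape(token).replace(escape(core), tagged, 1))
        else:
            words.append(escape(token))
    return "<speak>" + " ".join(words) + "</speak>"


print(to_ssml("Run the SQL query with kubectl.", ALIASES))
# <speak>Run the <sub alias="sequel">SQL</sub> query with <sub alias="cube control">kubectl</sub>.</speak>
```

With Amazon Polly, for instance, a string like this is sent with `TextType="ssml"` so the markup is interpreted rather than read aloud.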

Technical Quality

The underlying audio quality matters too:

- Audio fidelity: Clean, high-quality audio without artifacts or noise

- Consistency: Stable volume and tone without unexpected variations

- Format options: Availability of different output formats and quality settings

- Processing speed: Reasonable generation times for your needs
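Inconsistent volume between clips, for example across separate TTS requests, can be evened out with level normalization. The Python sketch below applies a single gain so that each clip reaches the same RMS level. RMS is only a rough proxy for perceived loudness. Broadcast and streaming workflows usually measure loudness in LUFS per ITU-R BS.1770, which this sketch does not implement. The -20 dBFS target is an arbitrary assumption.

```python
import math


def rms_dbfs(samples: list) -> float:
    """RMS level in dB relative to full scale, for float samples in [-1.0, 1.0]."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def normalize_rms(samples: list, target_dbfs: float = -20.0) -> list:
    """Apply one gain so the clip's RMS hits target_dbfs, then clamp to avoid clipping."""
    level = rms_dbfs(samples)
    if level == float("-inf"):
        return list(samples)  # silence: nothing to scale
    gain = 10 ** ((target_dbfs - level) / 20)
    # Clamping protects against overflow but lowers the RMS slightly if any peaks hit it.
    return [max(-1.0, min(1.0, s * gain)) for s in samples]


# Two made-up clips at different levels, e.g. from two TTS requests.
quiet = [0.05, -0.05] * 100
loud = [0.4, -0.4] * 100
for clip in (quiet, loud):
    print(round(rms_dbfs(clip), 1), "->", round(rms_dbfs(normalize_rms(clip)), 1))
```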

Remember that quality requirements vary by application. A voice for a corporate training video has different needs than one for a creative storytelling podcast.

Ethical and Legal Considerations

As with any powerful technology, AI audio tools come with important ethical and legal responsibilities. Being informed about these considerations is essential for responsible use.

Consent and Authorization

Voice cloning raises particular concerns about consent:

- Explicit consent: Always obtain clear, informed consent before cloning someone's voice

- Scope of use: Be specific about how the cloned voice will be used

- Revocability: Respect the right to withdraw consent

- Deceased individuals: Special considerations apply to cloning voices of people who have died

Some platforms have built-in consent verification processes, but ultimate responsibility rests with the user.
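Teams that clone voices regularly may want their tooling to enforce scope, expiry, and revocation rather than rely on memory. The Python sketch below models that bookkeeping with a hypothetical `ConsentRecord`. The field names and use labels are illustrative. Obtaining and documenting the consent itself still happens outside the code.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Set


@dataclass
class ConsentRecord:
    """Hypothetical bookkeeping for one speaker's voice-cloning consent."""
    speaker: str
    permitted_uses: Set[str]          # scope of use agreed with the speaker
    expires: Optional[date] = None    # None means no agreed end date
    revoked: bool = False             # set when the speaker withdraws consent


def is_use_permitted(record: ConsentRecord, use: str, on: date) -> bool:
    """Allow a use only if consent is unrevoked, unexpired, and covers that use."""
    if record.revoked:
        return False
    if record.expires is not None and on > record.expires:
        return False
    return use in record.permitted_uses


consent = ConsentRecord("Narrator A", {"audiobook_corrections"}, expires=date(2025, 12, 31))
print(is_use_permitted(consent, "audiobook_corrections", date(2025, 6, 1)))  # True
print(is_use_permitted(consent, "advertising", date(2025, 6, 1)))            # False
consent.revoked = True
print(is_use_permitted(consent, "audiobook_corrections", date(2025, 6, 1)))  # False
```

Checking permission at generation time, rather than once at signup, means a withdrawal takes effect on the very next request.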

Transparency and Disclosure

Audiences have a right to know when they're listening to synthetic media:

- Clear labeling: Disclose when voices are AI-generated

- Context-appropriate disclosure: More prominent disclosure may be needed in sensitive contexts

- Avoiding deception: Never use synthetic voices to deceive, and do not impersonate real people outside a clearly artistic or satirical context

- Educational responsibility: Help audiences understand synthetic media and its implications
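Alongside an audible or visible disclosure, a machine-readable label can travel with each file. The Python sketch below writes a small JSON record marking a file as AI-generated. The record includes a SHA-256 fingerprint so anyone can later confirm the label belongs to that exact audio. The field names and the tool name are hypothetical. This is not C2PA or any other provenance standard.

```python
import hashlib
import json
from datetime import datetime, timezone


def disclosure_manifest(audio_bytes: bytes, tool: str, voice: str) -> str:
    """Build a JSON sidecar that labels a file as AI-generated and fingerprints it."""
    record = {
        "ai_generated": True,
        "tool": tool,            # hypothetical field names, not a standard schema
        "voice": voice,
        "sha256": hashlib.sha256(audio_bytes).hexdigest(),
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(record, indent=2)


manifest = disclosure_manifest(b"placeholder audio bytes", tool="ExampleTTS", voice="narrator-1")
print(manifest)
```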

Copyright and Intellectual Property

Intellectual property considerations are complex and evolving:

- Training data rights: Many tools are trained on datasets with complex copyright status

- Output ownership: Understand who owns the rights to generated audio

- Commercial use: Verify that your intended use is permitted under the tool's terms

- Music copyright: AI-generated music involves additional copyright complexities

When in doubt, consult legal advice specific to your jurisdiction and use case.

Future Trends and Developments

The field of AI audio is evolving rapidly. Understanding emerging trends can help you make informed decisions about tool adoption and future-proof your workflows.

Increasing Realism and Customization

Future developments will likely focus on:

- Emotional intelligence: More nuanced emotional expression in synthetic voices

- Personalization: Tools that adapt to individual speaking styles and preferences

- Real-time generation: Faster processing enabling live applications

- Multimodal integration: Combining voice with facial animation and other media

Regulatory and Standards Development

As synthetic media becomes more prevalent, expect:

- Increased regulation: Government oversight of synthetic media applications

- Industry standards: Development of technical and ethical standards

- Detection technology: Better tools for identifying synthetic media

- Watermarking and provenance: Technical solutions for tracking media origins

Democratization and Accessibility

AI audio technology will likely become:

- More accessible: Lower costs and simpler interfaces

- More integrated: Built into common software and platforms

- More specialized: Tools tailored for specific industries and use cases

- More collaborative: Better support for human-AI creative partnerships

Getting Started: Practical First Steps

If you're new to AI audio tools, here's a practical approach to getting started without becoming overwhelmed:

Start with Free Trials

Most major platforms offer free tiers or trials. Create accounts with several that seem promising for your needs and:

- Generate samples with identical text to compare quality

- Test with your actual content, not just demo text

- Evaluate interface ease and learning curve

- Check output formats and compatibility with your tools
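Comparing samples of identical text works best when listeners don't know which tool produced which clip. Otherwise, brand expectations can color the ratings. The Python sketch below assigns neutral labels in random order and keeps a separate answer key. The file names and tool names are hypothetical.

```python
import random
from typing import Optional


def blind_labels(files: dict, seed: Optional[int] = None) -> tuple:
    """Map neutral labels to files so listeners can't tell which tool made which clip.

    Returns (playlist, answer_key): playlist maps label -> file for listeners,
    answer_key maps label -> tool and stays hidden until all ratings are in.
    """
    tools = list(files)
    random.Random(seed).shuffle(tools)
    playlist, answer_key = {}, {}
    for i, tool in enumerate(tools, start=1):
        label = f"Sample {i}"
        playlist[label] = files[tool]
        answer_key[label] = tool
    return playlist, answer_key


# Hypothetical clips rendered from the same script with three tools.
clips = {"Tool A": "a_script1.wav", "Tool B": "b_script1.wav", "Tool C": "c_script1.wav"}
playlist, key = blind_labels(clips, seed=7)
for label, path in playlist.items():
    print(label, path)
```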

Begin with Low-Stakes Projects

Apply AI audio to projects where perfection isn't critical:

- Internal training materials or documentation

- Personal projects or experiments

- Content where the message matters more than production quality

- Projects with tight deadlines or budgets where AI offers clear efficiency advantages

Develop Ethical Guidelines

Before scaling up usage, establish clear guidelines:

- Document your consent and disclosure practices

- Set quality standards for different types of content

- Establish review processes for AI-generated content

- Stay informed about legal and regulatory developments

Conclusion: The Future of Audio is Augmented, Not Automated

AI audio tools represent a powerful new category of creative technology, but they're at their best when viewed as augmentations of human capability rather than replacements. The most effective applications combine AI efficiency with human judgment, creativity, and ethical consideration.

As these tools continue to evolve, staying informed about both technical capabilities and ethical implications will be increasingly important. By approaching AI audio with curiosity, responsibility, and clear purpose, you can harness its potential while navigating its challenges thoughtfully.

Remember that technology should serve human goals, not the other way around. Whether you're using AI audio to enhance accessibility, streamline production, explore creative possibilities, or solve business challenges, keeping human values and needs at the center will lead to the most meaningful and sustainable applications.


After reading this article, we're implementing an AI audio ethics policy company-wide. The guidelines here gave us a great starting point.

We produce content in endangered languages. AI tools trained on limited audio samples are helping preserve and teach these languages. The ethical implications are positive here.

Colton, that's a beautiful application. For language preservation, collaboration with linguistic communities is essential. Ensure the communities benefit from and control how their language is used. This is an area where technology can support cultural preservation when used respectfully and collaboratively.

The decision framework helped us choose between building in-house vs using APIs. We went with APIs for now but may build custom models later.

We use voice cloning for continuity when voice actors become unavailable (illness, scheduling). With their permission, of course. It's saved several projects.

What about audio post-processing? The raw AI audio often needs compression, normalization, and noise reduction to match our studio quality.

Cole, absolutely. AI-generated audio should go through the same post-processing as recorded audio: compression, EQ, normalization, and sometimes reverb or other effects to match your sound profile. Some tools offer built-in processing, but for professional results, use dedicated audio editing software or plugins.

We're in sports broadcasting and use AI voices for highlight reels when human commentators aren't available. The consistency is good, but we miss the spontaneity.