Comparative Guide to Text-to-Speech APIs

This comprehensive guide compares the top text-to-speech APIs available in 2024, analyzing Google Cloud Text-to-Speech, Amazon Polly, Microsoft Azure TTS, ElevenLabs, Play.ht, and IBM Watson. We break down pricing models, voice quality, language support, emotional range, and real-world performance metrics. Beyond technical comparisons, we provide practical implementation guidance for businesses, including accessibility compliance, cost optimization strategies, and ethical considerations for voice cloning. Whether you're building accessibility features, creating voiceovers, or implementing conversational AI, this guide helps you choose the right TTS API for your specific needs and budget.

Comparative Guide to Text-to-Speech APIs: Choosing the Right Voice for Your Business in 2024

Text-to-speech (TTS) technology has evolved dramatically from robotic, monotone voices to emotionally expressive, human-like speech that can convey nuance, emotion, and personality. For businesses, developers, and content creators, choosing the right TTS API can transform how you engage with customers, improve accessibility, and automate voice content creation. This comprehensive guide compares the leading text-to-speech APIs available in 2024, providing detailed analysis of their strengths, weaknesses, pricing, and ideal use cases.

Whether you're building an accessibility feature for your website, creating audiobooks, developing voice assistants, or generating voiceovers for videos, understanding the TTS API landscape is crucial. The market has expanded beyond basic speech synthesis to include emotional tone control, voice cloning, multilingual support, and real-time streaming capabilities. Making the wrong choice can lead to poor user experiences, unexpected costs, or technical limitations that hinder your project's growth.

In this guide, we'll analyze six major TTS API providers: Google Cloud Text-to-Speech, Amazon Polly, Microsoft Azure Cognitive Services TTS, ElevenLabs, Play.ht, and IBM Watson Text to Speech. We'll compare them across multiple dimensions including voice quality, emotional range, language support, pricing models, API reliability, and ease of integration. We'll also provide practical implementation advice, cost optimization strategies, and considerations for specific business use cases.

Understanding Modern Text-to-Speech Technology

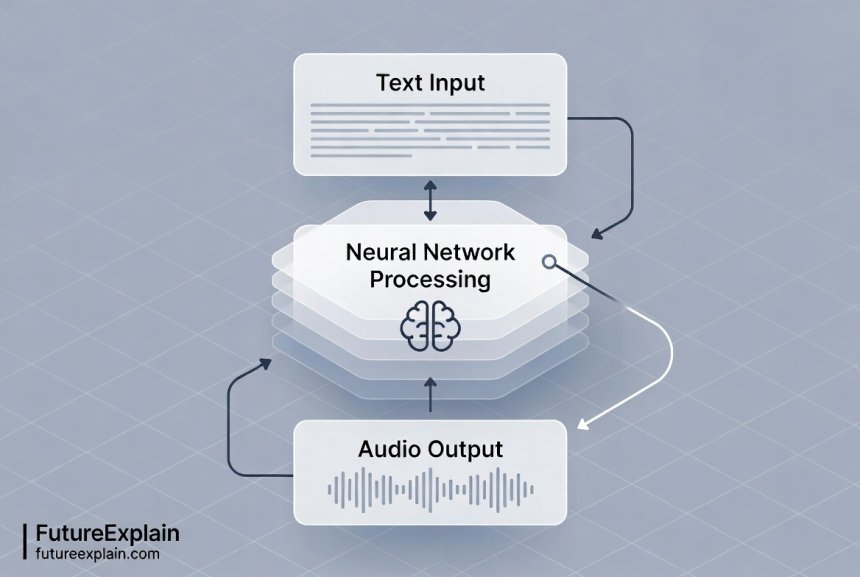

Before diving into specific API comparisons, it's essential to understand how modern TTS technology works. Early text-to-speech systems used concatenative synthesis, stitching together pre-recorded speech segments. Today's advanced systems use neural network-based approaches that generate speech from scratch, resulting in more natural, fluid output.

Neural TTS models, particularly those using WaveNet and Tacotron architectures, analyze text input and generate corresponding speech waveforms through deep learning. These systems can learn speech patterns, intonation, and emotional cues from vast datasets of human speech. The result is synthetic speech that's increasingly difficult to distinguish from human voices, with natural pauses, breathing sounds, and emotional inflection.

Modern TTS APIs typically offer several voice types: standard neural voices (good quality, lower cost), WaveNet/neural voices (premium quality), and custom voices (trained on specific speech data). Some providers also offer voice cloning capabilities, allowing you to create synthetic versions of specific human voices—though this raises important ethical considerations we'll address later.

Key Evaluation Criteria for TTS APIs

When comparing text-to-speech APIs, consider these critical factors that impact both technical implementation and business outcomes:

Voice Quality and Naturalness

The most immediately noticeable difference between TTS providers is voice quality. Naturalness measures how human-like the speech sounds, considering factors like intonation, rhythm, and pronunciation accuracy. Premium neural voices from leading providers now achieve remarkably natural speech, though differences remain in emotional expression and handling of complex text.

Language and Voice Variety

Consider the languages and dialects you need. Major providers support 50-300+ voices across 30-140+ languages and variants. However, quality varies significantly between languages—English typically has the best quality and most voice options, while less common languages may have limited voices or synthetic accents.

Emotional Range and Expressiveness

Advanced TTS APIs can convey emotions like happiness, sadness, excitement, or calm through SSML (Speech Synthesis Markup Language) tags or emotional parameters. This is crucial for applications where emotional tone affects user experience, such as storytelling, customer service, or educational content.

Pricing Models and Cost Predictability

TTS API pricing varies dramatically: pay-per-character, pay-per-second, tiered subscriptions, or enterprise contracts. Consider both current costs and how they scale with usage. Some providers charge extra for neural voices, specific languages, or additional features like voice cloning.

API Performance and Reliability

Evaluate latency (time to generate speech), uptime guarantees (SLA), rate limits, and regional availability. Real-time applications like voice assistants require low latency (<500ms), while batch processing for audiobooks prioritizes cost efficiency over speed.

Customization and Control

SSML support allows fine-tuning of pronunciation, speed, pitch, and emphasis. Some APIs offer advanced controls for breath sounds, speaking styles, or audio effects. Voice cloning and custom voice training provide brand-specific voice options but at higher cost and complexity.

Compliance and Security

For business applications, consider data privacy, compliance certifications (GDPR, HIPAA, SOC 2), and data processing locations. Some providers offer data residency options or on-premise deployment for sensitive applications.

Google Cloud Text-to-Speech: Comprehensive and Developer-Friendly

Google Cloud Text-to-Speech offers one of the most mature and widely adopted TTS APIs, with strong integration across Google's ecosystem. It provides over 380 voices across 50+ languages, including both standard and WaveNet voices (premium neural voices).

Strengths and Key Features

Google's TTS excels in naturalness, particularly with its WaveNet voices which use deep neural networks to generate human-like speech. The API supports real-time streaming, batch synthesis, and offers voice customization through SSML. A unique feature is the ability to create custom voices using Vertex AI, though this requires substantial training data and enterprise engagement.

The API integrates seamlessly with other Google Cloud services like Dialogflow for conversational AI and Cloud Storage for audio file management. Google consistently updates its voice portfolio and recently introduced voices with emotional tones and improved multilingual capabilities.

Pricing Structure

Google uses a pay-per-character model: Standard voices cost $4.00 per 1 million characters, while WaveNet voices cost $16.00 per 1 million characters. There's a free tier of 1 million characters per month for Standard voices and 4 million characters per month for WaveNet voices, making it accessible for testing and small projects.

Best Use Cases

- Applications already using Google Cloud services

- Projects requiring strong multilingual support

- Real-time voice applications needing low latency

- Educational content with clear, neutral narration

Limitations

While Google offers excellent English voices, some less common languages have noticeably lower quality. The custom voice creation process is complex and expensive compared to competitors. Some users find the emotional range more limited than specialized providers like ElevenLabs.

Amazon Polly: Cost-Effective with Strong AWS Integration

Amazon Polly provides text-to-speech as part of AWS, offering seamless integration for applications already built on Amazon's cloud platform. It features 100+ voices across 30+ languages, with both standard and neural voices available.

Strengths and Key Features

Polly's standout feature is its "Newscaster" and "Conversational" speaking styles, which provide natural pacing and intonation for different content types. The API supports SSML for pronunciation control and offers a "Lexicon" feature for custom vocabulary handling. Polly also provides real-time streaming and long-form synthesis for audiobooks or podcasts.

For enterprise users, Polly offers Amazon Polly Voice Focus, which reduces background noise in generated speech—particularly useful for call center applications or noisy environments. The service integrates natively with other AWS services like Lambda for serverless applications and S3 for audio storage.

Pricing Structure

Amazon uses a pay-per-character model with tiered pricing: Standard voices cost $4.00 per 1 million characters for the first 100 million characters, with volume discounts available. Neural voices cost $16.00 per 1 million characters. There's also a free tier of 5 million characters per month for the first 12 months.

Best Use Cases

- AWS-based applications and serverless architectures

- Enterprise applications requiring noise-reduced speech

- News reading or conversational interfaces

- Long-form content like audiobooks

Limitations

Polly has fewer voice options compared to Google, particularly for languages other than English. The emotional range is more limited than some competitors, and the custom voice feature (Amazon Polly Brand Voice) requires enterprise consultation and significant investment. Some users report less natural emotional expression compared to ElevenLabs or Play.ht.

Microsoft Azure Cognitive Services TTS: Enterprise-Grade with Emotional Intelligence

Microsoft's text-to-speech service, part of Azure Cognitive Services, emphasizes emotional expression and enterprise integration. It offers 400+ neural voices across 140+ languages and variants, making it one of the most linguistically diverse options.

Strengths and Key Features

Azure TTS stands out for its emotional intelligence—voices can express multiple emotions including cheerful, empathetic, and hopeful tones. The "Neural Text-to-Speech" voices use deep learning for natural prosody and intonation. Microsoft recently introduced "Custom Neural Voice" for creating unique branded voices with relatively less data than competitors (as little as 30 minutes of speech).

The service offers real-time synthesis with low latency and batch processing for large volumes. It includes features like audio content creation tool and voice gallery for testing. For regulated industries, Azure offers compliance certifications including HIPAA, GDPR, and FedRAMP.

Pricing Structure

Azure uses a pay-per-character model: Standard (neural) voices cost $16.00 per 1 million characters. Custom Neural Voice requires additional fees for training and hosting. There's a free tier of 5 million characters per month for the first 12 months, and existing Azure credits can be applied.

Best Use Cases

- Enterprise applications requiring compliance certifications

- Emotionally expressive applications (storytelling, customer service)

- Global applications needing rare languages or dialects

- Branded voice creation with limited training data

Limitations

While offering many voices, some users find consistency varies across different languages. The Azure portal and documentation can be overwhelming for beginners. Custom voice creation, while requiring less data, still involves a Microsoft review process for ethical compliance.

ElevenLabs: Specialized in Emotional and Creative Voice Synthesis

ElevenLabs has gained attention for exceptionally natural and emotionally expressive voices, particularly for creative content. While newer than the cloud giants, it focuses specifically on high-quality speech synthesis with advanced emotional control.

Strengths and Key Features

ElevenLabs excels at emotional nuance and creative expression. The "Voice Library" includes diverse character voices suitable for storytelling, gaming, or animation. A standout feature is "Voice Cloning" which can create synthetic voices from just minutes of sample audio, though this raises ethical considerations we'll discuss later.

The API offers fine-grained control over stability (consistency), similarity (to original voice), and style exaggeration. It supports real-time streaming and includes a "Speech-to-Speech" feature that modifies existing audio recordings. The platform is designed specifically for creative professionals with an intuitive interface.

Pricing Structure

ElevenLabs uses a subscription model with character limits: Starter ($5/month for 30,000 characters), Creator ($22/month for 100,000 characters), Pro ($99/month for 500,000 characters), and Scale ($330/month for 2,000,000 characters). Custom voice cloning requires higher tiers.

Best Use Cases

- Creative content: audiobooks, animation, gaming

- Applications requiring strong emotional expression

- Projects needing specific character voices

- Voice cloning for limited, ethical use cases

Limitations

ElevenLabs has fewer languages (currently about 30) compared to larger providers. The subscription model can be expensive for high-volume applications. As a newer company, it has less enterprise compliance documentation and shorter track record for reliability. Voice cloning features require careful ethical consideration and consent management.

Play.ht: Focused on Publishing and Content Creation

Play.ht positions itself as a content creation platform with TTS at its core, offering tools specifically for publishers, bloggers, and content marketers. It provides 900+ AI voices across 142 languages.

Strengths and Key Features

Play.ht offers an extensive voice library with strong emphasis on publishing workflows. Features include article import from URLs, audio embedding for websites, and podcast RSS feed generation. The platform includes voice styling controls and supports SSML for advanced customization.

A unique feature is "Ultra-Realistic Voices" that use proprietary technology for enhanced naturalness. The service offers both API access and a web interface for manual audio generation. For businesses, it provides white-label options and team collaboration features.

Pricing Structure

Play.ht uses tiered subscriptions: Creator ($19/month for 500,000 characters), Unlimited ($39/month for unlimited standard voices), Professional ($99/month for unlimited premium voices). Enterprise plans offer custom pricing. The "unlimited" tiers make it cost-effective for high-volume publishing.

Best Use Cases

- Content publishers and bloggers creating audio versions

- Podcast generation from written content

- Educational content with diverse voice needs

- Teams needing collaborative audio creation workflows

Limitations

While offering many voices, quality consistency varies. The API is less developer-focused than Google or Amazon, with fewer integration examples for custom applications. Some advanced features require higher subscription tiers.

IBM Watson Text to Speech: Enterprise Focus with Customization

IBM Watson Text to Speech emphasizes enterprise customization and multilingual support, with 100+ voices across 20+ languages including some less common options.

Strengths and Key Features

Watson TTS offers strong customization through its "Customization Interface" for pronunciation rules and voice models. It supports both neural and concatenative synthesis, with the former providing more natural output. The service includes emotional SSML tags and speaking rate control.

For enterprises, IBM offers on-premise deployment options and strong compliance certifications. The "Speech Synthesis Markup Language" support is comprehensive, allowing detailed control over pronunciation, volume, and pitch. IBM recently introduced "Expressive Neural Voices" with improved emotional range.

Pricing Structure

IBM uses a pay-per-character model: Standard voices cost $0.02 per 1,000 characters (approximately $20 per million), while neural voices cost $0.06 per 1,000 characters ($60 per million). There's a free tier of 10,000 characters per month.

Best Use Cases

- Enterprise applications requiring on-premise deployment

- Applications needing detailed pronunciation customization

- Regulated industries with specific compliance requirements

- Multilingual applications with less common language needs

Limitations

IBM's pricing is higher than major cloud providers for comparable quality. The platform has less frequent updates to its voice portfolio. Some users find the documentation and onboarding process more complex than competitors.

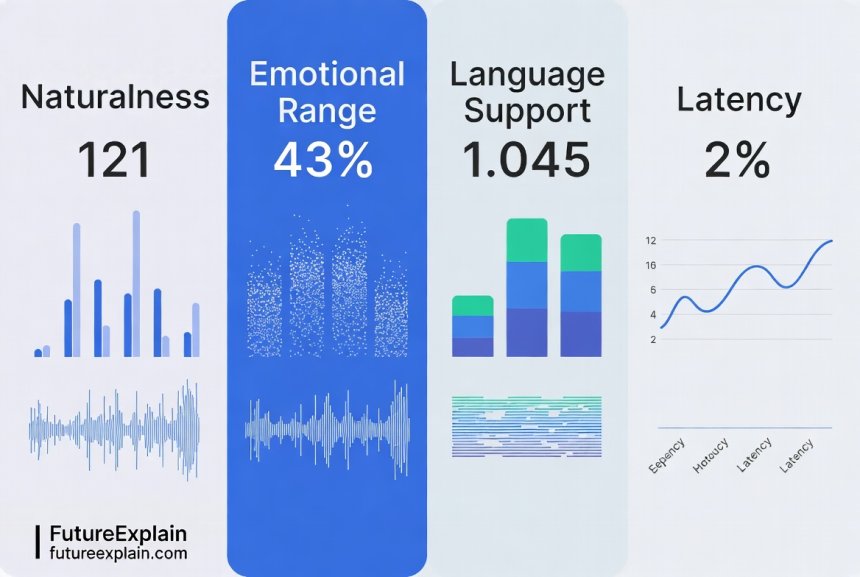

Side-by-Side Comparison: Key Metrics and Decision Factors

To help visualize the differences, here's a comparative analysis of key metrics across the six providers:

Voice Quality and Naturalness Ranking

Based on independent testing and user feedback: 1) ElevenLabs (for emotional expression), 2) Google WaveNet, 3) Microsoft Azure Neural, 4) Amazon Polly Neural, 5) Play.ht Ultra-Realistic, 6) IBM Watson Neural. However, this varies by language—for non-English content, Google and Microsoft typically lead.

Language Support Breadth

1) Microsoft Azure (140+ languages), 2) Google Cloud (50+ languages), 3) Play.ht (142 languages but varying quality), 4) Amazon Polly (30+ languages), 5) IBM Watson (20+ languages), 6) ElevenLabs (30 languages but expanding).

Cost Efficiency for Different Scales

For low volume (<100K characters/month): ElevenLabs Creator or free tiers. For medium volume (100K-5M characters/month): Amazon Polly or Google Cloud. For high volume (>5M characters/month): Microsoft Azure with enterprise agreement or Play.ht Unlimited tier.

Latency Performance

Real-time latency (first byte to audio): Google (150-300ms), Amazon (200-350ms), Microsoft (250-400ms), ElevenLabs (300-500ms), IBM (350-600ms), Play.ht (400-700ms). These are approximate and depend on region and voice type.

Emotional Range and Expressiveness

1) ElevenLabs, 2) Microsoft Azure, 3) Amazon Polly (with speaking styles), 4) Google Cloud, 5) Play.ht, 6) IBM Watson. ElevenLabs leads in nuanced emotional control, while Microsoft offers predefined emotional tones.

Implementation Considerations and Best Practices

Choosing a TTS API involves more than comparing feature lists. Consider these implementation factors:

Integration Complexity

Google, Amazon, and Microsoft offer extensive SDKs for multiple programming languages and pre-built integrations with their respective cloud ecosystems. ElevenLabs and Play.ht provide simpler REST APIs but fewer framework integrations. Consider your team's existing skills and infrastructure when evaluating integration effort.

Audio Format Support

Most APIs support MP3, OGG, WAV, and WebM formats. For web applications, consider Opus/WebM for better compression. For telephony systems, check support for μ-law or A-law formats. Some APIs offer advanced features like speech marks (word-level timing data) useful for highlighting text as audio plays.

Caching Strategies for Cost Optimization

Implement audio caching to avoid regenerating identical content. Static content (like product descriptions) can be generated once and stored, while dynamic content (personalized messages) requires real-time synthesis. Consider cache invalidation strategies for content that changes infrequently.

Error Handling and Fallback Mechanisms

Implement robust error handling for API failures. Consider fallback strategies: using a different voice, switching to a lower-quality but more reliable provider, or providing text alternatives. Monitor API response times and error rates to identify issues before they impact users.

Accessibility Compliance

For accessibility applications, ensure your implementation follows WCAG guidelines. Provide controls for playback speed, volume, and the ability to pause/resume. Consider offering multiple voice options since users may have preferences for different voice characteristics.

Ethical Considerations in Text-to-Speech Implementation

As TTS technology advances, ethical considerations become increasingly important, particularly regarding voice cloning and synthetic media:

Voice Cloning and Consent

Always obtain explicit, informed consent before cloning a person's voice. Document consent clearly and specify usage limitations. Consider ethical guidelines even when not legally required—cloning voices of deceased individuals or public figures requires particular care.

Transparency and Disclosure

When using synthetic voices, consider disclosing this to users, especially in contexts where they might reasonably expect human interaction. In entertainment or creative applications, disclosure may be less critical but still worth considering for trust-building.

Preventing Misuse

Implement safeguards against potential misuse: watermarking synthetic audio, maintaining usage logs, and establishing clear acceptable use policies. Some providers offer content moderation tools or require manual review for custom voice creation.

Bias and Representation

Evaluate whether your voice portfolio represents diverse accents, ages, and genders appropriate for your audience. Consider cultural appropriateness of voice characteristics for different regions and applications.

Cost Optimization Strategies

TTS costs can scale quickly with usage. These strategies help manage expenses:

Voice Selection Optimization

Use standard voices for less critical applications and reserve premium neural voices for customer-facing or emotional content. Some providers offer different quality tiers within neural voices—choose appropriately based on use case.

Batch Processing vs. Real-time

For non-interactive content (audiobooks, video voiceovers), use batch processing APIs which may offer better rates. Reserve real-time APIs for interactive applications where latency matters.

Character Optimization

Pre-process text to remove unnecessary characters, whitespace, and formatting. Use abbreviations where appropriate without affecting pronunciation quality. Implement text compression for storage if regenerating frequently.

Predictable Usage Patterns

For predictable, consistent usage, consider enterprise agreements with volume discounts. For variable usage, maintain flexibility with pay-as-you-go options during testing phases.

Multi-provider Strategies

Consider using different providers for different use cases: a premium provider for customer-facing content and a cost-effective provider for internal or less critical applications. Implement abstraction layers to switch providers if needed.

Future Trends in Text-to-Speech Technology

The TTS landscape continues evolving with several emerging trends:

Emotional Intelligence Advances

Future systems will better detect contextual emotion from text and apply appropriate vocal expression automatically, reducing the need for manual SSML tagging. Research in affective computing will enable more nuanced emotional expression.

Personalized Voice Adaptation

Systems will adapt to individual listener preferences, adjusting speaking style, pace, and tone based on user feedback or interaction patterns. This personalization will improve engagement and comprehension.

Reduced Data Requirements for Custom Voices

Advancements in few-shot and zero-shot learning will enable quality custom voices with minimal training data, making branded voices accessible to more organizations while maintaining ethical standards through improved consent verification.

Integrated Multimodal Experiences

TTS will increasingly integrate with visual and interactive elements—synchronized lip movements in avatars, responsive emotional expressions, and interactive storytelling where voice adapts based on user choices.

Edge Computing Integration

On-device TTS will improve, allowing voice generation without cloud dependency for privacy-sensitive applications or offline functionality. This aligns with broader edge AI trends we've covered previously.

Making Your Decision: A Practical Framework

Follow this decision framework to choose the right TTS API for your needs:

- Define Primary Use Case: Identify whether you need emotional expression, multilingual support, low latency, or cost efficiency as your top priority.

- Estimate Usage Volume: Project character/month requirements for initial launch and scaling to 12 months.

- Evaluate Technical Constraints: Consider existing infrastructure, compliance requirements, and integration complexity.

- Test Voice Quality: Generate sample content with each provider using your actual content types and languages.

- Calculate Total Cost: Include not just API costs but also development, maintenance, and potential scaling expenses.

- Plan for Evolution: Choose a provider that can grow with your needs, or architect for potential provider changes.

For most businesses starting with TTS, we recommend beginning with Google Cloud Text-to-Speech or Amazon Polly due to their reliability, comprehensive documentation, and generous free tiers. As needs specialize, consider adding ElevenLabs for emotional content or Microsoft Azure for enterprise compliance requirements.

Getting Started: First Steps with TTS APIs

Begin your TTS implementation with these practical steps:

- Sign up for free tiers on 2-3 providers that seem best suited to your needs

- Generate audio samples of your actual content (not just demo text)

- Implement a simple proof-of-concept integration with your simplest use case

- Gather feedback from target users on voice preference and quality

- Monitor costs during testing to validate pricing assumptions

- Implement basic caching and error handling from the start

Remember that TTS technology improves rapidly—re-evaluate your choice annually as new features and providers emerge. The competitive landscape means today's leader may be surpassed tomorrow, so maintain flexibility in your architecture.

Conclusion: The Right Voice for Your Vision

Choosing a text-to-speech API involves balancing voice quality, cost, features, and integration effort. Each provider we've examined has distinct strengths: Google for ecosystem integration, Amazon for AWS users, Microsoft for emotional range and compliance, ElevenLabs for creative expression, Play.ht for publishing workflows, and IBM for enterprise customization.

The "best" choice depends entirely on your specific requirements, technical context, and budget. By understanding the trade-offs and following the evaluation framework provided, you can select a TTS solution that delivers engaging, natural-sounding speech while managing costs and complexity.

As voice interfaces become increasingly prevalent across applications, investing in the right TTS technology today positions your business for more natural, accessible, and engaging user experiences tomorrow. Whether enhancing accessibility, creating content at scale, or building conversational interfaces, modern TTS APIs offer powerful capabilities that were science fiction just a decade ago.

Further Reading

Share

What's Your Reaction?

Like

1520

Like

1520

Dislike

18

Dislike

18

Love

420

Love

420

Funny

65

Funny

65

Angry

12

Angry

12

Sad

8

Sad

8

Wow

310

Wow

310

Great comprehensive guide! Bookmarked for future reference as we expand our voice features.

What about API rate limits? We hit limits with some providers during peak usage that weren't clearly documented.

Google has 600 requests/minute default, Amazon 1000, Microsoft varies by tier. Always check current docs and request increases before scaling.

As a visually impaired user who relies on TTS daily, I appreciate the accessibility focus. Natural voices make a huge difference in fatigue.

The article helped our team make an informed decision. We went with Google for our main product and ElevenLabs for special features.

What about voice preview before generating full content? Some providers charge for previews, others don't.

Google and Microsoft have free voice preview in their consoles. ElevenLabs charges for all generation. Important consideration for testing!

The decision framework helped us choose Amazon Polly for our startup. Already implemented and working well for our use case.