Legal Landscape: AI Regulation Overview (2024 Update)

This guide provides a clear overview of the rapidly evolving global landscape of AI regulation as of 2024. It explains why governments are creating new rules for artificial intelligence and breaks down the two major regulatory approaches emerging worldwide: the comprehensive, risk-based model pioneered by the European Union's AI Act, and the more fragmented, sector-specific approach taking shape in the United States. The article offers practical, beginner-friendly guidance for businesses and individuals on how to understand their obligations, with steps to classify AI use, check applicable laws, and build responsible governance. It also looks ahead to future trends, including the growing focus on AI safety standards and international cooperation, empowering readers to navigate this new legal environment with confidence.

The world of artificial intelligence is entering a new era—one defined not just by technological breakthroughs, but by legal frameworks. For beginners, business owners, and anyone using AI tools, a wave of new regulations can seem like a complex maze. This guide cuts through the complexity to explain the global AI regulation landscape in 2024, focusing on what the new rules mean for you in simple, practical terms.

Governments worldwide are acting to balance the immense benefits of AI with its potential risks, from privacy violations to algorithmic bias. The goal of these regulations is to foster trustworthy, human-centric AI that drives innovation safely. As a user or developer, understanding this landscape is no longer optional; it's a key part of using technology responsibly and successfully.

We will explore the two dominant regulatory models taking shape: the comprehensive, risk-based approach of the European Union AI Act, and the more decentralized, state-led activity in the United States. We will also provide actionable steps you can take to assess your own compliance needs. By the end, you will have a clear map of the legal terrain and know how to navigate it.

Why Regulate AI? Understanding the Global Push for Rules

Before diving into specific laws, it's important to understand why governments feel the need to regulate AI now. The technology has moved from research labs into our daily lives—influencing job applications, medical diagnoses, news feeds, and creative work. This widespread integration brings undeniable opportunities but also raises significant concerns that existing laws struggle to address[citation:1].

The core motivations for AI regulation include:

- Managing Risk: Preventing tangible harm from AI systems, such as discrimination in hiring, unsafe autonomous vehicles, or manipulation through deepfakes.

- Protecting Rights: Safeguarding fundamental human rights like privacy, non-discrimination, and freedom of expression in the digital space.

- Building Trust: Creating public confidence so that society can embrace AI's benefits. If people don't trust AI, they won't use it.

- Promoting Innovation (Responsibly): Setting clear, predictable rules so companies know what is expected, which can actually fuel investment and innovation within safe boundaries.

As one analysis notes, jurisdictions globally are trying to "strike a balance between encouraging AI innovation and investment, while at the same time attempting to create rules to protect against possible harms"[citation:1]. The challenge is that countries are taking "substantially different approaches," creating a complex patchwork for international businesses[citation:1].

The European Union's AI Act: A Risk-Based Blueprint

The European Union AI Act is the world's first comprehensive horizontal law governing artificial intelligence[citation:9]. It serves as a pioneering blueprint that many other regions are watching closely. Its core philosophy is a risk-based approach, meaning the rules you must follow depend entirely on how risky the EU deems your specific use of AI.

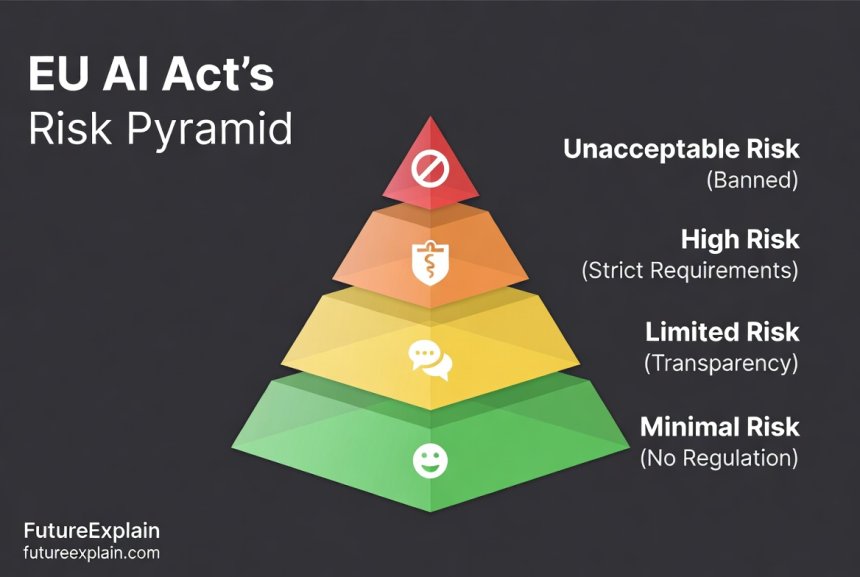

The Four-Tier Risk Pyramid

The EU AI Act categorizes AI systems into four risk levels, each with its own set of obligations.

- Unacceptable Risk (Banned): This tier prohibits AI practices considered a fundamental threat to people's safety and rights. Banned applications include:

  - Social scoring systems that classify people based on behavior or personal characteristics[citation:9].

  - Certain uses of real-time remote biometric identification (like facial recognition) in public spaces, with very limited exceptions for law enforcement[citation:9].

  - AI that manipulates human behavior to bypass free will, or exploits vulnerabilities of specific groups[citation:8].

- High Risk (Stringent Requirements): This is the most regulated category. It covers AI used in critical areas like:

  - Essential services (e.g., healthcare, education, access to benefits).

  - Employment and worker management (e.g., CV-scanning recruitment tools).

  - Safety components of products (e.g., AI in medical devices or cars)[citation:9].

- Limited Risk (Transparency Obligations): This tier includes systems like chatbots or AI that generates content (e.g., ChatGPT, image generators). The main rule here is transparency. Users must be informed they are interacting with an AI. Furthermore, AI-generated content like deepfakes must be clearly labeled as such[citation:9].

- Minimal or No Risk: The vast majority of AI applications, like AI-powered video game characters or spam filters, fall into this category. They face no new restrictions under the AI Act, allowing for free innovation.

Special Rules for General-Purpose AI (GPAI)

A key innovation of the EU AI Act is its separate rules for General-Purpose AI (GPAI) models—like the powerful systems behind ChatGPT or Stable Diffusion. These are foundational models that can be adapted for many different tasks[citation:6].

The Act imposes specific transparency requirements on GPAI providers, such as publishing a detailed summary of the copyrighted data used for training[citation:9]. For the most powerful "high-impact" GPAI models with "systemic risk," there are additional obligations, including mandatory model evaluations, risk assessments, and reporting serious incidents to the European Commission[citation:9].

The EU's approach is designed to set a global standard, much as the GDPR did for data privacy. For any company operating in or targeting the EU market, understanding this risk-based pyramid is the first critical step toward compliance.

The United States: A Decentralized and Evolving Patchwork

In contrast to the EU's centralized framework, the United States has not passed a comprehensive federal AI law. Instead, regulation is emerging through a mix of state-level legislation and actions by existing federal agencies using their current authority[citation:6][citation:8]. This creates a more fragmented, yet dynamic, landscape.

State-Level Leadership

U.S. states have become laboratories for AI regulation. In 2024 alone, 45 states introduced nearly 700 AI-related bills, with 31 states enacting legislation—a more than 300% increase from 2023[citation:6]. Major state laws include:

- Colorado's SB24-205: Often called the nation's first "comprehensive" state AI law, it focuses squarely on preventing discrimination[citation:6]. It regulates "high-risk AI systems" used in consequential decisions like hiring, lending, and healthcare. The law imposes risk assessment and transparency duties, differing for developers and deployers of AI, and requires companies to use "reasonable care" to avoid algorithmic discrimination[citation:6].

- California's Multiple Bills: California, a longtime tech regulator, passed several notable laws in 2024. These include requiring developers to disclose training data used for generative AI (AB 2013), mandating watermarks on AI-generated content (SB 942), and expanding the definition of personal information under its privacy law to include data within AI systems (AB 1008)[citation:6].

- Utah's Transparency Law (SB 149): This law takes a simpler, consumer-focused approach. It requires clear notification when a person is interacting with an AI (like a chatbot or AI phone assistant) in a regulated or consumer context[citation:6].

This patchwork means a business operating across multiple states may need to comply with several different, and sometimes conflicting, sets of rules.

Federal Activity: Executive Orders and Agency Enforcement

At the federal level, the primary driver has been President Biden's October 2023 Executive Order on the Safe, Secure, and Trustworthy Development and Use of AI[citation:8]. This order directed federal agencies to develop standards, guidelines, and policies around AI safety, security, and equity. It set in motion a government-wide effort, but it does not itself create new binding laws for private companies.

More impactful for businesses have been the enforcement actions of existing federal regulators. Agencies like the Federal Trade Commission (FTC) and the Securities and Exchange Commission (SEC) have made it clear they will apply current consumer protection and securities laws to AI[citation:6][citation:8].

For example, the FTC's "Operation AI Comply" has targeted companies for deceptive AI claims, such as fake review generators or misleading "AI Lawyer" services[citation:6]. The FTC has also required companies to destroy AI models trained on improperly collected data[citation:6]. This signals that even without new AI-specific laws, using AI in unfair or deceptive ways carries significant legal risk.

Looking ahead, the push for a more unified national framework is growing. As noted in one analysis, the inconsistent approaches across jurisdictions create challenges, and "international businesses may face substantially different AI regulatory compliance challenges in different parts of the world"[citation:1].

Global Perspectives: A World of Different Approaches

Beyond the EU and U.S., countries are crafting AI policies that reflect their own legal traditions and economic priorities.

- United Kingdom: The UK has opted for a flexible, sector-led approach. Instead of new overarching legislation, it has tasked existing regulators (for finance, healthcare, etc.) with applying core AI principles within their domains[citation:1][citation:8]. This aims to provide agility but may lead to inconsistency across sectors.

- China: China has moved quickly with sector-specific and targeted regulations. Its Interim Measures for the Management of Generative AI Services focuses on content generated for the public, requiring safety assessments, anti-discrimination measures, and labeling of AI-generated content[citation:1]. This reflects a focus on content control and social stability.

- Singapore and Other Nations: Many countries, including Singapore, are starting with voluntary frameworks and guidelines to encourage ethical AI development while observing global trends before enacting hard law[citation:1][citation:3]. Singapore's Model AI Governance Framework for Generative AI, for instance, is a draft for consultation aimed at fostering trusted development[citation:3].

A key challenge for global businesses is that the very definition of "AI" varies from one jurisdiction to another[citation:1]. What is regulated as AI in the EU might not be considered AI under a U.S. state law, making compliance strategies complex.

A Practical Compliance Guide for Beginners and Businesses

For non-technical users and small businesses, the path to compliance is about taking manageable steps. You don't need to be a legal expert. Follow this basic framework to understand your position.

Step 1: Classify Your AI Use

Start by asking simple questions about how you use AI:

- Is it for internal productivity? (e.g., an AI writing assistant for drafting emails).

- Does it make decisions about people? (e.g., screening job resumes, evaluating loan applications).

- Does it interact with customers or the public? (e.g., a customer service chatbot, an AI-generated marketing image).

- Is it a safety-critical component? (e.g., part of a medical or transportation product).

This simple classification will point you toward the correct risk tier in frameworks like the EU AI Act.
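For teams that track their tools in a script or spreadsheet export, the four questions above can be written down as a rough first-pass check. The following is a minimal sketch, not legal advice: the names `AIUse`, `TierHint`, and `tier_hint` are hypothetical, and the mapping from answers to tiers is a simplifying assumption based on the tiers described in this guide. It does not attempt to detect banned practices, and a real classification needs the legal text.

```python
from dataclasses import dataclass
from enum import Enum


class TierHint(Enum):
    """Rough starting points only, not a legal determination."""
    HIGH = "possibly high risk: seek legal review"
    LIMITED = "likely limited risk: transparency duties"
    MINIMAL = "likely minimal risk"


@dataclass
class AIUse:
    # Hypothetical record: one yes/no flag per Step 1 question.
    # A purely internal productivity tool leaves all flags False.
    name: str
    decides_about_people: bool = False
    public_facing: bool = False
    safety_critical: bool = False


def tier_hint(use: AIUse) -> TierHint:
    # Decisions about people and safety components are the areas this
    # guide lists under the high-risk tier, so they are checked first.
    if use.decides_about_people or use.safety_critical:
        return TierHint.HIGH
    # Chatbots and AI-generated content shown to the public mainly
    # trigger transparency obligations.
    if use.public_facing:
        return TierHint.LIMITED
    return TierHint.MINIMAL


if __name__ == "__main__":
    for use in (
        AIUse("resume screener", decides_about_people=True),
        AIUse("support chatbot", public_facing=True),
        AIUse("email drafting assistant"),
    ):
        print(f"{use.name}: {tier_hint(use).value}")
```

The order of the checks is deliberate: a customer-facing tool that also makes decisions about people is flagged under the stricter hint.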

Step 2: Map Your Jurisdictional Reach

Where are your users, customers, or operations located?

- If you have users in the European Union, the EU AI Act likely applies to you.

- If you operate in or target customers in Colorado, California, Utah, or other active states, you must follow their specific laws.

- Even if you're based in one country, if your AI service is accessible elsewhere, you may have cross-border obligations.
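The jurisdiction check above amounts to a lookup from where your users are to which rules deserve a closer read. Here is a minimal sketch of that lookup. The location codes and the `LAWS_BY_LOCATION` table are illustrative assumptions that cover only the laws named in this guide; the table is not a complete or current list.

```python
# Hypothetical lookup table: location code -> laws mentioned in this guide.
# Incomplete by design; extend it with advice from counsel.
LAWS_BY_LOCATION = {
    "EU": ["EU AI Act"],
    "US-CO": ["Colorado SB24-205"],
    "US-CA": ["California AI laws passed in 2024 (e.g., AB 2013, AB 1008)"],
    "US-UT": ["Utah SB 149"],
}


def laws_to_review(user_locations):
    """Return the laws to look at, in first-seen order, without duplicates."""
    found = []
    for location in user_locations:
        # Locations missing from the table contribute nothing here, which
        # means "not covered by this sketch", not "unregulated".
        for law in LAWS_BY_LOCATION.get(location, []):
            if law not in found:
                found.append(law)
    return found


if __name__ == "__main__":
    print(laws_to_review(["EU", "US-UT", "EU"]))
```

An empty result only says the table has no entry for those locations, so treat it as a prompt to research further rather than as clearance.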

Step 3: Implement Foundational Governance

Good governance is the cornerstone of compliance across all regulations. You can start today by:

- Creating an Inventory: Document what AI tools and systems your organization uses, who supplies them, and for what purposes.

- Prioritizing Transparency: Be clear with users when they are interacting with AI. Label AI-generated content. Update your privacy policy to explain AI use.

- Conducting a Basic Impact Check: For any AI that makes significant decisions, ask: Could it produce biased or inaccurate results? Do we have a human reviewing its outputs?

- Choosing Vendors Wisely: When buying AI software, ask the vendor about their compliance posture, especially regarding data used for training and bias mitigation.
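An inventory can start as a simple structured record per tool. The sketch below shows one possible shape, combining the inventory, transparency, and impact-check items from the list above. The field names and the `open_questions` helper are hypothetical choices made for illustration, not a required or standard format.

```python
from dataclasses import dataclass


@dataclass
class InventoryEntry:
    # Hypothetical fields: what the tool is, who supplies it, why it is used.
    tool: str
    vendor: str
    purpose: str
    makes_significant_decisions: bool = False
    human_review: bool = False
    users_told_about_ai: bool = False


def open_questions(entry: InventoryEntry) -> list:
    """List the governance gaps this entry still needs to address."""
    issues = []
    # Impact check: significant decisions should have a human in the loop.
    if entry.makes_significant_decisions and not entry.human_review:
        issues.append("no human review of significant decisions")
    # Transparency: people should know when AI is involved.
    if not entry.users_told_about_ai:
        issues.append("AI use not disclosed to users")
    return issues


if __name__ == "__main__":
    screener = InventoryEntry(
        tool="resume screener",
        vendor="ExampleVendor",  # placeholder name
        purpose="shortlisting job applicants",
        makes_significant_decisions=True,
    )
    for issue in open_questions(screener):
        print(f"{screener.tool}: {issue}")
```

Keeping the record this small makes it easy to fill in for every tool, and the list of open questions gives a concrete agenda for the next review.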

As one resource advises, businesses should "monitor regulatory developments and consider whether to submit comments or otherwise be involved in legislative or regulatory rulemaking on issues affecting their interests"[citation:8]. Staying informed is a key part of the process.

The Future of AI Regulation: Trends to Watch

The regulatory landscape will continue to evolve rapidly. Here are key trends that will shape the next few years:

- Harmonization Efforts: The current patchwork is difficult for global trade. Expect increased efforts for international alignment, similar to the UK's global AI Safety Summit in 2023[citation:1]. Standards bodies like ISO are developing AI management system standards (like ISO/IEC 42001) that may become globally accepted benchmarks[citation:4].

- Focus on AI Safety and "Frontier" Models: Regulations will increasingly distinguish between everyday AI and the most powerful "frontier" or general-purpose models. Special rules for testing, risk monitoring, and security for these advanced systems are likely[citation:3][citation:9].

- Liability and Accountability: Laws will clarify who is responsible when an AI causes harm—the developer, the deployer, or the user? The EU is already working on an AI Liability Directive to address this[citation:8].

- Sector-Specific Rules Deepen: Beyond general laws, expect detailed regulations for AI in sensitive sectors like healthcare, finance (with rules like the EU's DORA)[citation:4], and critical infrastructure.

The journey toward stable, clear, and effective AI regulation is just beginning. For individuals and businesses, cultivating a mindset of responsible and informed AI use is the best preparation for whatever comes next.

Conclusion

Navigating AI regulation in 2024 is about understanding a shifting landscape, not memorizing static rules. The core principles emerging globally are risk management, transparency, and accountability. Whether you're following the EU's detailed risk pyramid or complying with a specific U.S. state's anti-bias law, the goal is the same: to harness the power of AI while protecting people's rights and safety.

By starting with the simple steps of classifying your AI use, understanding your geographic obligations, and building basic governance, you can move forward with confidence. The future of AI will be built not just by coders, but by all of us who use, manage, and regulate this transformative technology thoughtfully.

The final conclusion is spot on: it's about a mindset shift. Responsible AI isn't a bolt-on; it has to be part of the design and culture from the start. Regulations are just forcing functions for what we should have been doing anyway.

As a student studying tech policy, this is going straight into my bookmarks. Perfect blend of high-level concepts and practical advice. The citations to official sources (like the European Parliament page) are also really valuable for my papers.

The FTC's "Operation AI Comply" targeting fake review AI tools is a great example of using existing law. You don't always need new AI laws to tackle AI problems. False advertising is already illegal.

Thanks for a great resource. Shared this with my entire product team. We're building new features and "Classify Your AI Use" is now a mandatory step in our design sprint process.

The article mentions the UK's sector-led approach. As someone in fintech, I actually prefer that. Having our financial regulator set AI rules tailored to banking risks seems more sensible than a one-size-fits-all law.

I work for a non-profit that advocates for digital rights. It's encouraging to see mainstream tech blogs like FutureExplain covering regulation in such an accessible way. Building public understanding is step one to holding companies and governments accountable.