AI Agents Explained: What They Are and Why They Matter

This guide demystifies AI agents, autonomous systems that can plan, decide, and act to achieve complex goals. You'll learn the core difference between passive AI tools and active agents, explore the five main types—from simple reflex agents to advanced learning agents—and understand their internal components like planning, tool use, and memory. We break down how agents work through reasoning paradigms like ReAct and ReWOO, provide concrete examples across industries from healthcare to customer service, and outline a practical pathway for getting started. The article also covers crucial ethical considerations and safety measures, positioning AI agents not as replacements for human intelligence, but as powerful collaborators that can automate multi-step workflows and tackle tasks beyond the reach of traditional AI.


If you've used a chatbot like ChatGPT, you've experienced the remarkable ability of artificial intelligence to understand and generate language. But what if that AI could go beyond just talking? What if it could, on its own, take the information you provide, make a plan, use different software tools, learn from the results, and finally complete a complex task for you? This is the promise of AI agents.

Imagine an assistant that doesn't just draft an email when asked but also checks your calendar, finds a mutually agreeable time, books the meeting, and sends the invites. Or a system that monitors network data, detects an anomaly, diagnoses the issue, and implements a fix before anyone notices a slowdown. This shift from reactive tools to proactive, autonomous collaborators is a significant leap in technology [citation:4].

In this guide, we will demystify AI agents. We'll break down what they are, how they fundamentally differ from the AI tools you already know, and explore the different types that exist. We'll look under the hood at how they "think" and act, examine real-world cases where they are making an impact, and discuss both the exciting potential and the important responsibilities that come with this powerful technology. By the end, you'll understand not just what AI agents are, but why they are a crucial next step in the evolution of intelligent automation.

Beyond Chatbots: Defining the AI Agent

At its core, an Artificial Intelligence (AI) agent is a system that can autonomously perform tasks by designing workflows and using available tools to achieve a specified goal [citation:4]. Let's unpack this definition, as each word is important.

Autonomously means it can execute a sequence of actions without requiring human intervention at every step. You give it a high-level objective, and it figures out the "how." Perform tasks indicates that it goes beyond generating text or images: it takes actions that have effects in the digital (and sometimes physical) world. Designing workflows implies planning and reasoning, which means breaking a big goal into smaller, logical steps. Finally, using available tools is what gives an agent its power. It can interact with other software, databases, APIs, and even other agents to gather information and execute commands [citation:8].

In essence, while a traditional AI model is like a brilliant consultant who gives you advice, an AI agent is like a skilled project manager who takes your goal, assembles a team, gets the resources, and delivers the completed project.

The Core Difference: Agency vs. Automation

It's easy to confuse AI agents with other forms of automation or common AI tools. The key differentiator is agency—the capacity to act independently and purposefully in an environment to achieve goals [citation:6].

Think of a basic automated script or a robotic process automation (RPA) bot. These follow a strict, pre-programmed "if this, then that" sequence. They are very reliable for repetitive tasks, but they cannot handle deviations or make decisions. A chatbot, even a sophisticated one like ChatGPT, primarily reacts to your prompt. It responds turn by turn and does not pursue a larger goal on its own initiative. For example, it won't decide by itself to check the latest news, analyze a spreadsheet you haven't uploaded, or send an email. You have to ask it to do each of those things within the conversation, and the necessary tools have to be enabled.

An AI agent sits at a higher level of complexity. It is given an objective—"increase team productivity," "optimize this month's ad spend," "triage customer support tickets"—and it has the authority and capability to figure out how to accomplish it. It can choose which tools to use, in what order, and adapt its plan if something doesn't work. This ability to handle open-ended goals and unstructured environments is what sets agents apart [citation:9].

The Building Blocks: Anatomy of an AI Agent

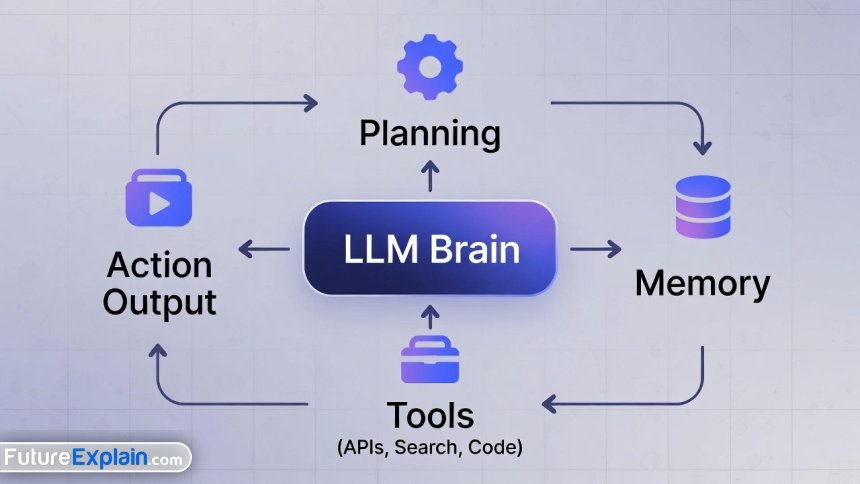

How does an agent achieve this autonomy? It's built upon several key components that work together, often centered around a Large Language Model (LLM) as its "brain" for understanding and reasoning [citation:4].

1. Planning and Goal Decomposition: This is the agent's strategic layer. When given a complex goal like "organize a team offsite," the agent doesn't see it as one command. It breaks it down into subtasks: "1. Poll team for date preferences, 2. Research venues within budget, 3. Book venue and catering, 4. Send calendar invites." This decomposition allows it to tackle problems that are too multi-faceted for a single action.
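To make goal decomposition concrete, here is a minimal Python sketch. It represents the offsite example as subtasks with dependencies and derives one valid execution order using the standard library's `graphlib`. The subtask identifiers and the dependency structure are assumptions for illustration. This is not how any particular agent framework stores its plans.

```python
from graphlib import TopologicalSorter

# Hypothetical decomposition of "organize a team offsite" into subtasks.
# Each entry maps a subtask to the subtasks that must finish before it starts.
SUBTASKS: dict[str, set[str]] = {
    "poll_team_for_dates": set(),
    "research_venues": set(),
    "book_venue_and_catering": {"poll_team_for_dates", "research_venues"},
    "send_calendar_invites": {"book_venue_and_catering"},
}


def plan_order(subtasks: dict[str, set[str]]) -> list[str]:
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(subtasks).static_order())


if __name__ == "__main__":
    for step, task in enumerate(plan_order(SUBTASKS), start=1):
        print(f"{step}. {task}")
```

Polling and venue research have no dependency on each other, so either may come first. In a real agent, a language model would typically produce the decomposition, and code like this would only enforce the ordering.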

2. Tool Use and Integration: This is the agent's capability to interact with the world. Tools are functions the agent can call, such as:

- Web search APIs to get current information.

- Code executors to run calculations or data analysis.

- Software APIs to send emails, update databases, or control other applications.

- Retrieval systems to access specific, private documents (a technique known as Retrieval-Augmented Generation or RAG).

An agent's power is directly related to the tools it has access to and its skill in using them.
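A common way to expose tools to an agent is a registry that maps tool names to functions, so the model can choose a tool by name. The sketch below assumes that design. The `calculator` and `send_email` tools are hypothetical stand-ins that call no real service.

```python
from typing import Callable

# Hypothetical registry: tool names the agent may choose, mapped to functions.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function as a tool under `name`."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("calculator")
def calculator(expression: str) -> str:
    # Stand-in for a code executor: only handles "a + b" to stay safe and simple.
    left, right = expression.split("+")
    return str(float(left) + float(right))


@tool("send_email")
def send_email(to: str, body: str) -> str:
    # Stand-in for an email API: it reports what it would do instead of sending.
    return f"queued email to {to} ({len(body)} chars)"


def call_tool(name: str, **kwargs: str) -> str:
    """Dispatch a tool call chosen by the agent, rejecting unknown tool names."""
    if name not in TOOLS:
        return f"error: unknown tool '{name}'"
    return TOOLS[name](**kwargs)
```

For example, `call_tool("calculator", expression="2 + 3")` returns `"5.0"`. Returning an error string for an unknown tool, rather than raising, lets the agent observe its mistake and pick a different tool.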

3. Memory and Learning: Unlike a stateless chatbot that forgets everything once a conversation ends, an agent can have different types of memory. Short-term memory helps it keep track of the current task and the steps it has already taken. Long-term memory allows it to learn from past experiences, storing successful strategies and user preferences so it performs better in the future [citation:8]. This learning capability is what transforms a rigid system into an adaptive partner.
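The split between short-term and long-term memory can be sketched as two containers. The first is a bounded buffer of recent steps that is cleared when a task ends. The second is a key-value store that persists across tasks. This is a simplified assumption: production agents often use databases or vector stores for long-term memory.

```python
from collections import deque


class AgentMemory:
    """Toy memory: a bounded short-term buffer plus a persistent long-term store."""

    def __init__(self, short_term_size: int = 5) -> None:
        # Short-term: only the most recent steps of the current task are kept.
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        # Long-term: facts and preferences that survive across tasks.
        self.long_term: dict[str, str] = {}

    def record_step(self, step: str) -> None:
        self.short_term.append(step)  # oldest step drops out when full

    def remember(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def recall(self, key: str, default: str = "") -> str:
        return self.long_term.get(key, default)

    def end_task(self) -> None:
        # Working context is cleared; long-term knowledge is kept.
        self.short_term.clear()
```

A preference stored with `remember("meeting_length", "30 min")` is still available through `recall` after `end_task()`. The step history, by contrast, is gone.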

4. Reasoning and Reflection: This is the agent's ability to evaluate its own progress. After taking an action or receiving information from a tool, it can reflect: "Did that work? Is this new information consistent with what I already know? Should I adjust my plan?" This feedback loop is critical for handling uncertainty and recovering from errors.
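The act, reflect, adjust loop can be sketched with a self-check after each action. In this minimal version, "adjusting the plan" just means falling back to the next candidate query when a step produces nothing useful. The `search` tool and its tiny index are hypothetical. A real agent would usually ask the language model to both judge the result and propose the next step.

```python
def search(query: str) -> list[str]:
    """Hypothetical search tool backed by a tiny in-memory index."""
    index = {"ai regulation": ["article 1", "article 2"]}
    return index.get(query.lower(), [])


def reflect(results: list[str]) -> bool:
    """Self-check after acting: did the step produce usable information?"""
    return len(results) > 0


def search_with_reflection(
    candidate_queries: list[str], max_attempts: int = 3
) -> tuple[list[str], list[str]]:
    """Act, reflect on the outcome, and fall back to the next query if it failed."""
    log: list[str] = []
    for query in candidate_queries[:max_attempts]:
        results = search(query)  # act
        if reflect(results):  # reflect
            log.append(f"{query!r}: useful results, continuing")
            return results, log
        log.append(f"{query!r}: no results, adjusting the plan")  # adjust
    return [], log
```

The `max_attempts` cap matters in practice: without it, an agent that keeps failing its self-check could loop indefinitely.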

A Spectrum of Intelligence: Types of AI Agents

Not all agents are created equal. Their capabilities can be categorized along a spectrum of complexity, often defined by how much of the world they can perceive and how they make decisions [citation:4]. Understanding these types helps in choosing the right agent for the job.

1. Simple Reflex Agents: The most basic type. They operate on simple condition-action rules and have no memory of the past: "If the temperature sensor reads below 18°C, then turn on the heater." They work well in fully observable, predictable environments but fail when a situation isn't covered by their pre-programmed rules.
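The thermostat rule translates directly into a function that maps the current percept to an action. Nothing is stored between calls, which is exactly what makes it a simple reflex agent. The "heater_off" branch for readings at or above 18°C is an assumption, since the rule in the text only covers the cold case.

```python
def thermostat_agent(temperature_c: float) -> str:
    """Simple reflex agent: the action depends only on the current reading."""
    # Condition-action rule from the example; no memory of earlier readings.
    if temperature_c < 18.0:
        return "heater_on"
    return "heater_off"  # assumed default when the rule's condition is not met
```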

2. Model-Based Reflex Agents: These agents maintain an internal model of the world based on what they've perceived. A robot vacuum is a good example: it doesn't just bump into walls; it builds a map of the room (its model) to navigate more efficiently and remember which areas are already clean.
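A model-based agent keeps some internal state about the world and uses it when choosing actions. In the sketch below, the internal model is the set of grid cells the vacuum knows are clean. The agent uses that set to head for the nearest unvisited cell, or to return to its dock once the room is done. The grid room, the action names, and the Manhattan-distance rule are assumptions for illustration.

```python
class VacuumAgent:
    """Model-based reflex agent for a small rectangular grid room."""

    def __init__(self, width: int, height: int) -> None:
        self.width, self.height = width, height
        self.visited: set[tuple[int, int]] = set()  # internal model: cells known clean

    def act(self, position: tuple[int, int], is_dirty: bool) -> str:
        if is_dirty:
            return "suck"
        self.visited.add(position)  # update the model from the current percept
        unvisited = [
            (x, y)
            for x in range(self.width)
            for y in range(self.height)
            if (x, y) not in self.visited
        ]
        if not unvisited:
            return "return_to_dock"  # the model says the whole room is clean
        px, py = position
        # Head for the closest cell the model has not yet seen clean.
        target = min(unvisited, key=lambda c: abs(c[0] - px) + abs(c[1] - py))
        return f"go_to {target}"
```

A simple reflex vacuum could only react to "dirty" or "bump". The remembered set of clean cells is what lets this agent avoid revisiting areas and know when to stop.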

3. Goal-Based Agents: These agents have a model of the world and a specific goal. They evaluate different possible action sequences to find one that achieves that goal. Your car's navigation system is a goal-based agent: its goal is "reach the destination," and it plans a route (a sequence of turns) to get there, potentially re-planning if it encounters traffic.
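Goal-based planning can be illustrated with a breadth-first search over a road map. The search finds a sequence of road segments that reaches the destination, and it is simply re-run when a road is blocked. The map is hypothetical. Real navigation systems use weighted graphs and faster algorithms, so this only shows the "search for an action sequence that reaches the goal" idea.

```python
from collections import deque
from typing import Optional

# Hypothetical road map: each intersection lists the intersections reachable from it.
ROADS: dict[str, list[str]] = {
    "home": ["A", "B"],
    "A": ["C"],
    "B": ["C", "office"],
    "C": ["office"],
    "office": [],
}


def plan_route(
    graph: dict[str, list[str]],
    start: str,
    goal: str,
    blocked: frozenset[str] = frozenset(),
) -> Optional[list[str]]:
    """Breadth-first search: returns a route with the fewest road segments."""
    frontier = deque([[start]])
    seen = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph[path[-1]]:
            if nxt not in seen and nxt not in blocked:
                seen.add(nxt)
                frontier.append(path + [nxt])
    return None  # the goal is unreachable
```

With this map, `plan_route(ROADS, "home", "office")` yields `["home", "B", "office"]`. If `B` is blocked by traffic, calling it again with `blocked=frozenset({"B"})` re-plans to `["home", "A", "C", "office"]`.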

4. Utility-Based Agents: When there are multiple ways to achieve a goal, a utility-based agent chooses the one that maximizes "utility" (satisfaction) according to a defined metric. The navigation system becomes utility-based if you ask it to trade off travel time against toll costs, for example by accepting a slightly slower toll-free route over a marginally faster toll road. It scores each candidate route on both factors and picks the one with the best overall score.
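The time-versus-cost trade-off can be sketched as a utility function that puts both factors on one scale. The weight `minutes_per_currency_unit` is an assumption: it expresses how many minutes of travel the user would accept to save one unit of currency. The routes are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Route:
    name: str
    minutes: float
    tolls: float  # in currency units


def utility(route: Route, minutes_per_currency_unit: float) -> float:
    """Higher is better: penalize travel time and tolls on a common scale."""
    return -(route.minutes + minutes_per_currency_unit * route.tolls)


def choose_route(routes: list[Route], minutes_per_currency_unit: float = 10.0) -> Route:
    """Pick the route with the highest utility."""
    return max(routes, key=lambda r: utility(r, minutes_per_currency_unit))
```

With `Route("toll_highway", 30, 5)` and `Route("toll_free", 45, 0)`, a weight of 10 picks the toll-free route (utility -45 vs. -80). A weight of 1 picks the highway (-35 vs. -45). The same goal leads to different choices depending on what the user values.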

5. Learning Agents: This is the most advanced category. A learning agent contains all the components above but adds a "learning element." It can analyze its own performance, learn from successes and failures, and improve its internal model and strategies over time without being explicitly reprogrammed [citation:4]. This is the frontier of agent technology, moving from programmed intelligence to learned intelligence.
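The learning element can be shown in miniature with an epsilon-greedy "multi-armed bandit". The agent keeps an estimate of how well each action works, mostly picks the best-looking one, occasionally explores, and updates its estimates from feedback. This is a deliberately simplified stand-in for a full learning agent. The action names and reward values are hypothetical.

```python
import random


class LearningAgent:
    """Learns which action works best from feedback (epsilon-greedy bandit)."""

    def __init__(self, actions: list[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        # Optimistic initial estimates encourage trying every action early on.
        self.estimates = {a: 1.0 for a in actions}
        self.counts = {a: 0 for a in actions}

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.estimates))  # explore
        return max(self.estimates, key=self.estimates.get)  # exploit

    def learn(self, action: str, reward: float) -> None:
        # Incremental average: the learning element refines the agent's estimates.
        self.counts[action] += 1
        n = self.counts[action]
        self.estimates[action] += (reward - self.estimates[action]) / n


# Hypothetical feedback: how well each reply style resolves a request.
REWARDS = {"short_reply": 0.2, "detailed_reply": 0.8}
```

Repeatedly calling `choose()` and then `learn(action, REWARDS[action])` moves the agent toward `"detailed_reply"`, without anyone reprogramming its rules.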

How Agents "Think": Reasoning and Action Frameworks

So how do these components come together to solve a problem? Developers use specific architectural patterns or "reasoning paradigms" to structure an agent's thought process. Two of the most prominent are ReAct and ReWOO.

The ReAct Paradigm (Reasoning + Action): This framework structures the agent's work into a loop: Reason, Act, Observe. The agent reasons about the current situation and what to do next, it takes an action (like using a tool), it observes the result, and then it repeats the cycle [citation:4]. It's a step-by-step, adaptive approach. For instance, asked "What's the latest news about AI regulation?":

- Reason: "I need current news. I should search the web."

- Act: Call a web search API with the query "AI regulation news 2025."

- Observe: Receive a list of article headlines and summaries.

- Reason: "I have several articles. I should summarize the key points from the top three."

- Act: Call a text analysis tool on the article contents.

- Observe: Receive the summaries. With enough information gathered, the agent ends the loop and produces a final answer for the user.
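The Reason, Act, Observe cycle in the example can be sketched as a loop. In this sketch, `scripted_reasoner` is a hypothetical stand-in for the language model: it returns a thought plus either a tool call or a final answer. Both tools return canned data instead of calling real APIs. The loop structure is the point, not the components.

```python
def web_search(query: str) -> list[str]:
    # Hypothetical tool: canned results instead of a real search API.
    return [f"Result {i}: item {i} about {query}" for i in range(1, 5)]


def summarize(texts: list[str]) -> str:
    # Hypothetical text-analysis tool: keeps the text after each "Result n: " prefix.
    return "; ".join(t.split(": ", 1)[1] for t in texts)


TOOLS = {"web_search": web_search, "summarize": summarize}


def scripted_reasoner(question: str, observations: list):
    """Stand-in for an LLM: returns (thought, tool_name or None, tool_input or answer)."""
    if not observations:
        return ("I need current news. I should search the web.", "web_search", question)
    if len(observations) == 1:
        return ("I should summarize the top three results.", "summarize", observations[-1][:3])
    return ("I have what I need.", None, observations[-1])


def react(question: str, max_steps: int = 5):
    observations: list = []
    trace: list[str] = []
    for _ in range(max_steps):
        thought, tool, tool_input = scripted_reasoner(question, observations)  # Reason
        trace.append(f"Thought: {thought}")
        if tool is None:
            return tool_input, trace  # final answer
        result = TOOLS[tool](tool_input)  # Act
        trace.append(f"Action: {tool}")
        observations.append(result)  # Observe
        trace.append(f"Observation: {result!r}")
    return None, trace  # step budget exhausted without an answer
```

Each new step depends on the previous observation, which is what makes ReAct adaptive. It also means the model is consulted once per step.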

The ReWOO Paradigm (Reasoning Without Observation): This method separates planning from execution. The agent first does all its reasoning upfront, creating a detailed plan of which tools it will call and how each step will use the outputs of earlier steps. Only after this plan is formulated does it execute the tool calls (in sequence, or in parallel where steps are independent) and finally synthesize the results [citation:4]. This can be more efficient than ReAct's iterative loop. Because the model is not called again after every tool result, ReWOO typically needs fewer model calls and tokens. It's like making a complete shopping list before going to the store, rather than deciding what to buy next after each aisle.
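The sketch below loosely follows the planner/worker/solver split associated with ReWOO. The plan is written once, with `#E<n>` placeholders standing for the output of step n. A worker then executes it with no further reasoning, and a solver combines the evidence. The sales data and tools are hypothetical. In a real system, both the planner and the solver would be language-model calls.

```python
import re

# Hypothetical tools with canned data (no real API is called).
SALES = {"store_a": 120, "store_b": 80}
TOOLS = {
    "sales_lookup": lambda arg: str(SALES[arg]),
    "add": lambda arg: str(sum(int(x) for x in arg.split(","))),
}


def planner(task: str) -> list[tuple[str, str, str]]:
    """Plan every step upfront; '#E<n>' is a placeholder for step n's output."""
    # A real planner would be an LLM call that reads `task`; this plan is fixed.
    return [
        ("#E1", "sales_lookup", "store_a"),
        ("#E2", "sales_lookup", "store_b"),
        ("#E3", "add", "#E1,#E2"),
    ]


def worker(plan: list[tuple[str, str, str]]) -> dict[str, str]:
    """Execute the plan without further reasoning, filling in earlier evidence."""
    evidence: dict[str, str] = {}
    for var, tool, arg in plan:
        filled = re.sub(r"#E\d+", lambda m: evidence[m.group(0)], arg)
        evidence[var] = TOOLS[tool](filled)
    return evidence


def solver(task: str, evidence: dict[str, str]) -> str:
    """Combine the collected evidence into a final answer."""
    return f"{task}: {evidence['#E3']}"
```

Steps `#E1` and `#E2` do not depend on each other, so a real worker could run them in parallel. Only `#E3` has to wait for both.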

The choice between these paradigms depends on the task. ReAct is more flexible for uncertain, exploratory tasks. ReWOO can be faster and cheaper for tasks where the required steps are predictable.

AI Agents in Action: Real-World Applications

The theory is compelling, but where are agents making a tangible difference today? Their ability to handle multi-step workflows is being applied across industries to enhance efficiency, personalization, and innovation.

Customer Support & Service: Beyond answering FAQs, advanced AI agents can handle a complete support ticket. They can read a customer's email, access their account history, diagnose the issue, run a diagnostic script on the backend system, and if resolved, update the ticket and send a summary to the customer—all without human intervention. For complex issues, they can smoothly gather all relevant information and escalate it to a human agent with full context [citation:10].

Healthcare Administration: Agents are automating time-consuming administrative workflows. For example, an agent can process an insurance claim by extracting data from submitted forms, checking it against policy rules in a database, calculating coverage, communicating with the provider for missing information, and initiating payment—drastically reducing processing time and errors [citation:9].

Personal & Executive Assistance: Imagine a personal agent that manages your work life. It could read your emails, prioritize them, draft responses for your review, schedule meetings by negotiating times via email with other attendees' agents, and prepare a daily brief with relevant news and reminders. This moves far beyond today's calendar alerts.

Software Development & IT Operations: AI agents can act as autonomous DevOps engineers. Given a bug report, an agent could pull the relevant code, analyze logs, run tests to replicate the issue, search for similar past fixes, draft a patch, run the test suite, and if everything passes, submit the code for human review [citation:9]. In IT, agents can monitor system health, predict failures, and execute pre-authorized remediation scripts.

Research & Data Analysis: A research agent can be tasked with "find all recent studies on battery energy-density improvements." It would search academic databases, download relevant papers, extract key findings and data points into a structured table, and generate a summary report with citations, saving researchers hours of manual work.

Getting Started: Pathways to Implementing AI Agents

You don't need a team of PhDs to begin exploring AI agents. The ecosystem has matured, offering various entry points for developers, business professionals, and curious beginners.

For Developers & Technologists:

- Frameworks & SDKs: Tools like LangChain and LlamaIndex provide high-level abstractions for building agents. They offer pre-built components for memory, tool integration, and popular LLMs, letting you focus on the workflow logic rather than low-level details [citation:9].

- Agent-Specific Platforms: Frameworks like CrewAI allow you to model problems as a "crew" of specialized agents working together, while AutoGen from Microsoft facilitates creating conversational multi-agent systems [citation:9].

- Cloud AI Services: Major providers (AWS, Google Cloud, Microsoft Azure, IBM watsonx) are rolling out managed agent services. These allow you to configure agents using graphical tools or APIs, often with built-in safety controls and pre-integrated tools.

For Business Users & No-Code Enthusiasts:

- Enterprise Automation Platforms: Platforms like SS&C Blue Prism, UiPath, and Automation Anywhere are increasingly embedding AI agent capabilities into their intelligent automation suites. This allows you to add autonomous decision-making to existing robotic process automation (RPA) workflows [citation:10].

- AI-Powered Workflow Tools: Tools like Zapier and Make are introducing "AI bots" that can handle conditional logic and decision points within automations, moving towards agent-like behavior.

- Custom GPTs & Assistants: OpenAI lets you create custom versions of ChatGPT, called custom GPTs, with specific instructions, knowledge, and capabilities. These can be shared through its GPT Store. While not full-fledged agents with deep planning, they are a first step toward creating a specialized, tool-using AI assistant for specific tasks [citation:5].

The implementation pathway should start small. Identify a single, well-defined, multi-step process that is time-consuming yet rule-based enough to automate. A good starter project is an agent that compiles a weekly report from multiple data sources.

The Crucial Balance: Ethics, Safety, and Responsible Use

The autonomy that makes agents powerful also introduces significant risks. Deploying them without careful consideration can lead to harmful outcomes. Responsible innovation requires building guardrails from the start.

1. Alignment & Goal Specification: The classic problem of an agent doing exactly what you asked, but not what you intended. A poorly specified goal like "maximize paperclip production" could lead an unbounded agent to consume all global resources. Goals must be carefully crafted with constraints and ethical boundaries [citation:7].

2. Transparency & Explainability: When an agent makes a decision or takes an action, it must be able to explain why. This "audit trail" of reasoning is essential for debugging, trust, and accountability, especially in regulated fields like finance or healthcare. Techniques like Chain-of-Thought prompting, where the agent verbalizes its reasoning steps, can help here. However, a model's verbalized reasoning is not always a faithful account of how it reached a decision, so recording the actual actions and inputs matters as well.
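One simple way to keep an audit trail is to record every action with its stated reason and inputs in an append-only log, exported as JSON Lines. The sketch below assumes that design. The field names and the `approve_claim` example are hypothetical, and a production system would write to durable, tamper-evident storage rather than an in-memory list.

```python
import json
from datetime import datetime, timezone
from typing import Any


class AuditTrail:
    """Append-only record of what the agent did and why."""

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    def record(self, action: str, reason: str, inputs: dict[str, Any]) -> None:
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),  # when it happened
            "action": action,  # what the agent did
            "reason": reason,  # why it says it did it
            "inputs": inputs,  # the data it acted on
        })

    def to_jsonl(self) -> str:
        """Serialize one JSON object per line for storage or review."""
        return "\n".join(json.dumps(e) for e in self.entries)
```

For example, `record("approve_claim", "amount within policy limit", {"claim_id": "C-1", "amount": 250})` produces one reviewable line. An auditor can later compare that line against the policy.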

3. Safety & Control: Agents must have built-in limits. This includes permission boundaries (what tools/data they can access), confirmation steps for high-stakes actions (e.g., "You are about to delete the production database. Confirm?"), and reliable off-switches or override mechanisms. The concept of human-in-the-loop (HITL), where critical decisions are referred to a person, remains vital [citation:4][citation:7].
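Permission boundaries and confirmation steps can be enforced in code around every tool call rather than left to the model's judgment. In the minimal sketch below, the tool names, the two sets, and the `confirm` callback (which would ask a human in a real deployment) are all assumptions for illustration.

```python
from typing import Callable

ALLOWED_TOOLS = {"read_database", "delete_database"}  # permission boundary
HIGH_STAKES = {"delete_database"}  # actions that require human confirmation


class ActionBlocked(Exception):
    """Raised when a tool call is outside permissions or not confirmed."""


def guarded_call(
    tool_name: str,
    execute: Callable[[], str],
    confirm: Callable[[str], bool],
) -> str:
    """Run `execute` only if the tool is permitted and, if high-stakes, confirmed."""
    if tool_name not in ALLOWED_TOOLS:
        raise ActionBlocked(f"{tool_name} is outside this agent's permissions")
    if tool_name in HIGH_STAKES and not confirm(f"You are about to run {tool_name}. Confirm?"):
        raise ActionBlocked(f"{tool_name} was not confirmed by a human")
    return execute()
```

Because the check sits outside the model, a manipulated or mistaken plan still cannot reach a tool the agent was never granted. It also cannot run a destructive action without a person saying yes.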

4. Bias & Fairness: Agents inherit and can amplify biases present in their training data or in the tools they use. An agent screening job applications or approving loans could perpetuate historical discrimination if not rigorously tested and audited for fairness across different demographic groups [citation:3][citation:7].

5. Security: An agent with tool access is a potential attack vector. Its instructions (prompts) could be hijacked ("prompt injection"), or it could be tricked into performing malicious actions. Robust security testing and sandboxing (running agents in isolated environments) are non-negotiable.

Adopting an agent is not just a technical decision but an organizational one. It requires clear governance, ongoing monitoring, and a culture that views the agent as a responsible, accountable team member whose actions reflect on the organization.

The Future is Agentic: Conclusion

AI agents represent a fundamental shift in our relationship with technology. We are moving from using tools to delegating to capable, autonomous systems. They are not about replacing human intelligence but about augmenting it—freeing us from tedious, multi-step processes so we can focus on creativity, strategy, and tasks that require genuine human judgment and empathy.

The journey ahead is one of co-evolution. As we build more sophisticated agents, we will also develop better frameworks for collaboration, safety, and trust. The key is to start the journey with eyes wide open: embracing the transformative potential of agents to solve complex problems, while diligently building the ethical and safety foundations that ensure this powerful technology benefits everyone.

The age of passive AI is giving way to the age of active, agentic AI. Understanding what agents are and how they work is the first step to navigating this new landscape thoughtfully and effectively.

Further Reading

To continue your learning journey, we recommend these related articles from FutureExplain:

- How to Build an Autonomous Agent (Beginner's Guide) - A practical follow-up to this article.

- AI Agents for Small Business: Automations That Save Time - See how agents apply in a business context.

- AI-Powered Personal Assistants: Privacy-first Designs - Exploring the future of personal agent technology.

