Managing Model Bias: Techniques and Checklists

This guide provides a comprehensive overview of managing model bias in AI systems. You'll learn about the different types of bias, how to measure fairness, and practical techniques for mitigation. We include a step-by-step checklist covering data collection, model development, testing, and deployment, plus tools like AI Fairness 360 and Fairlearn. Whether you're a beginner or a professional, this article equips you with the knowledge to build fairer, more responsible AI.

Artificial intelligence is no longer a futuristic concept—it’s a daily reality that influences hiring, lending, healthcare, and countless other high‑stakes decisions. When AI systems work fairly, they can unlock tremendous efficiency and insight. But when they inherit or amplify human biases, they can perpetuate discrimination, deepen social inequalities, and erode trust.

Managing model bias isn’t just a technical challenge; it’s a fundamental requirement for responsible AI. This guide is designed for beginners, non‑technical users, and professionals who want to understand what bias looks like, how to measure it, and what practical steps they can take to mitigate it. We’ll walk through the types of bias, key fairness metrics, proven mitigation techniques, and a detailed checklist you can apply to your own projects. By the end, you’ll have a clear roadmap for building fairer, more trustworthy AI systems.

What Is AI Bias?

AI bias refers to systematic, unfair discrimination embedded in an AI model’s predictions or decisions. It often arises because the model has learned patterns from historical data that reflect existing prejudices, or because the design of the algorithm itself inadvertently favors certain groups. Bias can manifest in many ways: a loan‑approval model that unfairly rejects applicants from certain neighborhoods, a hiring tool that favors male‑sounding names, or a facial‑recognition system that struggles to recognize people with darker skin tones.

Bias can enter an AI system at any stage of its lifecycle—from data collection and labeling to model training, evaluation, and deployment. Understanding where bias comes from is the first step toward managing it. Common sources include:

- Data bias: The training data over‑represents some groups or contains historical prejudices[reference:0].

- Algorithmic bias: The model’s architecture or optimization process inadvertently introduces unfairness[reference:1].

- Human decision bias: Subjective choices made by developers, labelers, or stakeholders seep into the system[reference:2].

- Generative AI bias: Models that create text, images, or video reproduce stereotypes present in their training data[reference:3].

These biases are not always obvious, which is why proactive detection and mitigation are essential. For a look at common misconceptions about what AI can and cannot do, see our article on AI myths vs reality.

Types of AI Bias

Researchers have categorized bias into several distinct types, each requiring a slightly different approach to mitigation. Knowing these categories helps you pinpoint where in your pipeline bias might be lurking.

- Historical bias: Pre‑existing inequalities in the data used for training. If past decisions were biased, the model will likely reproduce them[reference:4].

- Representation bias: The training data does not accurately reflect the population the model is meant to serve, leading to poor performance for underrepresented groups[reference:5].

- Measurement bias: The features used to train the model are imperfect proxies for the real‑world concepts they’re meant to capture (e.g., using credit score as a proxy for financial responsibility)[reference:6].

- Aggregation bias: Applying a one‑size‑fits‑all model to a diverse population without accounting for subgroup variations[reference:7].

- Evaluation bias: The metrics used to assess the model’s performance are not equally valid for all groups, masking unfair outcomes[reference:8].

- Deployment bias: The context in which the model is deployed differs from the context in which it was trained, leading to unintended consequences[reference:9].

Recognizing these bias types is a critical skill for anyone involved in AI development. For a broader discussion of AI’s societal impact, explore our category on AI Ethics & Safety.

Why Managing Model Bias Is Critical

The consequences of unchecked bias extend far beyond technical errors—they can harm individuals, damage businesses, and widen social gaps.

- Discrimination: Biased AI can deny people equal access to jobs, loans, housing, or healthcare based on race, gender, age, or other protected characteristics[reference:10].

- Reinforcement of stereotypes: AI systems that generate biased content or make skewed predictions can perpetuate harmful stereotypes[reference:11].

- Loss of trust: When users discover bias in an AI system, they lose confidence in the technology, hindering its adoption[reference:12].

- Legal and ethical risks: Organizations that deploy biased AI may face lawsuits, regulatory fines, and reputational damage[reference:13].

- Economic costs: Biased algorithms can lead to flawed business decisions, reduced profitability, and lost customers[reference:14].

These risks make bias management a non‑negotiable part of responsible AI development. Our earlier article Is Artificial Intelligence Safe? covers related safety and ethical concerns.

Bias Detection and Measurement

You can’t fix what you don’t measure. Detecting bias requires defining what “fairness” means in your context and using appropriate metrics to quantify deviations.

Key Fairness Metrics

- Demographic parity: Checks whether the model’s positive prediction rate is similar across different demographic groups.

- Equalized odds: Checks whether the model has similar true‑positive and false‑positive rates across groups (equivalently, similar false‑negative and false‑positive rates).

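- Disparate impact: Measures the ratio of the positive‑outcome rate for a protected group to the positive‑outcome rate for a privileged group. A ratio of 1 indicates parity; a common rule of thumb, the "four‑fifths rule" from US employment guidelines, treats ratios below 0.8 as a warning sign.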

- Individual fairness: Assesses whether similar individuals receive similar predictions.
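
The group metrics above are simple enough to compute by hand, which helps when reading the output of a toolkit. The sketch below is a minimal, dependency-free illustration, assuming binary labels and predictions coded 0/1 and a single sensitive attribute given as a list of group labels. All function names are hypothetical, not part of any library; in practice, Fairlearn and AIF360 provide tested implementations. The `consistency` function is one simple probe of individual fairness: it compares each prediction with those of its k nearest neighbours, so it is only as meaningful as the feature space and distance you choose.

```python
import math
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Share of positive predictions (1s) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(y_pred, groups, protected, privileged):
    """Selection rate of the protected group divided by that of the privileged group."""
    rates = selection_rates(y_pred, groups)
    return rates[protected] / rates[privileged]


def equalized_odds_gaps(y_true, y_pred, groups):
    """Largest between-group gaps in true-positive and false-positive rate.

    Assumes every group contains both positive and negative examples.
    """
    tpr, fpr = {}, {}
    for g in set(groups):
        pos = [p for t, p, gg in zip(y_true, y_pred, groups) if gg == g and t == 1]
        neg = [p for t, p, gg in zip(y_true, y_pred, groups) if gg == g and t == 0]
        tpr[g] = sum(pos) / len(pos)
        fpr[g] = sum(neg) / len(neg)
    return (max(tpr.values()) - min(tpr.values()),
            max(fpr.values()) - min(fpr.values()))


def consistency(X, y_pred, k=3):
    """Individual-fairness probe: 1 minus the mean absolute difference between
    each prediction and the average prediction of its k nearest neighbours."""
    n = len(X)
    total = 0.0
    for i in range(n):
        nearest = sorted((math.dist(X[i], X[j]), j) for j in range(n) if j != i)[:k]
        neighbour_mean = sum(y_pred[j] for _, j in nearest) / len(nearest)
        total += abs(y_pred[i] - neighbour_mean)
    return 1 - total / n


# Toy example: group "b" is selected far less often than group "a".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(demographic_parity_difference(y_pred, groups))     # 0.5
print(disparate_impact_ratio(y_pred, groups, "b", "a"))  # 0.333..., below 0.8
print(equalized_odds_gaps(y_true, y_pred, groups))       # (0.5, 0.5)
```

Each metric answers a different question, so a model can look fine on one and poor on another; report several side by side.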

Tools for Bias Detection

Several open‑source toolkits make bias detection accessible even for non‑experts:

- AI Fairness 360 (AIF360): IBM’s comprehensive toolkit that provides dozens of fairness metrics and mitigation algorithms[reference:15].

- Fairlearn: Microsoft’s library for assessing and improving fairness of AI systems.

- Aequitas: An audit toolkit that helps evaluate bias and fairness in datasets and models.

- Google’s What‑If Tool: A visual interface for probing model behavior and fairness.

These tools can be integrated into your development pipeline to check for bias automatically during training and evaluation. For hands‑on walkthroughs, see our Tool Usage Guides.

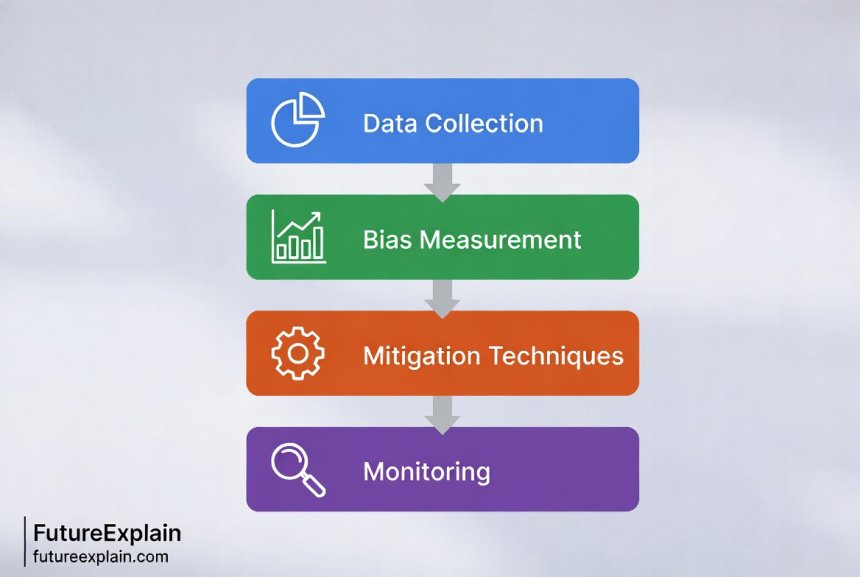

Techniques for Mitigating Bias

Once bias is detected, you can apply a variety of techniques to reduce it. These methods are often grouped into three categories: pre‑processing, in‑processing, and post‑processing.

Pre‑processing Techniques

These methods modify the training data before the model sees it:

- Reweighting: Adjust the weight of examples from different groups to balance their influence.

- Resampling: Oversample underrepresented groups or undersample overrepresented groups.

- Feature transformation: Transform features to reduce their correlation with sensitive attributes (e.g., race or gender).

- Synthetic data generation: Create artificial data points to fill gaps in underrepresented categories.
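
Reweighting can be made concrete with the reweighing scheme of Kamiran and Calders, which AIF360 also offers as a pre-processing algorithm: each example gets the weight P(group) × P(label) / P(group, label), so that after weighting, group membership and label look statistically independent. The sketch below is a minimal illustration assuming one categorical sensitive attribute and discrete labels; `reweighing_weights` and `weighted_positive_rate` are hypothetical helper names.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(labels, weights, groups, group):
    """Weighted share of positive labels (1s) inside one group."""
    num = sum(w for y, w, g in zip(labels, weights, groups) if g == group and y == 1)
    den = sum(w for w, g in zip(weights, groups) if g == group)
    return num / den


groups = ["a"] * 4 + ["b"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]  # 75% positives in "a", 25% in "b"
weights = reweighing_weights(groups, labels)
print(round(weighted_positive_rate(labels, weights, groups, "a"), 3))  # 0.5
print(round(weighted_positive_rate(labels, weights, groups, "b"), 3))  # 0.5
```

The weights leave the data itself untouched; they can be passed to any learner that accepts per-example weights, such as the `sample_weight` argument of scikit-learn's `LogisticRegression.fit`.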

In‑processing Techniques

These methods modify the learning algorithm itself to encourage fairness:

- Fairness constraints: Add mathematical constraints to the optimization process that rule out unfair solutions (for example, requiring selection rates to stay within a set tolerance across groups).

- Adversarial debiasing: Train the main model alongside an “adversary” that tries to predict the sensitive attribute from the model’s predictions; the main model is penalized if the adversary succeeds, pushing it to learn fairer representations[reference:16].

- Regularization: Add a fairness‑related term to the loss function that discourages biased predictions.
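
The regularization idea can be sketched with plain logistic regression: add a penalty proportional to the squared gap in average predicted probability between two groups. This is a minimal, from-scratch illustration assuming a binary sensitive attribute coded 0/1 and small in-memory data; `train_fair_logreg` and its `lam` (penalty strength) parameter are hypothetical names, and the code is neither adversarial debiasing nor any library's API. For constraint-based training at scale, Fairlearn's reductions approach (for example, `ExponentiatedGradient` with a `DemographicParity` constraint) is one established option.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, X):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in X]


def parity_gap(p, groups):
    """Absolute difference in mean predicted probability between groups 0 and 1."""
    m0 = [pi for pi, g in zip(p, groups) if g == 0]
    m1 = [pi for pi, g in zip(p, groups) if g == 1]
    return abs(sum(m0) / len(m0) - sum(m1) / len(m1))


def train_fair_logreg(X, y, groups, lam=0.0, lr=0.5, epochs=2000):
    """Full-batch gradient descent on
    mean binary cross-entropy + lam * (mean_p(group 0) - mean_p(group 1)) ** 2."""
    n, d = len(X), len(X[0])
    n0 = sum(1 for g in groups if g == 0)
    n1 = n - n0
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        p = predict_proba(w, b, X)
        signed_gap = (sum(pi for pi, g in zip(p, groups) if g == 0) / n0
                      - sum(pi for pi, g in zip(p, groups) if g == 1) / n1)
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi, pi, gi in zip(X, y, p, groups):
            # d(loss)/d(logit_i): cross-entropy term plus fairness-penalty term
            share = 1 / n0 if gi == 0 else -1 / n1
            coef = (pi - yi) / n + 2 * lam * signed_gap * share * pi * (1 - pi)
            grad_w = [gw + coef * xj for gw, xj in zip(grad_w, xi)]
            grad_b += coef
        w = [wj - lr * gw for wj, gw in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Toy data: feature 0 marks group membership, feature 1 is a legitimate signal.
X = [[0, 1.0], [0, 0.5], [0, -0.5], [0, -1.0], [0, 0.2], [0, 0.8],
     [1, 1.0], [1, 0.5], [1, -0.5], [1, -1.0], [1, 0.2], [1, -0.8]]
y = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0, 0, 1]
groups = [row[0] for row in X]

plain = train_fair_logreg(X, y, groups, lam=0.0)
fair = train_fair_logreg(X, y, groups, lam=5.0)
print(parity_gap(predict_proba(*plain, X), groups))  # roughly 0.33
print(parity_gap(predict_proba(*fair, X), groups))   # noticeably smaller
```

Raising `lam` shrinks the gap at some cost in fit to the historical labels; where to set it is a policy decision as much as a technical one.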

Post‑processing Techniques

These methods adjust the model’s predictions after they are made:

- Threshold tuning: Apply different decision thresholds for different groups so that a chosen criterion is met, such as equal true‑positive rates or equalized odds.

- Output calibration: Calibrate prediction scores to ensure fairness across groups.

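- Reject option classification: For predictions close to the decision boundary, where the model is least certain, assign the favorable outcome to members of the unprivileged group and the unfavorable outcome to members of the privileged group. A related safeguard is to route such uncertain cases to human review rather than deciding them automatically.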
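
Both post-processing ideas fit in a few lines. The sketch assumes the model outputs a score between 0 and 1 for each example and that you have labelled validation data for every group; all function names are hypothetical. Matching true-positive rates, as done here, implements equal opportunity, which is one half of equalized odds; matching true- and false-positive rates at the same time can require randomized decisions.

```python
import math


def threshold_for_tpr(scores, labels, target_tpr):
    """Highest threshold t such that predicting 1 for score >= t accepts at
    least target_tpr of the positives (assumes 0 < target_tpr <= 1)."""
    positive_scores = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    needed = math.ceil(target_tpr * len(positive_scores))
    return positive_scores[needed - 1]


def group_thresholds(scores, labels, groups, target_tpr):
    """One threshold per group so that every group reaches the same true-positive rate."""
    thresholds = {}
    for g in sorted(set(groups)):
        g_scores = [s for s, gg in zip(scores, groups) if gg == g]
        g_labels = [y for y, gg in zip(labels, groups) if gg == g]
        thresholds[g] = threshold_for_tpr(g_scores, g_labels, target_tpr)
    return thresholds


def reject_option_predict(score, group, threshold, margin, unprivileged):
    """Inside the uncertain band around the threshold, favour the unprivileged
    group; outside it, apply the ordinary threshold."""
    if abs(score - threshold) <= margin:
        return 1 if group == unprivileged else 0
    return 1 if score >= threshold else 0


scores = [0.9, 0.8, 0.4, 0.3, 0.7, 0.5, 0.35, 0.2]
labels = [1, 1, 1, 0, 1, 1, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(group_thresholds(scores, labels, groups, target_tpr=0.6))  # {'a': 0.8, 'b': 0.5}
print(reject_option_predict(0.55, "b", threshold=0.5, margin=0.1, unprivileged="b"))  # 1
print(reject_option_predict(0.55, "a", threshold=0.5, margin=0.1, unprivileged="b"))  # 0
```

Thresholds and margins should be chosen on validation data, never on the test set you report results from.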

Choosing the right technique depends on your specific use case, data, and fairness goals. Often, a combination of approaches works best. For a deeper dive into algorithmic fairness, read our article on Ethical AI Explained.

Checklist for Managing Model Bias

A structured checklist ensures you don’t overlook critical steps. The following checklist, inspired by industry best practices[reference:17], covers the entire AI lifecycle.

Planning & Preparation

- □ Build a diverse team: Include technical experts, domain specialists, ethicists, and end‑user representatives to spot bias early[reference:18].

- □ Establish AI ethics guidelines: Define clear principles for fairness, transparency, and accountability.

- □ Engage decision‑makers: Secure leadership buy‑in to align ethical goals with business priorities.

Data Collection & Preparation

- □ Gather balanced datasets: Ensure data represents all relevant demographic groups. Use oversampling, undersampling, or synthetic data if needed[reference:19].

- □ Clean and document data: Record data origins, transformations, and potential bias sources[reference:20].

- □ Apply debiasing tools: Use tools like AI Fairness 360 or Fairlearn to identify and reduce bias during preprocessing[reference:21].
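
A first balance check compares each group's share of the dataset with its share of the population the model will serve. The sketch assumes you already have reference shares from a source you trust (census figures, your customer base, and so on); the function name and the 0.8 tolerance are illustrative choices, not a standard. Groups missing from the reference dictionary are simply not checked.

```python
from collections import Counter


def representation_report(groups, reference_shares, tolerance=0.8):
    """Flag groups whose share of the dataset falls below
    tolerance * their share of the target population."""
    n = len(groups)
    counts = Counter(groups)
    report = {}
    for group, ref in reference_shares.items():
        share = counts.get(group, 0) / n
        report[group] = {
            "dataset_share": share,
            "reference_share": ref,
            "underrepresented": share < tolerance * ref,
        }
    return report


# Hypothetical data: a 70/20/10 split in the dataset vs 50/30/20 in the population.
groups = ["a"] * 70 + ["b"] * 20 + ["c"] * 10
reference = {"a": 0.5, "b": 0.3, "c": 0.2}
for group, row in representation_report(groups, reference).items():
    print(group, row["dataset_share"], row["underrepresented"])
# a 0.7 False
# b 0.2 True
# c 0.1 True
```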

Model Development

- □ Choose bias‑aware algorithms: Select models that support fairness constraints or adversarial debiasing[reference:22].

- □ Design neutral features: Avoid features that act as proxies for sensitive attributes (e.g., zip code as a proxy for race).

- □ Incorporate fairness constraints: Add fairness criteria directly into the training process.
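
One way to screen a candidate feature for proxy behaviour is to ask how well it alone predicts the sensitive attribute. The sketch below does this for a categorical feature such as zip code: within each category it guesses the most common group, then compares that accuracy with always guessing the overall most common group. It is an illustrative heuristic with hypothetical names; on real data, fit it on one split and evaluate on another, because a feature with many rare categories will look predictive on the data it was fitted to.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, sensitive_values):
    """Return (accuracy of predicting the sensitive attribute from the feature,
    accuracy of always guessing the most common sensitive value)."""
    by_value = defaultdict(Counter)
    for f, s in zip(feature_values, sensitive_values):
        by_value[f][s] += 1
    n = len(sensitive_values)
    # Within each feature value, guess its most common sensitive value.
    hits = sum(counter.most_common(1)[0][1] for counter in by_value.values())
    baseline = Counter(sensitive_values).most_common(1)[0][1]
    return hits / n, baseline / n


zip_codes = ["111", "111", "111", "222", "222", "222"]
group = ["x", "x", "x", "y", "y", "x"]
print(proxy_strength(zip_codes, group))  # (0.833..., 0.666...): zip code carries group information
```

A large gap between the two numbers is a signal to investigate the feature, not proof that it must be dropped.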

Testing & Validation

- □ Conduct bias audits: Evaluate model performance across demographic groups using fairness metrics[reference:23].

- □ Test edge cases: Check how the model behaves for rare or borderline inputs.

- □ Solicit external feedback: Have stakeholders from diverse backgrounds review the model’s outputs.
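
A bias audit usually starts with a per-group breakdown of standard metrics. The sketch assumes binary predictions and one group label per example; group labels can be tuples (for example, gender and age band) to audit intersectional subgroups. `audit_by_group` and its `min_group_size` cut-off, which flags groups too small for their metrics to be reliable, are hypothetical. Fairlearn's `MetricFrame` offers a fuller version of the same idea.

```python
from collections import defaultdict


def audit_by_group(y_true, y_pred, groups, min_group_size=30):
    """Per-group sample count and accuracy, plus the worst-performing group."""
    stats = defaultdict(lambda: [0, 0])  # group -> [count, correct]
    for t, p, g in zip(y_true, y_pred, groups):
        stats[g][0] += 1
        stats[g][1] += int(t == p)
    report = {
        g: {"n": n, "accuracy": correct / n, "small_sample": n < min_group_size}
        for g, (n, correct) in stats.items()
    }
    worst = min(report, key=lambda g: report[g]["accuracy"])
    return report, worst


y_true = [1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1]
groups = [("f", "18-30"), ("f", "18-30"), ("f", "18-30"),
          ("m", "31-50"), ("m", "31-50"), ("m", "31-50")]
report, worst = audit_by_group(y_true, y_pred, groups, min_group_size=5)
print(worst)          # ('m', '31-50')
print(report[worst])  # {'n': 3, 'accuracy': 0.0, 'small_sample': True}
```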

Deployment & Monitoring

- □ Deploy with caution: Start with a limited pilot to observe real‑world behavior.

- □ Implement continuous monitoring: Track fairness metrics in production and set up alerts for drift.

- □ Maintain audit trails: Keep logs of model decisions to enable retrospective analysis.

- □ Update models regularly: Retrain models with new data and re‑evaluate fairness periodically.
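
Continuous monitoring can start as simply as recomputing a fairness metric over each window of logged predictions and alerting when it drifts too far from the value measured at launch. The sketch assumes predictions and group labels are logged together; the window IDs, `baseline_gap`, and `max_increase` are hypothetical, and the "alert" is just a returned list that you would connect to your own alerting system.

```python
from collections import defaultdict


def selection_rate_gap(y_pred, groups):
    """Largest difference in positive-prediction rate between groups."""
    totals, positives = defaultdict(int), defaultdict(int)
    for p, g in zip(y_pred, groups):
        totals[g] += 1
        positives[g] += p
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def fairness_alerts(windows, baseline_gap, max_increase=0.05):
    """windows: iterable of (window_id, y_pred, groups) taken from production logs.
    Returns (window_id, gap) for every window whose gap exceeds the allowed drift."""
    alerts = []
    for window_id, y_pred, groups in windows:
        gap = selection_rate_gap(y_pred, groups)
        if gap > baseline_gap + max_increase:
            alerts.append((window_id, gap))
    return alerts


windows = [
    ("week-1", [1, 0, 1, 0], ["a", "a", "b", "b"]),  # equal selection rates
    ("week-2", [1, 1, 1, 0], ["a", "a", "b", "b"]),  # group "b" now selected less often
]
print(fairness_alerts(windows, baseline_gap=0.0))  # [('week-2', 0.5)]
```

Small windows produce noisy gaps, so in practice you would require a minimum number of predictions per group before trusting an alert.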

Tools for Bias Detection and Mitigation

Thankfully, you don’t have to build everything from scratch. Several mature toolkits can accelerate your bias‑management efforts.

- AI Fairness 360 (AIF360): IBM’s open‑source toolkit that offers a wide array of fairness metrics and mitigation algorithms[reference:24]. It’s designed for industrial usability and includes tutorials for credit scoring, healthcare, and other domains.

- Fairlearn: A Python library from Microsoft that provides metrics for assessing unfairness and algorithms for mitigating it.

- Aequitas: Developed by the University of Chicago, this toolkit helps audit datasets and models for bias and compliance with policy standards.

- Google’s What‑If Tool: An interactive visual tool that lets you explore model behavior, test fairness, and simulate “what‑if” scenarios.

- Fairness Indicators: Built on top of TensorFlow Model Analysis, this library makes it easy to compute and visualize fairness metrics.

Integrating these tools into your MLOps pipeline can make bias detection and mitigation a routine part of your workflow. For more on automating AI workflows, see our guide on Automating Workflows with Low‑Code AI Platforms.

Integrating Bias Management into Your Workflow

Bias management shouldn’t be a one‑time check; it should be woven into every stage of your AI development process. Here’s how to make it part of your culture:

- Start early: Discuss fairness requirements during project kickoff, not after the model is built.

- Automate checks: Use CI/CD pipelines to run fairness metrics alongside accuracy metrics.

- Educate your team: Provide training on bias types, fairness metrics, and mitigation techniques.

- Create accountability: Designate a person or team responsible for overseeing fairness efforts.

- Engage with stakeholders: Regularly seek feedback from the communities affected by your AI systems.
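
An automated check in CI can be a small gate that compares evaluation results with agreed limits, treating fairness regressions like accuracy regressions. The sketch below assumes an earlier pipeline step has already produced a dictionary of metrics; the metric names, numbers, and limits are hypothetical placeholders.

```python
def fairness_gate(metrics, limits):
    """Compare evaluation metrics with limits of the form ("min", x) or ("max", x).
    Returns a list of human-readable failures (an empty list means pass)."""
    failures = []
    for name, (kind, bound) in limits.items():
        value = metrics[name]
        if kind == "min" and value < bound:
            failures.append(f"{name}={value:.3f} is below the minimum {bound}")
        elif kind == "max" and value > bound:
            failures.append(f"{name}={value:.3f} is above the maximum {bound}")
    return failures


# Hypothetical numbers produced by an evaluation step earlier in the pipeline.
metrics = {"accuracy": 0.91, "demographic_parity_difference": 0.12, "disparate_impact_ratio": 0.78}
limits = {
    "accuracy": ("min", 0.85),
    "demographic_parity_difference": ("max", 0.10),
    "disparate_impact_ratio": ("min", 0.80),
}
for failure in fairness_gate(metrics, limits):
    print("FAIL:", failure)
# FAIL: demographic_parity_difference=0.120 is above the maximum 0.1
# FAIL: disparate_impact_ratio=0.780 is below the minimum 0.8
```

In a CI job, the calling script would exit with a non-zero status (for example, `sys.exit(1)`) whenever the list is non-empty, so the build fails. The limits themselves are policy choices to agree on with stakeholders.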

This proactive approach turns bias management from a reactive fix into a core competency. For a broader perspective on preparing for an AI‑driven future, read How to Prepare for an AI‑Driven Future.

Conclusion

Managing model bias is a multifaceted challenge that requires technical skill, organizational commitment, and continuous vigilance. By understanding the types of bias, measuring fairness with appropriate metrics, applying mitigation techniques, and following a structured checklist, you can significantly reduce the risk of deploying unfair AI.

Remember, the goal isn’t perfection—it’s progress. Every step you take toward fairer AI builds trust, reduces harm, and makes your systems more robust and inclusive. Start small, iterate, and keep learning. The journey to responsible AI is a marathon, not a sprint.

Further Reading

- Ethical AI Explained: Why Fairness and Bias Matter – A deeper dive into the ethical foundations of AI fairness.

- How to Use AI Responsibly (Beginner Safety Guide) – Practical tips for using AI tools safely and ethically.

- Model Cards and Responsible Model Documentation – Learn how to document your models transparently.


Are there certifications or standards (like ISO) for AI fairness that companies can aim for?

The landscape is still evolving. IEEE's P7003 project addresses algorithmic bias considerations, and ISO/IEC JTC 1/SC 42 develops AI standards, including ISO/IEC TR 24027 on bias in AI systems. In the EU, the AI Act is expected to create de facto standards. For now, following frameworks like NIST's AI Risk Management Framework and documenting your process thoroughly is the best practice.

This article makes me realize how many of our "apolitical" tech decisions actually have deep ethical implications. Eye-opening.

The adversarial debiasing explanation was the clearest I've read. It finally clicked for me.

How often should we re-audit a model in production for bias? Quarterly? Annually?

It depends on how fast your data and the world change. For a stable system, quarterly might suffice. For a dynamic system (e.g., social media trends, fast-moving markets), monthly or even continuous monitoring is better. Set up automated fairness dashboards if possible. The key is to have a trigger for re-auditing when you detect significant data drift or performance changes.

I wish there was more discussion about bias in generative AI (like DALL-E or ChatGPT). That feels like a different beast compared to classification models.

Great point, Rosalie. Generative AI bias is a massive and growing area. While the core principles (data, representation, measurement) still apply, the techniques differ. We're planning a dedicated article on that topic soon! In the meantime, check out our article on the Ethics of AI-Generated Media.

What's the best way to handle a situation where different fairness metrics contradict each other? For example, improving one metric might worsen another.

That's a classic challenge in fairness research, Zariyah. There's often no single "right" answer. The best practice is to: 1) Consult with domain experts and stakeholders about which fairness criteria matter most for your application. 2) Use multi-objective optimization techniques to explore trade-offs. 3) Be transparent about the choices you made and the trade-offs accepted in your model documentation.