TinyML & Edge AI: Running Models on Devices

TinyML and Edge AI bring artificial intelligence directly to small devices without needing constant internet connections. This comprehensive guide explains how these technologies work, why they're transforming industries from healthcare to smart homes, and what practical benefits they offer for privacy, speed, and accessibility. Learn about the hardware requirements, model optimization techniques, real-world applications, and how beginners can start experimenting with on-device AI using accessible tools and platforms. Discover why running AI models locally on devices represents one of the most significant shifts in how artificial intelligence integrates into our daily lives.

Imagine your smartphone understanding your voice commands without sending anything to the internet. Picture a fitness tracker that detects your exercise patterns while using minimal battery. Envision security cameras that recognize familiar faces without uploading video to the cloud. These aren't futuristic concepts—they're real applications of TinyML and Edge AI, technologies that bring artificial intelligence directly to the devices you use every day.

In this comprehensive guide, we'll explore what happens when AI moves from massive cloud servers to the small, everyday devices around us. We'll demystify the technical concepts, show you practical applications, and explain why this shift represents one of the most important developments in artificial intelligence today.

What Are TinyML and Edge AI?

Let's start with clear definitions. TinyML (Tiny Machine Learning) refers to machine learning models small enough, typically under 1 MB, to run on microcontrollers and other low-power devices. Microcontrollers are among the smallest computers available, often costing just a few dollars and drawing very little power.

Edge AI (Edge Artificial Intelligence) is a broader term that encompasses running AI models on devices at the "edge" of the network—closer to where data is generated and used. This includes everything from smartphones and laptops to industrial sensors and smart home devices.

Think of it this way: if traditional cloud AI is like sending mail to a central processing facility, Edge AI is like having a local post office in your neighborhood, and TinyML is like having a personal assistant who handles everything right at your desk.

Why Move AI from Cloud to Edge?

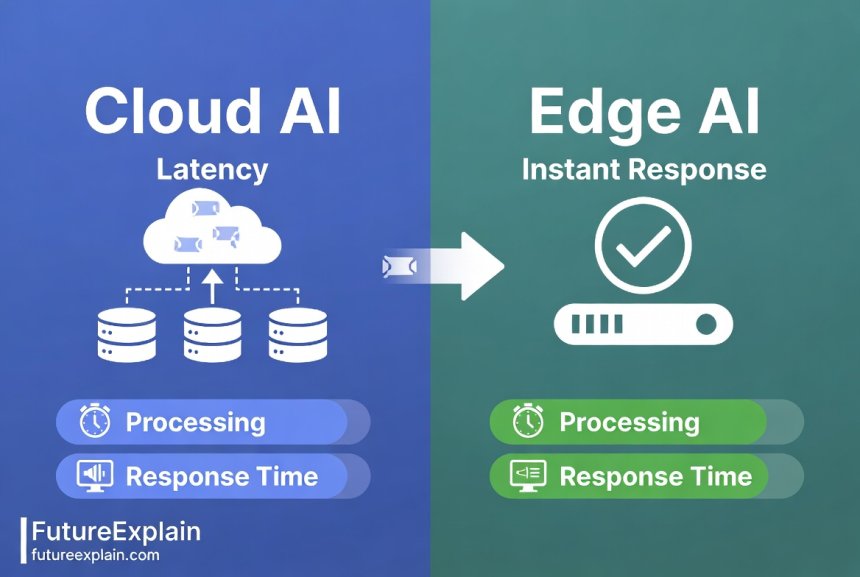

The traditional approach to AI involves sending data to powerful cloud servers, processing it there, and sending results back. This works well for many applications but has significant limitations that Edge AI addresses:

1. Privacy and Security

When AI processes data locally on your device, sensitive information never leaves your possession. Your voice recordings, health data, or video footage stays on the device rather than traveling across the internet to unknown servers. This is particularly important for healthcare devices, security systems, and personal assistants.

2. Speed and Latency

Local processing removes the network round trip to the cloud, so response time depends only on how fast the device can run the model. That matters for applications like autonomous vehicles (which need to detect obstacles in milliseconds), industrial robotics, or real-time translation during conversations.

3. Reliability Without Internet

Edge AI works anywhere, regardless of internet connectivity. Smart agricultural sensors in remote fields, underwater monitoring devices, or wearable health monitors in areas with poor connectivity can all function independently.

4. Reduced Bandwidth and Costs

By processing data locally, Edge AI dramatically reduces the amount of data that needs to be transmitted. A security camera running person-detection AI might only send alerts when something important happens, rather than streaming 24/7 video to the cloud.
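To get a feel for the scale of the saving, here is a rough back-of-the-envelope sketch. Every figure in it (the stream bitrate, the number of alerts per day, the size of one alert) is an assumption chosen for illustration, not a measurement from a real camera.

```python
# All figures below are illustrative assumptions, not measurements.
STREAM_BITRATE_BPS = 2_000_000   # assumed 2 Mbit/s continuous video stream
SECONDS_PER_DAY = 24 * 60 * 60
ALERTS_PER_DAY = 50              # assumed number of person-detection events
ALERT_SIZE_BYTES = 200_000       # assumed size of one alert with a snapshot


def daily_stream_bytes(bitrate_bps):
    """Bytes uploaded per day when streaming video around the clock."""
    return bitrate_bps * SECONDS_PER_DAY // 8


def daily_alert_bytes(alerts, alert_size_bytes):
    """Bytes uploaded per day when only alerts leave the device."""
    return alerts * alert_size_bytes


stream = daily_stream_bytes(STREAM_BITRATE_BPS)
alerts = daily_alert_bytes(ALERTS_PER_DAY, ALERT_SIZE_BYTES)

print(f"continuous streaming: {stream / 1e9:.1f} GB/day")
print(f"alerts only:          {alerts / 1e6:.1f} MB/day")
print(f"reduction factor:     {stream / alerts:.0f}x")
```

Under these assumptions the camera uploads about 21.6 GB per day when streaming and about 10 MB per day when sending alerts only. The exact ratio will vary widely with resolution, codec, and how often events occur.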

5. Energy Efficiency

Local processing can be more energy-efficient than constant data transmission, especially for battery-powered devices. Sending data wirelessly consumes significant power, while optimized local processing can extend battery life dramatically.

The Hardware Behind TinyML and Edge AI

Running AI models on small devices requires specialized hardware and optimization. Here are the key components that make this possible:

Microcontrollers: The TinyML Foundation

Microcontrollers are complete computing systems on a single chip. They typically include a processor, memory, and input/output peripherals. Popular platforms for TinyML include:

- Arduino Nano 33 BLE Sense: Includes sensors for motion, sound, and light detection

- ESP32: Affordable with built-in Wi-Fi and Bluetooth

- Raspberry Pi Pico: Versatile with programmable I/O

- Google Coral Dev Board: A Linux single-board computer rather than a microcontroller, listed here because it is designed for Edge AI and includes a dedicated Edge TPU (Tensor Processing Unit) accelerator

Neural Processing Units (NPUs)

NPUs are specialized chips designed for running neural networks efficiently. They perform the matrix calculations that AI models require much faster and with less power than general-purpose processors. Many modern smartphones now include NPUs for on-device AI features.

Field-Programmable Gate Arrays (FPGAs)

These chips can be reprogrammed after manufacturing to optimize for specific AI workloads, offering flexibility and efficiency for specialized applications.

How AI Models Get "Tiny"

Standard AI models designed for powerful servers are far too large and computationally intensive for small devices. Making them suitable for Edge AI involves several optimization techniques:

1. Model Quantization

This reduces the precision of numbers used in calculations. Instead of using 32-bit floating-point numbers (which offer high precision but require more memory and computation), quantized models might use 8-bit integers. This can reduce model size by 75% with minimal accuracy loss for many applications.
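The idea can be sketched in a few lines of plain Python. This is a minimal illustration of 8-bit affine quantization, not the implementation used by any particular framework; the function names and the example weights are made up for this sketch.

```python
# Minimal sketch of 8-bit affine quantization (illustrative, not a framework API).

def quantize(weights, num_bits=8):
    """Map floats to unsigned integer codes in [0, 2**num_bits - 1]."""
    qmax = 2 ** num_bits - 1
    w_min, w_max = min(weights), max(weights)
    # Guard against a constant tensor, where the range would be zero.
    scale = (w_max - w_min) / qmax or 1.0
    zero_point = round(-w_min / scale)
    q = [min(qmax, max(0, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float values from the integer codes."""
    return [(v - zero_point) * scale for v in q]


weights = [-0.82, -0.10, 0.0, 0.27, 0.51, 1.20]  # made-up example values
q, scale, zero_point = quantize(weights)
restored = dequantize(q, scale, zero_point)

max_error = max(abs(a - b) for a, b in zip(weights, restored))
float_bytes = len(weights) * 4  # 32-bit floats use 4 bytes each
int8_bytes = len(weights) * 1   # 8-bit integers use 1 byte each
saving = 1 - int8_bytes / float_bytes

print(f"integer codes: {q}")
print(f"max round-trip error: {max_error:.4f}")
print(f"size reduction: {saving:.0%}")
```

The 75% figure falls straight out of the storage arithmetic: one byte per weight instead of four. The accuracy cost shows up as the round-trip error, which here stays below one quantization step (`scale`).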

2. Pruning

Like trimming unnecessary branches from a tree, pruning removes connections in neural networks that contribute little to the final result. Research shows that many neural networks are significantly over-parameterized, and careful pruning can in some cases reduce size by 90% or more with little loss of accuracy. How much can be removed depends on the model and the task.
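One common variant is magnitude pruning, which zeroes the weights with the smallest absolute values. The sketch below shows that single step on a made-up weight list; the function name and the 50% sparsity target are assumptions for illustration, and real workflows usually prune gradually and fine-tune the model afterwards.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitudes."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Made-up example weights: a few large values and many near-zero ones.
weights = [0.91, -0.02, 0.05, -0.76, 0.01, 0.33, -0.04, 0.002, 0.6, -0.03]
pruned = magnitude_prune(weights, sparsity=0.5)
zeros = sum(1 for w in pruned if w == 0.0)

print(pruned)
print(f"{zeros} of {len(pruned)} weights removed")
```

Note that zeroed weights only shrink the stored model when it is saved in a sparse or compressed format, or when whole channels or neurons are removed.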

3. Knowledge Distillation

A small "student" model learns to mimic a larger, more accurate "teacher" model. The student captures the essential patterns without the complexity, resulting in a much smaller model that performs nearly as well.
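The "mimicking" is usually expressed as a loss that compares the student's output distribution with the teacher's, both softened by a temperature. The following is a minimal sketch of that soft-target loss; the logits and the temperature of 2.0 are made-up values, and in practice this term is typically combined with the ordinary loss on the true labels.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy between the softened teacher and student distributions."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(p_teacher, p_student))


teacher = [4.0, 1.0, 0.2]       # made-up teacher logits for three classes
good_student = [3.5, 1.2, 0.1]  # close to the teacher
poor_student = [0.1, 3.0, 1.0]  # disagrees with the teacher

loss_good = distillation_loss(good_student, teacher)
loss_poor = distillation_loss(poor_student, teacher)
print(f"good student loss: {loss_good:.3f}")
print(f"poor student loss: {loss_poor:.3f}")
```

The student that tracks the teacher's outputs gets the lower loss, which is the signal training uses to pull the small model toward the large one.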

4. Architecture Search

Automated techniques search for neural network architectures specifically designed for efficiency on target hardware. These models are built from the ground up to be small and fast rather than being shrunk from larger models.

5. Hardware-Aware Optimization

Models are optimized for specific hardware characteristics, taking advantage of unique features of different processors and memory architectures.

Each optimization technique involves trade-offs between size, speed, accuracy, and development complexity. Tools like TensorFlow Lite, PyTorch Mobile, and ONNX Runtime provide frameworks for converting and optimizing models for edge deployment. The choice depends on your specific constraints—a voice command model might prioritize speed, while a medical diagnosis model would prioritize accuracy even at the cost of larger size.

Real-World Applications Changing Industries

TinyML and Edge AI are already transforming numerous industries. Here are compelling examples of how these technologies work in practice:

Healthcare and Wearables

Smartwatches and fitness trackers use TinyML for:

- Activity recognition: Detecting walking, running, swimming, or specific exercises

- Fall detection: Identifying when someone has fallen and may need assistance

- Heart rate anomaly detection: Spotting irregular patterns that might indicate health issues

- Sleep stage analysis: Monitoring sleep quality without cloud processing

Smart Homes and IoT

Edge AI enables:

- Voice-controlled devices that work without internet connectivity

- Intelligent security cameras that only record when they detect people or unusual activity

- Energy optimization systems that learn household patterns to control heating/cooling efficiently

- Smart appliances that adjust operation based on usage patterns

Industrial and Agricultural Automation

In remote or challenging environments:

- Predictive maintenance sensors detect equipment failures before they happen

- Precision agriculture devices monitor crop health and soil conditions

- Quality control systems inspect products on manufacturing lines

- Environmental monitoring stations track air/water quality

Accessibility Technology

Edge AI powers assistive devices like:

- Real-time captioning for conversations without internet

- Object recognition for visually impaired users

- Gesture-controlled interfaces for users with mobility limitations

Getting Started with TinyML: A Beginner's Pathway

You don't need to be an expert to start experimenting with TinyML. Here's a practical pathway for beginners:

Step 1: Understand the Basics

Before diving into TinyML, make sure you understand the basics of artificial intelligence and how machine learning works. You don't need deep expertise, but familiarity with the core concepts will help tremendously.

Step 2: Choose Your Hardware

Start with an affordable, beginner-friendly board like:

- Arduino Nano 33 BLE Sense (~$30): Excellent for sensor-based projects

- ESP32-CAM (~$10): Adds camera capabilities

- Raspberry Pi 4 (~$35): A Linux single-board computer rather than a microcontroller; more powerful, and suited to complex Edge AI projects

Step 3: Learn Through Platforms

Several platforms make TinyML accessible:

- Edge Impulse: Web-based platform for collecting data and training models without coding

- TinyML Foundation Courses: Free educational resources

- Google's TensorFlow Lite Micro: For those with some programming experience

Step 4: Start with Pre-Trained Models

Begin by deploying existing models before training your own. Many platforms offer pre-trained models for common tasks like:

- Keyword spotting (detecting specific words in audio)

- Image classification (recognizing objects in images)

- Anomaly detection (identifying unusual patterns in sensor data)

Step 5: Build Your First Project

Start simple! A good first project might be:

- A device that turns lights on when it detects clapping

- A plant monitor that alerts you when soil is dry

- A simple voice command system for basic controls

Challenges and Limitations of Edge AI

While Edge AI offers tremendous benefits, it's important to understand its current limitations:

1. Model Complexity Constraints

The most sophisticated AI models (like large language models with billions of parameters) simply cannot run on tiny devices with current technology. Edge AI excels at focused, specialized tasks rather than general intelligence.

2. Development Complexity

Optimizing models for edge deployment requires expertise in both machine learning and embedded systems—a combination that's still relatively rare.

3. Hardware Diversity

With countless different microcontrollers and edge devices, creating models that work well across different hardware platforms can be challenging.

4. Update and Maintenance

Updating AI models on deployed devices (especially in remote locations) presents logistical challenges compared to cloud-based models that can be updated centrally.

5. Data Scarcity for Training

Edge devices often operate in unique environments, making it difficult to collect sufficient training data that represents all possible conditions the device will encounter.

The Future of TinyML and Edge AI

Several exciting developments are shaping the future of on-device AI:

1. Federated Learning

This approach allows devices to collaboratively learn a shared model while keeping all training data local. Your smartphone learns from your usage patterns and shares only model updates (not personal data) with a central server. The server combines updates from millions of devices to improve the global model.
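The server-side combining step can be sketched as a weighted average, in the style of the federated averaging algorithm. This is a simplified illustration under stated assumptions: each client sends back a full weight vector together with the number of examples it trained on, and the function name and values are made up.

```python
def federated_average(client_updates):
    """Weighted average of client weight vectors.

    client_updates: list of (weights, num_examples) pairs, where every
    weights list has the same length.
    """
    total_examples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(w[i] * n for w, n in client_updates) / total_examples
        for i in range(dim)
    ]


# Two hypothetical devices; the second trained on three times as much data.
clients = [
    ([0.10, 0.50], 100),
    ([0.30, 0.10], 300),
]
global_weights = federated_average(clients)
print(global_weights)
```

Only the weight vectors and example counts reach the server; the raw data behind them stays on each device. Production systems add further protections, such as secure aggregation, that this sketch leaves out.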

2. Hybrid Edge-Cloud Architectures

Most practical systems will use a combination of edge and cloud processing. Simple tasks happen locally for speed and privacy, while complex analysis or rare events might be sent to the cloud. This balanced approach offers the best of both worlds.
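One simple way to split the work, shown here as a sketch rather than a recommended design, is to trust the on-device model when it is confident and escalate to the cloud otherwise. The threshold value, the function name, and the example predictions are all assumptions for illustration.

```python
CONFIDENCE_THRESHOLD = 0.80  # assumed cut-off; tune per application


def route(prediction):
    """Decide where a prediction is finalized.

    prediction: (label, confidence) pair from the on-device model.
    Returns "edge" when the local result is trusted, "cloud" otherwise.
    """
    _label, confidence = prediction
    return "edge" if confidence >= CONFIDENCE_THRESHOLD else "cloud"


# Hypothetical outputs from a small on-device classifier.
predictions = [("person", 0.97), ("unknown", 0.41), ("pet", 0.80)]
decisions = [route(p) for p in predictions]
print(decisions)
```

With these inputs, only the low-confidence "unknown" case is sent onward, so the common cases stay fast and private while the rare ambiguous ones get a second opinion from a larger model.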

3. Specialized Hardware Proliferation

We're seeing more devices with AI-accelerator chips built in, from smartphones to smart speakers to automobiles. This hardware democratization will make Edge AI capabilities available in more products at lower costs.

4. Standardization and Interoperability

Industry efforts like the Open Neural Network Exchange (ONNX) format are making it easier to deploy models across different hardware platforms, reducing development complexity.

5. Energy Harvesting Integration

Future devices might power themselves from ambient light, vibration, or temperature differences, combined with ultra-low-power Edge AI processing, enabling truly autonomous, maintenance-free deployments.

Privacy Implications and Ethical Considerations

Edge AI's privacy benefits are significant, but they come with responsibilities:

Transparency About Capabilities

Companies should clearly communicate what processing happens locally versus what gets sent to the cloud. Users deserve to understand where their data is processed and stored.

Consent for Data Collection

Even when data stays on-device, users should understand what information is being collected and analyzed. Proper consent mechanisms remain important.

Security of On-Device Processing

While keeping data local enhances privacy, it also means securing the devices themselves becomes more critical. Physical access to a device with local AI capabilities could potentially allow extraction of sensitive information or models.

Bias in Deployed Models

Edge AI models must be carefully tested for bias before deployment, as updating them can be more difficult than cloud-based models. Once deployed to thousands of devices, fixing biased behavior becomes a significant challenge.

Comparing Edge AI to Other Approaches

Understanding where Edge AI fits in the broader technology landscape helps clarify its role:

Edge AI vs. Cloud AI

As we've discussed, Edge AI prioritizes speed, privacy, and offline operation, while Cloud AI offers virtually unlimited computational power and easier updates. The future lies in an intelligent combination of both approaches.

Edge AI vs. No-Code Automation

No-code platforms often leverage cloud AI services, making them easier to use but dependent on internet connectivity. Edge AI requires more technical expertise but offers greater independence and control.

Edge AI vs. Intelligent Automation

Intelligent automation typically refers to business process automation that might combine both edge and cloud components. Edge AI often serves as the sensing and immediate response layer within broader intelligent automation systems.

Practical Guide: Evaluating When to Use Edge AI

How do you decide whether your project needs Edge AI? Consider these questions:

Use Edge AI When:

- You need instant response times (under 100 milliseconds)

- Privacy concerns prevent cloud data transmission

- The device operates in areas with unreliable or no internet

- Bandwidth costs for cloud transmission would be prohibitive

- You need to maximize battery life on portable devices

- The AI task is well-defined and doesn't require general intelligence

Stick with Cloud AI When:

- You need the most powerful models available

- Rapid model updates and improvements are essential

- The application benefits from aggregated learning across many users

- You lack embedded systems development expertise

- Initial development speed is more important than long-term operational costs
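If you want to apply the two checklists above systematically, one option is to record each criterion as a yes/no answer and tally how many point each way. The sketch below is a hypothetical helper, not an established scoring method: the field names are invented for this example, and the tally is only a conversation starter, since a single hard requirement (such as no connectivity at all) can outweigh everything else.

```python
from dataclasses import dataclass


@dataclass
class ProjectNeeds:
    # Criteria that point toward Edge AI.
    needs_sub_100ms_response: bool = False
    data_must_stay_local: bool = False
    unreliable_connectivity: bool = False
    bandwidth_cost_prohibitive: bool = False
    battery_life_critical: bool = False
    task_is_narrow: bool = False
    # Criteria that point toward Cloud AI.
    needs_largest_models: bool = False
    needs_rapid_model_updates: bool = False
    benefits_from_aggregated_learning: bool = False
    lacks_embedded_expertise: bool = False
    dev_speed_over_operating_cost: bool = False


EDGE_SIGNALS = (
    "needs_sub_100ms_response", "data_must_stay_local",
    "unreliable_connectivity", "bandwidth_cost_prohibitive",
    "battery_life_critical", "task_is_narrow",
)
CLOUD_SIGNALS = (
    "needs_largest_models", "needs_rapid_model_updates",
    "benefits_from_aggregated_learning", "lacks_embedded_expertise",
    "dev_speed_over_operating_cost",
)


def tally(needs):
    """Return (edge_count, cloud_count) for the criteria answered yes."""
    edge = sum(getattr(needs, name) for name in EDGE_SIGNALS)
    cloud = sum(getattr(needs, name) for name in CLOUD_SIGNALS)
    return edge, cloud


# Hypothetical example: a wearable fall detector built by a small team.
fall_detector = ProjectNeeds(
    data_must_stay_local=True,
    unreliable_connectivity=True,
    battery_life_critical=True,
    task_is_narrow=True,
    lacks_embedded_expertise=True,
)
edge_count, cloud_count = tally(fall_detector)
print(f"edge signals: {edge_count}, cloud signals: {cloud_count}")
```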

Resources for Learning More

Ready to dive deeper into TinyML and Edge AI? Here are excellent next steps:

Online Courses and Tutorials

- Harvard's TinyML Course (free on edX)

- Edge Impulse Fundamentals (free hands-on tutorials)

- TensorFlow Lite Micro Examples (official documentation with code samples)

Development Boards and Kits

- Arduino TinyML Kit: Complete starter kit with hardware and tutorials

- Seeed Studio Grove AI Kit: Modular system for rapid prototyping

- NVIDIA Jetson Nano: More powerful for complex computer vision projects

Communities and Forums

- TinyML Foundation: Industry group with events and resources

- Edge AI and Vision Alliance: Focus on visual applications

- r/TinyML on Reddit: Community discussions and project sharing

Conclusion: The Democratization of AI

TinyML and Edge AI represent a fundamental shift in how artificial intelligence integrates with our world. By moving intelligence to the devices themselves, we're creating systems that are more private, responsive, resilient, and accessible. This isn't just a technical improvement—it's changing who can use AI and for what purposes.

From farmers in remote fields monitoring crops to elderly individuals using fall-detection devices, from factories optimizing production to households saving energy, Edge AI makes artificial intelligence practical in ways that cloud-only approaches cannot. As the technology continues to advance—with more efficient models, better hardware, and easier development tools—we'll see even more creative applications emerge.

The most exciting aspect may be how Edge AI democratizes artificial intelligence development. With affordable hardware and accessible platforms, students, hobbyists, and small businesses can now experiment with and deploy AI solutions that were previously only available to large corporations with cloud budgets. This expansion of who can build with AI will likely drive the next wave of innovation in the field.

As you consider how AI might enhance your projects or business, remember that the cloud isn't the only option—and often isn't the best one. By understanding when and how to leverage Edge AI, you can create solutions that are not just intelligent, but also practical, private, and perfectly suited to their environment.
