Ethical Guardrails for Generative Media (Images & Video)

This comprehensive guide explores ethical guardrails for AI-generated images and video, providing practical implementation strategies for developers and content creators. We cover the fundamental principles of responsible generative media, including safety filters, content moderation systems, bias detection and mitigation techniques, and deployment best practices. Learn about different types of guardrails (input filtering, output validation, real-time monitoring), implementation considerations for various use cases, and maintenance strategies for long-term safety. The article includes practical checklists, comparative analysis of different approaches, and real-world examples of both successful implementations and cautionary tales. Whether you're building generative AI applications or using existing tools, this guide helps you implement effective ethical safeguards.

Introduction: The Need for Guardrails in Generative Media

As AI-generated images and video become more sophisticated and accessible, implementing ethical guardrails has never been more critical. These guardrails are the technical and procedural safeguards that prevent harmful, biased, or inappropriate content, and they serve as essential boundaries for generative media. From deepfake detection to bias mitigation, ethical guardrails are how responsible AI principles are put into practice.

The evolution of generative AI has been nothing short of revolutionary. In just a few years, we've moved from simple pattern recognition to systems that can create photorealistic images, generate convincing video sequences, and produce artistic content indistinguishable from human creation. However, this power comes with significant responsibility. Without proper guardrails, generative media tools can amplify societal biases, generate harmful content, violate privacy, and undermine trust in digital media.

This guide provides a comprehensive, practical approach to implementing ethical guardrails for AI-generated images and video. Whether you're a developer building generative AI applications, a content creator using these tools, or a decision-maker implementing AI solutions, understanding these guardrails is essential for responsible innovation.

Understanding the Ethical Landscape

Core Ethical Principles for Generative Media

Before diving into technical implementations, it's crucial to understand the foundational ethical principles that should guide guardrail development. These principles, adapted from frameworks like the EU's Ethics Guidelines for Trustworthy AI and IEEE's Ethically Aligned Design, include:

- Beneficence and Non-maleficence: AI systems should promote well-being and prevent harm

- Autonomy and Human Oversight: Humans should maintain meaningful control over AI systems

- Justice and Fairness: Systems should avoid unfair bias and promote equitable outcomes

- Explicability: Systems should be transparent and understandable

- Privacy and Data Governance: Respect for privacy and proper data handling

- Accountability: Clear responsibility for AI system outcomes

These principles translate into practical requirements for generative media systems. For example, the principle of non-maleficence requires content filters to prevent generation of harmful imagery, while fairness demands bias detection in training data and outputs.

The Spectrum of Potential Harms

Generative media can cause harm through multiple vectors. Understanding these potential harms is the first step in designing effective guardrails:

- Direct Harms: Generation of violent, explicit, or hateful content

- Bias and Discrimination: Systematic underrepresentation or stereotyping of certain groups

- Misinformation and Manipulation: Creation of convincing but false media (deepfakes)

- Privacy Violations: Generation of content using personal data without consent

- Intellectual Property Issues: Unauthorized use of copyrighted material or styles

- Psychological Impacts: Content that causes emotional distress or reinforces harmful stereotypes

Visuals Produced by AI

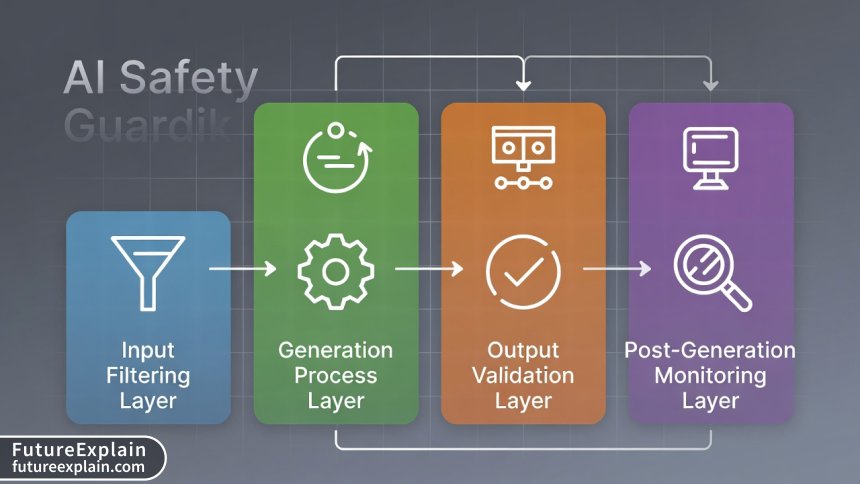

Technical Guardrails: A Layered Approach

Effective ethical guardrails employ a multi-layered defense strategy, similar to cybersecurity approaches. Each layer provides redundancy, ensuring that if one safety measure fails, others continue to provide protection.

Layer 1: Input Filtering and Validation

The first line of defense occurs at the input stage, where user prompts and parameters are evaluated before generation begins. This layer includes:

- Prompt Analysis: Natural language processing to detect harmful, biased, or inappropriate requests

- Content Policy Enforcement: Rules-based systems that block requests violating terms of service

- Context Awareness: Understanding the intended use case to apply appropriate restrictions

- User Reputation Systems: Track records that influence filtering strictness

Implementation Checklist for Input Filtering:

- ✓ Maintain an updated blocklist of prohibited terms and concepts

- ✓ Implement semantic analysis, not just keyword matching

- ✓ Consider context (medical vs. artistic use cases need different filters)

- ✓ Provide clear feedback when requests are blocked

- ✓ Allow appeals for false positives with human review

- ✓ Log all blocked requests for continuous improvement
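A minimal sketch of the blocklist, feedback, and logging items in the checklist above. The blocklist entries, the `FilterDecision` type, and the function names are hypothetical, chosen only for illustration. This covers normalized keyword matching only; the semantic analysis the checklist calls for would need a trained classifier layered on top.

```python
import re
import unicodedata
from dataclasses import dataclass

# Hypothetical placeholder entries; a real blocklist comes from your content policy.
BLOCKLIST = {"forbidden_term", "another banned phrase"}


@dataclass
class FilterDecision:
    allowed: bool
    reason: str  # surfaced to the user so a block is never silent


def normalize(text: str) -> str:
    """Lowercase, strip accents, and collapse separators to defeat trivial obfuscation."""
    decomposed = unicodedata.normalize("NFKD", text).lower()
    without_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return re.sub(r"[\s\-_.]+", " ", without_accents).strip()


def check_prompt(prompt: str, blocklist: set[str], audit_log: list[dict]) -> FilterDecision:
    """Block prompts containing a blocklisted term and record the block for later review."""
    normalized = normalize(prompt)
    for term in sorted(blocklist):
        # Word boundaries avoid flagging innocent words that merely contain a term.
        pattern = rf"\b{re.escape(normalize(term))}\b"
        if re.search(pattern, normalized):
            audit_log.append({"prompt": prompt, "matched_term": term})
            return FilterDecision(
                False, "Request blocked by content policy; you can appeal for human review."
            )
    return FilterDecision(True, "ok")
```

Calling `check_prompt("Draw a Forbidden-Term scene", BLOCKLIST, log)` returns a blocked decision and appends one entry to `log`, which can feed the appeal and continuous-improvement steps.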

Layer 2: Generation Process Controls

During the actual generation process, several technical controls can be implemented:

- Model Architecture Constraints: Technical limitations built into the model itself

- Latent Space Steering: Guidance during generation to avoid certain content regions

- Differential Privacy: Techniques to prevent memorization of training data

- Watermarking: Imperceptible markers to identify AI-generated content

Layer 3: Output Validation and Filtering

After generation, content undergoes validation to ensure it meets safety standards:

- Content Classification Models: AI systems trained to detect harmful content

- Bias Detection Algorithms: Analysis of representation and stereotypes

- Quality Thresholds: Minimum standards for coherence and appropriateness

- Human-in-the-Loop Review: Manual inspection for high-risk or ambiguous cases
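One way to combine classifier scores with human-in-the-loop review is a three-way routing rule, sketched below. The category names and both thresholds are assumptions for illustration, not recommended values; scores are assumed to be probabilities in the range 0 to 1 from whatever classification model you use.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REVIEW = "human_review"
    BLOCK = "block"


def route_output(scores: dict[str, float],
                 block_at: float = 0.9,
                 review_at: float = 0.5) -> Action:
    """Route on the worst category score: confident violations are blocked,
    ambiguous ones go to a human, and the rest pass."""
    worst = max(scores.values(), default=0.0)
    if worst >= block_at:
        return Action.BLOCK
    if worst >= review_at:
        return Action.REVIEW
    return Action.ALLOW
```

The gap between `review_at` and `block_at` controls how much traffic reaches human reviewers, so it is a direct lever on review cost.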

Layer 4: Post-Generation Monitoring

Even after content passes initial validation, ongoing monitoring is essential:

- Usage Analytics: Tracking how generated content is actually used

- Feedback Collection: Systems for users to report problematic content

- Periodic Audits: Regular reviews of system outputs and decisions

- Adaptive Learning: Systems that improve based on new information about harms

Specific Guardrails for Different Content Types

Image Generation Guardrails

Image generation systems require specialized guardrails addressing visual content's unique challenges:

Common Image Guardrail Techniques:

- NSFW Detectors: Classifiers trained to identify explicit content with high accuracy (typically 95%+ for major platforms)

- Face and Person Detection: Systems to prevent generation of real individuals without consent

- Copyrighted Style Detection: Algorithms to identify imitation of protected artistic styles

- Diversity Metrics: Analysis of generated images for representation across demographics

- Reality Distortion Checks: Detection of physically impossible or medically inaccurate imagery

Recent advances in image guardrails include multimodal systems that understand both the prompt and the generated image in context. For example, a system might allow medical illustrations in educational contexts while blocking similar imagery in entertainment contexts.
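The medical versus entertainment example can be sketched as a per-context threshold table. The context names, the `graphic_anatomy` category, and every number here are hypothetical; a real system would derive them from policy and measured classifier behavior.

```python
# Fallback threshold when a context or category has no explicit entry (assumed value).
DEFAULT_THRESHOLD = 0.5

# Hypothetical policy table: a higher threshold means the context tolerates more.
CONTEXT_THRESHOLDS = {
    "medical_education": {"graphic_anatomy": 0.95},
    "entertainment": {"graphic_anatomy": 0.40},
}


def is_allowed(context: str, category: str, score: float) -> bool:
    """Allow the image if its classifier score is below the threshold for this context."""
    threshold = CONTEXT_THRESHOLDS.get(context, {}).get(category, DEFAULT_THRESHOLD)
    return score < threshold
```

With these assumed values, an image scoring 0.7 for `graphic_anatomy` passes in `medical_education` but is stopped in `entertainment` and in any unlisted context.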

Video Generation Guardrails

Video generation introduces additional complexity with temporal dimensions and audio components:

- Temporal Consistency Checks: Ensuring content doesn't violate policies across frames

- Audio-Content Alignment: Verification that audio matches visual content appropriately

- Deepfake Detection: Specialized algorithms to identify manipulated video content

- Scene Transition Analysis: Monitoring for policy violations across scene changes

- Real-time Generation Controls: For interactive or streaming generation systems
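A temporal check can be sketched as run detection over per-frame classifier scores. The function name, threshold, and minimum run length are assumptions; the per-frame scores are assumed to come from an image classifier applied to sampled frames.

```python
def flag_segments(frame_scores: list[float],
                  threshold: float = 0.8,
                  min_run: int = 3) -> list[tuple[int, int]]:
    """Return inclusive (start, end) frame index ranges where at least `min_run`
    consecutive frames score at or above `threshold`."""
    segments = []
    start = None  # index where the current run of flagged frames began
    for i, score in enumerate(frame_scores):
        if score >= threshold:
            if start is None:
                start = i
        else:
            if start is not None and i - start >= min_run:
                segments.append((start, i - 1))
            start = None
    # Close a run that extends to the final frame.
    if start is not None and len(frame_scores) - start >= min_run:
        segments.append((start, len(frame_scores) - 1))
    return segments
```

Requiring several consecutive frames suppresses single-frame classifier noise, at the cost of missing very brief violations; setting `min_run=1` makes the check strict.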

Bias Mitigation Strategies

Bias in generative media manifests in numerous ways, from stereotypical representations to systematic exclusion. Effective bias mitigation requires a multi-pronged approach:

Training Data Curation

The foundation of unbiased generation lies in the training data:

- Diverse Data Collection: Intentional inclusion of underrepresented groups and perspectives

- Bias Auditing: Systematic analysis of training datasets for representation gaps

- Data Balancing: Techniques to ensure equitable representation in training samples

- Cultural Consultation: Involving diverse experts in dataset development
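Data balancing is often approximated with inverse-frequency sample weights, sketched below. This is one common technique, not the only option, and the group labels are assumed to exist already as dataset metadata.

```python
from collections import Counter


def balancing_weights(group_labels: list[str]) -> dict[str, float]:
    """Weight each group by total / (n_groups * group_count), so every group
    contributes the same total weight during sampling or training."""
    counts = Counter(group_labels)
    total = len(group_labels)
    n_groups = len(counts)
    return {group: total / (n_groups * count) for group, count in counts.items()}
```

For a dataset with 6, 3, and 1 samples in three groups, the weights come out so that each group's weighted total is the same (10 / 3), which up-weights the rarest group the most.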

Generation-Time Bias Controls

During the generation process, several techniques can mitigate bias:

- Prompt Engineering Guidelines: Best practices for creating unbiased prompts

- Representation Quotas: Optional systems to ensure minimum diversity in outputs

- Stereotype Detection: Real-time identification of stereotypical portrayals

- Cultural Context Awareness: Systems that understand cultural nuances and sensitivities

Post-Hoc Bias Correction

When bias is detected in outputs, correction mechanisms include:

- Alternative Generation: Creating additional options with better representation

- Content Warnings: Alerting users to potential biases in generated content

- Bias Score Reporting: Quantitative metrics on representation in outputs

- User Education: Guidance on recognizing and addressing bias in AI outputs
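Bias score reporting can start from simple representation statistics over a batch of outputs. The sketch below assumes each generated image already carries one group label, from annotators or an attribute classifier (which can itself be wrong); the `evenness` score is normalized Shannon entropy, chosen here as an illustrative metric.

```python
import math
from collections import Counter


def representation_report(labels: list[str]) -> dict:
    """Report each group's share of outputs and an evenness score between
    0 (a single group) and 1 (all observed groups equally represented)."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = sum(counts.values())
    shares = {group: count / total for group, count in counts.items()}
    entropy = -sum(p * math.log(p) for p in shares.values())
    max_entropy = math.log(len(shares)) if len(shares) > 1 else 0.0
    evenness = entropy / max_entropy if max_entropy > 0 else 0.0
    return {"shares": shares, "evenness": evenness}
```

Note that evenness only covers groups that appear at least once; a group that is entirely absent from the outputs has to be checked against an expected list separately.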

Implementation Considerations

Technical Implementation Options

Implementing guardrails requires choosing appropriate technical approaches based on your specific needs:

Implementation Decision Framework:

- Assess Risk Level: Determine potential harm severity (low, medium, high)

- Identify Use Case Constraints: Consider latency requirements, cost limitations, accuracy needs

- Evaluate Technical Capabilities: Assess available expertise, infrastructure, budget

- Choose Implementation Pattern:

  - Pre-trained solutions (fastest deployment)

  - Fine-tuned models (balance of customizability and development time)

  - Custom-built systems (maximum control but highest cost)

- Plan for Iteration: Design for continuous improvement based on feedback

Performance and Cost Considerations

Guardrails inevitably impact system performance and cost. Key considerations include:

- Latency Impact: Each safety layer adds processing time (typically 100-500ms per layer)

- Computational Cost: Running safety models requires additional GPU/CPU resources

- False Positive Rates: Overly strict filters may block legitimate content

- Maintenance Overhead: Regular updates needed for evolving threats and standards

Cost Analysis Example: A medium-scale image generation service (10,000 images/day) might incur:

- Basic filtering: $200-500/month in additional compute

- Moderate guardrails (including bias detection): $800-1,500/month

- Comprehensive safety system: $2,000-5,000+/month

- Human review for ambiguous cases: Additional $3,000-10,000/month depending on volume
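To compare tiers, it helps to convert the monthly figures above into a per-image cost. The sketch below assumes a 30-day month; the dollar ranges are the ones quoted in the example, with the open-ended "$5,000+" treated as 5,000.

```python
IMAGES_PER_DAY = 10_000
DAYS_PER_MONTH = 30  # assumption for the arithmetic

# (low, high) monthly cost in USD, taken from the example above.
TIERS = {
    "basic filtering": (200, 500),
    "moderate guardrails": (800, 1_500),
    "comprehensive safety system": (2_000, 5_000),
}


def cost_per_image_range(tier: str) -> tuple[float, float]:
    """Convert a tier's monthly cost range into a per-image cost range in USD."""
    low, high = TIERS[tier]
    images_per_month = IMAGES_PER_DAY * DAYS_PER_MONTH
    return low / images_per_month, high / images_per_month


for name in TIERS:
    low, high = cost_per_image_range(name)
    print(f"{name}: ${low:.4f} to ${high:.4f} per image")
```

At 300,000 images per month, even the comprehensive tier stays under two cents per image, which makes the human review line item the dominant cost in this example.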

Regulatory and Compliance Aspects

Current Regulatory Landscape

As of 2025, the regulatory environment for generative media includes:

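- EU AI Act: Imposes transparency obligations on generative AI, such as disclosing AI-generated or manipulated content (deepfakes), and sets obligations for general-purpose AI models; systems deployed in high-risk use cases face additional requirements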

- US Executive Orders: Guidelines for safe and trustworthy AI development

- Industry Standards: Voluntary frameworks from IEEE, ISO, and industry groups

- Sector-Specific Regulations: Additional requirements for healthcare, finance, education applications

Compliance Strategies

Effective compliance requires proactive approaches:

- Documentation Systems: Maintain records of safety measures, testing results, and incident responses

- Audit Trails: Comprehensive logging of generation requests, filtering decisions, and modifications

- Transparency Reports: Regular public disclosure of safety practices and outcomes

- Third-Party Assessments: Independent evaluations of safety systems and practices
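An audit trail is more useful to auditors if later edits are detectable. The sketch below chains each record to the previous one with a SHA-256 hash; the record fields and function names are hypothetical, and hash chaining is an added design choice here, not a regulatory requirement.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_audit_record(log: list[dict], request_id: str, decision: str, reason: str) -> dict:
    """Append a filtering decision, linked to the previous record by its hash."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "request_id": request_id,
        "decision": decision,
        "reason": reason,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    record["hash"] = _digest(record)  # hash covers every field except itself
    log.append(record)
    return record


def verify_chain(log: list[dict]) -> bool:
    """Return False if any record was altered, removed, or reordered."""
    prev = GENESIS_HASH
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev or record["hash"] != _digest(body):
            return False
        prev = record["hash"]
    return True
```

Changing any field of an earlier record makes `verify_chain` return `False`, so silent modifications to filtering decisions become visible during an audit.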

Case Studies: Lessons from Real Implementations

Success Story: Educational Platform Implementation

A major online education platform implemented guardrails for their AI illustration tool with impressive results:

- Challenge: Needed safe image generation for K-12 educational content

- Solution: Multi-layer system including age-appropriate filters, educational context recognition, and teacher oversight features

- Results: 99.7% appropriate content rate, 0.2% false positive rate, high teacher satisfaction scores

- Key Insight: Context-aware filtering outperformed generic content filters by 40% in accuracy

Cautionary Tale: Social Media Filter Failure

A viral content platform experienced significant issues with inadequate guardrails:

- Problem: Over-reliance on automated filters without human review escalation

- Consequence: Both excessive blocking of legitimate content and failure to catch sophisticated policy violations

- Resolution: Implemented a hybrid human-AI review system with continuous feedback loops

- Lesson Learned: No purely automated system can handle all edge cases effectively

Future Trends and Emerging Challenges

Technological Developments

The guardrail landscape continues to evolve with technological advances:

- Federated Learning for Safety: Collaborative model improvement without sharing sensitive data

- Explainable AI for Moderation: Systems that explain why content was filtered or allowed

- Real-time Adaptation: Guardrails that adjust based on emerging threats and patterns

- Cross-Modal Safety: Integrated systems understanding relationships between text, image, and video

Emerging Ethical Challenges

New applications create novel ethical questions:

- Synthetic Media in Journalism: Balancing illustrative value with authenticity concerns

- Therapeutic Applications: Using generative media for mental health with appropriate safeguards

- Cultural Heritage Recreation: Generating representations of lost artifacts and sites

- Personalized Content at Scale: Ethical implications of mass-customized media

Maintenance and Continuous Improvement

Effective guardrails require ongoing maintenance and improvement:

Monitoring Systems

- Performance Metrics: Regular measurement of false positive/negative rates, latency, coverage

- User Feedback Channels: Structured systems for collecting and analyzing user reports

- Threat Intelligence

- Benchmarking: Comparison against industry standards and competitor approaches
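The false positive and false negative rates mentioned above can be computed from a labeled review sample. The sketch below treats "positive" as "the filter blocked the item"; the function name and the counts in the usage note are illustrative.

```python
def filter_error_rates(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Compute error rates from confusion counts.

    tp: harmful items correctly blocked     fp: legitimate items wrongly blocked
    tn: legitimate items correctly allowed  fn: harmful items wrongly allowed
    """
    legitimate = fp + tn
    harmful = tp + fn
    return {
        "false_positive_rate": fp / legitimate if legitimate else 0.0,
        "false_negative_rate": fn / harmful if harmful else 0.0,
    }
```

For example, with 5 wrongly blocked items out of 900 legitimate ones and 10 missed items out of 100 harmful ones, the false positive rate is about 0.6% and the false negative rate is 10%. Tracking both matters because tightening a filter usually trades one for the other.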

Update Cycles

- Daily: Blocklist updates for emerging threats

- Weekly: Model performance reviews and tuning

- Monthly: Comprehensive system audits and reporting

- Quarterly: Strategy reviews and major updates

- Annually: Complete system reassessment and roadmap planning

Getting Started: Practical First Steps

For organizations beginning their guardrail implementation journey:

- Conduct Risk Assessment: Identify your specific risks based on use cases and audience

- Start with Pre-built Solutions: Leverage existing APIs and services before building custom systems

- Implement Basic Filtering: Deploy fundamental content policy enforcement

- Establish Review Processes: Create human oversight for edge cases and appeals

- Build Measurement Systems: Track effectiveness and identify improvement areas

- Iterate and Expand: Gradually add more sophisticated guardrails based on needs and capabilities

Conclusion: Balancing Innovation and Responsibility

Ethical guardrails for generative media represent a necessary evolution in AI development—one that balances tremendous creative potential with essential safeguards. As these technologies become more integrated into our digital lives, the implementation of effective, thoughtful guardrails will determine whether generative media empowers positive change or amplifies existing harms.

The journey toward responsible generative AI is continuous, requiring ongoing attention to emerging challenges, evolving standards, and stakeholder needs. By implementing layered guardrail systems, maintaining human oversight, and committing to continuous improvement, we can harness the power of generative media while protecting against its risks.

Cultural context is so important. Content that's acceptable in one culture might be offensive in another. Global platforms really struggle with this balance.

As someone working in mental health tech, I appreciate the mention of therapeutic applications. We need specialized guardrails for sensitive use cases.

The false positive problem is real. We've had legitimate educational content blocked by overzealous filters. The appeal process is critical.

I wish there was more open-source tooling for this. Most good safety systems are proprietary and expensive. The small developer community needs better options.

Excellent point! We're tracking several promising open-source projects. Hugging Face's safety tools and some academic releases are becoming more robust. We'll cover this in an upcoming tools review.

The regulatory compliance section saved us hours of research. We're expanding to European markets and needed to understand the AI Act requirements.

What about audio generation? The article focuses on images/video but audio AI (voice cloning, music generation) has its own ethical challenges.