Robotics and AI: How Perception Meets Action

This comprehensive guide explains how artificial intelligence transforms robotics from simple automated machines into intelligent systems that can perceive, understand, and act in complex environments. We break down the three key stages of AI robotics: perception (how robots see and sense the world), decision-making (how AI processes this information), and action (how robots physically interact with their environment). You'll learn about computer vision, sensor fusion, machine learning algorithms for control, and real-world applications from manufacturing to healthcare. The article also covers practical considerations for businesses, safety implications, and future trends in intelligent robotics—all explained in simple, beginner-friendly language without technical jargon.

When we imagine robots, we often picture machines from science fiction—humanoid assistants that see, think, and act with human-like intelligence. While today's real-world robots aren't quite at that level yet, artificial intelligence is transforming robotics in remarkable ways. The most significant advancement is how AI enables robots to move beyond simple, repetitive tasks and adapt to complex, changing environments. This transformation happens through what engineers call the "perception-action cycle"—the continuous loop of sensing, understanding, deciding, and acting that makes intelligent robotics possible.

In this comprehensive guide, we'll explore how AI bridges the gap between robotic perception and action. We'll start with the basics of how robots perceive their environment, then examine how AI processes this information to make decisions, and finally see how these decisions translate into physical actions. Whether you're a business owner considering robotics solutions, a student exploring career paths, or simply curious about future technology, this article will give you a clear understanding of one of the most exciting intersections of AI and robotics.

The Foundation: What Makes Robotics Different with AI?

Traditional industrial robots, which have been used in factories since the 1960s, operate on fixed programming. They perform the exact same motions repeatedly with incredible precision but lack adaptability. If something changes in their environment—like a part being slightly out of position—they'll continue their programmed motion and likely fail or cause damage. These robots are blind to their surroundings and cannot adjust to unexpected situations.

AI-enhanced robotics changes this fundamental limitation. By integrating artificial intelligence, robots gain the ability to:

- Perceive and understand their environment through sensors

- Make decisions based on what they perceive

- Adapt their actions to changing conditions

- Learn from experience and improve over time

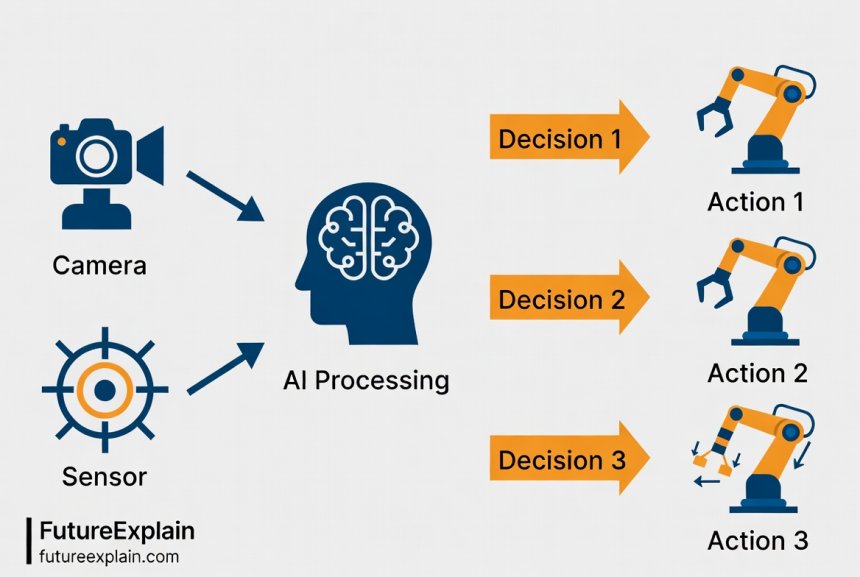

This shift represents what experts call "cognitive robotics"—machines that don't just follow instructions but can interpret situations and determine appropriate actions. The key innovation is the seamless integration of perception systems (eyes and ears), processing systems (brain), and actuation systems (arms and legs).

Stage 1: Perception—How Robots See and Sense the World

Perception is the foundation of intelligent robotics. Without accurate perception, robots cannot understand their environment, and thus cannot make appropriate decisions. Modern AI-powered robots use multiple sensing technologies that work together—a concept called "sensor fusion."

Computer Vision: The Robot's Eyes

Computer vision is one of the most important perception technologies in robotics. Through cameras and sophisticated AI algorithms, robots can:

- Identify objects and their positions

- Read text and symbols

- Recognize people and gestures

- Detect obstacles and navigate around them

- Assess quality and detect defects

Modern computer vision systems often use deep learning models trained on millions of images. For example, a robot in an Amazon warehouse uses computer vision to identify and locate thousands of different products, while a surgical robot uses it to distinguish between different types of tissue. The advancement from traditional computer vision (which relied on manually programmed features) to deep learning-based vision represents a major leap in robotic capabilities.

Other Sensing Technologies

Beyond cameras, robots use various sensors to perceive their environment:

- LiDAR (Light Detection and Ranging): Uses laser pulses to create precise 3D maps of surroundings. Essential for autonomous vehicles and drones.

- Radar: Especially useful for detecting objects at distance and in poor weather conditions.

- Ultrasonic sensors: Measure distance using sound waves, commonly used for obstacle detection.

- Force/torque sensors: Measure forces applied during contact, crucial for delicate manipulation tasks.

- Tactile sensors: Provide touch feedback, allowing robots to handle fragile objects appropriately.

The combination of these sensors gives robots a comprehensive understanding of their environment. For instance, an autonomous mobile robot in a factory might use LiDAR for navigation, cameras for identifying specific objects, and force sensors to ensure it doesn't crush items it picks up.
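To make the idea of sensor fusion concrete, here is a minimal sketch of one simple technique, inverse-variance weighting, which trusts each sensor in proportion to how precise it is. The sensor readings and variances below are made-up values for illustration, and real robots typically use more elaborate methods (such as Kalman filters) built on the same principle.

```python
def fuse_measurements(readings):
    """Fuse independent (value, variance) readings by inverse-variance weighting."""
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, readings)) / total
    fused_variance = 1.0 / total  # never larger than the best single sensor
    return fused, fused_variance


# Hypothetical readings of the same obstacle, in metres: (value, variance)
lidar = (2.00, 0.01)       # precise sensor
ultrasonic = (2.30, 0.09)  # noisier sensor
distance, variance = fuse_measurements([lidar, ultrasonic])
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f}")
```

The fused estimate lands much closer to the LiDAR reading because LiDAR is assumed to be the more precise sensor, and the combined variance is lower than either sensor's alone.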

Stage 2: Decision-Making—The AI Brain Processes Information

Once a robot has gathered sensory information, the next challenge is processing this data to make decisions. This is where artificial intelligence truly shines. The perception data—whether visual, distance measurements, or force readings—feeds into AI systems that must interpret what it means and decide what action to take.

From Raw Data to Understanding

The first step in decision-making is converting raw sensor data into meaningful information. A camera captures pixels, but the AI system must recognize these pixels as representing specific objects with particular properties. This process involves several AI techniques:

- Object detection and recognition: Identifying what objects are present and where they're located

- Semantic segmentation: Classifying each pixel in an image (road, vehicle, pedestrian, etc.)

- 3D reconstruction: Building three-dimensional understanding from 2D images or sensor data

- Sensor fusion: Combining data from multiple sensors into a coherent model of the environment

Advanced AI models can now perform these tasks with remarkable accuracy. For example, NVIDIA's Isaac robotics platform includes perception AI that can identify hundreds of different objects in real time, even when they are partially obscured or poorly lit.
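As a rough illustration of the semantic segmentation step listed above, the sketch below assumes a model has already produced a score for every class at every pixel, and shows only the final labeling step: each pixel takes the class with the highest score. The class names and scores are invented for the example; a real system would get the scores from a trained neural network.

```python
CLASSES = ["road", "vehicle", "pedestrian"]  # hypothetical label set


def segment(score_map):
    """Label each pixel with the class that has the highest score."""
    return [
        [CLASSES[max(range(len(CLASSES)), key=lambda c: pixel[c])] for pixel in row]
        for row in score_map
    ]


# A 2x2 "image": each pixel holds made-up scores for (road, vehicle, pedestrian)
scores = [
    [(0.90, 0.05, 0.05), (0.20, 0.70, 0.10)],
    [(0.80, 0.10, 0.10), (0.10, 0.20, 0.70)],
]
labels = segment(scores)
for row in labels:
    print(row)
```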

Planning and Decision Algorithms

Once the robot understands its environment, it must decide what to do. This involves several types of AI algorithms:

- Path planning: Determining the best route from point A to point B while avoiding obstacles

- Task planning: Breaking down complex tasks into sequences of simpler actions

- Reinforcement learning: Learning optimal behaviors through trial and error

- Predictive models: Anticipating how the environment or objects might change

These decision-making systems must balance multiple factors: efficiency, safety, energy consumption, and task requirements. They often work in hierarchical structures, with high-level planners setting goals and low-level controllers determining precise motions.
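Path planning is the easiest of these to show in a few lines. The sketch below uses breadth-first search on a small grid map, which is one basic way to find a shortest obstacle-free route; production planners usually rely on more sophisticated algorithms such as A*. The grid layout is a made-up example.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; returns a shortest path or None."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back from goal to start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None  # goal is unreachable


# 0 = free floor, 1 = obstacle (a hypothetical warehouse aisle)
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = plan_path(grid, (0, 0), (2, 0))
print(path)
```

Here the robot has to travel around the wall of obstacles in the middle row, so the route runs along the top row, down the right-hand column, and back along the bottom row.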

Stage 3: Action—How Robots Physically Interact with the World

The final stage of the perception-action cycle is physical action. Once a decision is made, the robot must execute it accurately and safely in the real world. This involves controlling motors, actuators, and manipulators to achieve desired movements and interactions.

Motion Control and Execution

Robotic action ranges from simple movements (like a robotic arm picking up an object) to complex coordinated motions (like a humanoid robot walking while carrying something). Key aspects include:

- Precision control: Moving to exact positions with millimeter or even micron accuracy

- Force control: Applying just the right amount of force—enough to grip an object but not crush it

- Compliant control: Allowing some flexibility in movement to accommodate uncertainties

- Coordinated control: Managing multiple joints or motors working together

Modern control systems often use AI to adapt in real time. For instance, if a robot encounters unexpected resistance while inserting a part, AI control algorithms can adjust the force and trajectory rather than continuing blindly with the planned motion.
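The simplest version of force control is a feedback loop that compares the sensed force with a target and nudges the position accordingly. The sketch below is a toy model, not a real controller: it assumes the surface behaves like a spring (force equals stiffness times how far the robot has pressed in), and the gain and stiffness values are illustrative.

```python
def run_force_control(target_force, stiffness, gain=0.0005, steps=50):
    """Press against a spring-like surface until the sensed force nears the target.

    Assumed contact model: force (N) = stiffness (N/m) * depth (m).
    """
    depth = 0.0  # how far the tool has pressed into the surface, in metres
    for _ in range(steps):
        sensed_force = stiffness * depth   # what a force sensor would report
        error = target_force - sensed_force
        depth += gain * error              # press further if too soft, back off if too hard
    return stiffness * depth


final_force = run_force_control(target_force=5.0, stiffness=1000.0)
print(f"final contact force: {final_force:.3f} N")
```

With these numbers the error halves on every step, so the force settles at the 5 N target. If the gain is too high for the surface stiffness, the same loop overshoots and oscillates, which is one reason real force controllers need careful tuning.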

Adaptation and Learning in Action

One of the most powerful aspects of AI in robotics is the ability to learn and improve actions over time. Through techniques like reinforcement learning and imitation learning, robots can:

- Refine their movements based on what works best

- Adapt to wear and tear in their own mechanisms

- Learn new tasks by observing humans

- Compensate for changes in the objects they handle

For example, a robot learning to pour liquid might spill at first but gradually learn the right angle and speed for different containers. This learning capability is what transforms robots from tools that need constant reprogramming into partners that can adapt to new situations.
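The pouring example can be sketched as a tiny trial-and-error learner. The code below uses an epsilon-greedy strategy, a basic reinforcement learning idea: mostly repeat the best-known action, but occasionally try something else. The "spill" function is a stand-in for the real world and is entirely hypothetical.

```python
import random


def learn_pour_angle(angles, trials=200, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error: return the angle with the lowest estimated spill."""
    rng = random.Random(seed)

    def spill(angle):
        # Hypothetical environment: least spill near 45 degrees, plus small noise.
        return abs(angle - 45) / 45 + rng.uniform(-0.05, 0.05)

    # Try every angle once so each one has an initial estimate.
    estimate = {a: spill(a) for a in angles}
    count = {a: 1 for a in angles}
    for _ in range(trials):
        if rng.random() < epsilon:
            angle = rng.choice(angles)             # explore a random angle
        else:
            angle = min(angles, key=estimate.get)  # exploit the best angle so far
        count[angle] += 1
        # Update the running average of observed spill for this angle.
        estimate[angle] += (spill(angle) - estimate[angle]) / count[angle]
    return min(angles, key=estimate.get)


best = learn_pour_angle([30, 45, 60])
print("learned pour angle:", best)
```

The robot is never told that 45 degrees is best; it discovers this from the outcomes of its own attempts.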

Real-World Applications: Perception-Action Integration in Practice

To understand how perception meets action in real robotics, let's examine several practical applications across different industries.

Manufacturing and Logistics

Modern factories and warehouses showcase some of the most advanced perception-action integration. Companies such as Amazon and FedEx, along with automotive manufacturers, use AI-powered robots for:

- Bin picking: Robots that can identify and pick specific items from mixed bins—a task that requires sophisticated perception to recognize objects in cluttered environments and delicate manipulation to grasp them properly.

- Assembly verification: Robots that visually inspect assembled products while simultaneously making adjustments if components are misaligned.

- Autonomous mobile robots (AMRs): Vehicles that navigate busy warehouses, perceiving people and obstacles while planning optimal routes to transport goods.

These applications demonstrate the complete perception-action cycle: sensors gather data about the environment, AI systems interpret this data and make decisions, and robotic systems execute the required physical actions.

Healthcare and Surgery

Medical robotics represents another frontier where precise perception-action integration is critical. Surgical robots like the da Vinci system enhance a surgeon's capabilities with:

- 3D high-definition vision systems that provide superior visualization

- Tremor filtration and motion scaling for precise control

- Force feedback that allows surgeons to "feel" tissue resistance (a feature of the newest generation of the system; earlier models relied mainly on visual cues)

- AI assistance that can highlight anatomical structures or suggest optimal incision paths

Research systems go even further, with some experimental surgical robots capable of performing certain suturing or cutting motions autonomously under surgeon supervision. These systems must perceive tissue properties, plan appropriate actions, and execute them with sub-millimeter precision—all while adapting to physiological movements like breathing.
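Tremor filtration and motion scaling, mentioned in the list above, can be illustrated with a few lines of code. This is a simplified sketch and not how any particular surgical system is implemented: it smooths the hand position with an exponential moving average and then shrinks the motion by a fixed factor. The scale, smoothing value, and tremor pattern are assumptions chosen for the example.

```python
def scale_and_filter(hand_positions, scale=0.2, smoothing=0.1):
    """Turn hand positions (mm) into smoothed, scaled-down instrument positions (mm)."""
    filtered = hand_positions[0]
    output = []
    for position in hand_positions:
        # Exponential moving average: a small `smoothing` value suppresses fast jitter.
        filtered += smoothing * (position - filtered)
        output.append(scale * filtered)
    return output


# A steady 10 mm hand position with a made-up +/-1 mm tremor on top
hand = [10 + (1 if i % 2 == 0 else -1) for i in range(20)]
tool = scale_and_filter(hand)
print(f"hand jitter between samples: {abs(hand[-1] - hand[-2])} mm")
print(f"tool jitter between samples: {abs(tool[-1] - tool[-2]):.3f} mm")
```

The hand jumps 2 mm between samples, while the instrument tip moves only a small fraction of a millimetre, because the filter removes most of the rapid back-and-forth motion and the scaling shrinks what remains.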

Agriculture and Food Production

Agricultural robotics faces particularly challenging perception-action requirements due to unstructured outdoor environments. Yet AI-powered robots are now:

- Harvesting fruits and vegetables by identifying ripeness through computer vision and gently picking without damage

- Weeding crops by distinguishing between crops and weeds, then precisely removing weeds

- Monitoring crop health through multispectral imaging and applying treatments only where needed

These applications require robust perception systems that work in varying lighting and weather conditions, decision algorithms that account for plant growth patterns, and delicate manipulation systems that handle living organisms gently.

The Technical Architecture: How Perception and Action Systems Connect

Understanding the technical architecture behind perception-action integration helps explain why this has only recently become practical. Modern robotic systems typically follow a layered architecture:

Hardware Layer: Sensors and Actuators

At the base level are the physical components: cameras, LiDAR units, motors, grippers, and other hardware. These have improved dramatically in recent years—cameras have higher resolution and faster frame rates, sensors have become smaller and more accurate, and actuators have become more powerful and efficient.

Middleware: ROS and Communication Frameworks

The Robot Operating System (ROS), which despite its name is an open-source middleware framework rather than an operating system, has become a de facto standard for connecting different components. It allows perception modules, decision algorithms, and control systems to communicate efficiently, even if they're running on different computers or processors within the robot.

Perception Software Stack

This layer includes all the algorithms for processing sensor data: computer vision models, sensor fusion algorithms, localization and mapping systems. These often run on specialized hardware like GPUs or AI accelerators to handle the computational load.

Decision and Planning Layer

Here, higher-level AI systems interpret the processed perception data, maintain a world model, and plan actions. This includes task planners, path planners, and behavior controllers.

Control Layer

The lowest software level directly controls hardware—sending precise commands to motors, reading encoder feedback, and ensuring safety limits aren't exceeded.

This layered architecture allows different components to be developed independently while ensuring they work together seamlessly. It also enables robots to be more modular and upgradable—new perception algorithms can be added without redesigning the entire system.
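The layers described above can be sketched as separate functions that pass data upward and commands downward. The example below is a deliberately tiny simulation: a robot on a straight line with one range sensor and one obstacle. All function names, distances, and speeds are hypothetical.

```python
def perceive(raw_range_m):
    """Perception layer: turn a raw range reading into a simple world model."""
    return {"obstacle_distance": raw_range_m}


def plan(world, safe_distance=0.5):
    """Planning layer: choose a high-level action from the world model."""
    return "stop" if world["obstacle_distance"] <= safe_distance else "forward"


def control(action, speed=0.25):
    """Control layer: translate the action into a velocity command (m/s)."""
    return speed if action == "forward" else 0.0


def run(robot_position=0.0, obstacle_position=2.0, dt=1.0, max_steps=20):
    """Simulated hardware layer: drive toward the obstacle until told to stop."""
    for _ in range(max_steps):
        raw = obstacle_position - robot_position  # sensor reading
        velocity = control(plan(perceive(raw)))
        if velocity == 0.0:
            break
        robot_position += velocity * dt           # effect of the actuator
    return robot_position


final_position = run()
print("robot stopped at", final_position, "m")
```

Because each layer only depends on the output of the one next to it, the `perceive` function could be swapped for a more capable one without touching `plan` or `control`, which is the modularity benefit the layered design is meant to provide.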

Challenges and Limitations in Current Systems

Despite impressive advances, significant challenges remain in creating robots that seamlessly integrate perception and action:

Perception Limitations

Robots still struggle with certain perception tasks that humans handle effortlessly:

- Understanding transparent or reflective surfaces (like glass doors)

- Recognizing objects in extreme lighting conditions

- Interpreting ambiguous situations or partial information

- Distinguishing between similar objects in cluttered environments

Decision-Making Complexity

The "curse of dimensionality" makes comprehensive decision-making challenging—as environments become more complex, the number of possible situations grows exponentially, making it impossible to pre-program responses to everything.
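A quick calculation shows how fast the number of situations grows. The sketch below assumes, purely for illustration, that each thing the robot tracks (an object position, a joint angle, a lighting level) can take 10 distinct values.

```python
def count_situations(values_per_variable, num_variables):
    """Number of distinct combinations when every variable varies independently."""
    return values_per_variable ** num_variables


for num_variables in (2, 6, 12):
    total = count_situations(10, num_variables)
    print(f"{num_variables} variables -> {total:,} possible situations")
```

Going from 2 variables to 12 takes the count from one hundred to one trillion, which is why learned policies that generalize are preferred over hand-written rules for every case.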

Action Precision and Adaptability

Physical interaction with the real world introduces complexities that simulation can't fully capture:

- Variations in friction and material properties

- Wear and tear on mechanical components

- Unmodeled dynamics (like flexible objects or fluids)

- Need for real-time adaptation to unexpected contact

Integration Challenges

Perhaps the biggest challenge is seamless integration across the entire perception-action cycle. Latency (delay) between perception, decision, and action can cause instability, especially in dynamic environments. Ensuring all components work reliably together remains an engineering challenge.
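The cost of latency is easy to estimate with basic arithmetic: while the robot is still processing what it saw, it keeps moving. The sketch below uses made-up numbers for a mobile robot and assumes constant-deceleration braking.

```python
def blind_distance(speed_m_s, latency_s):
    """Distance covered between sensing something and starting to react."""
    return speed_m_s * latency_s


def stopping_distance(speed_m_s, latency_s, deceleration_m_s2):
    """Blind distance plus braking distance v^2 / (2a) at constant deceleration."""
    braking = speed_m_s ** 2 / (2 * deceleration_m_s2)
    return blind_distance(speed_m_s, latency_s) + braking


# Hypothetical robot: 2 m/s travel speed, 100 ms total latency, 4 m/s^2 braking
print(f"blind distance:    {blind_distance(2.0, 0.1):.2f} m")
print(f"stopping distance: {stopping_distance(2.0, 0.1, 4.0):.2f} m")
```

In this example the robot covers about 0.2 m before it even begins to react, and needs roughly 0.7 m in total to stop. Halving the latency shrinks the first figure directly, which is part of the motivation for processing data on the robot itself.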

Safety Considerations in AI-Powered Robotics

As robots become more autonomous and capable, safety becomes increasingly important. The integration of perception and action introduces both new safety challenges and new safety opportunities.

Inherent Safety Through Better Perception

Although autonomy is often seen as a risk, AI-powered perception makes robots safer in many ways. Traditional industrial robots were fenced off in cages because they could not detect people. Modern robots with advanced perception can:

- Detect humans in their workspace and slow down or stop

- Recognize unsafe conditions (like objects in dangerous positions)

- Monitor their own status and predict potential failures

- Adapt actions to avoid causing damage
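The first item in the list, slowing down or stopping when a person is nearby, can be sketched as a simple rule that scales the robot's speed limit with the measured distance to the nearest human. The distances and speeds below are illustrative assumptions only; real thresholds come from a formal risk assessment and the applicable safety standards.

```python
def allowed_speed(human_distance_m, max_speed=1.0, stop_distance=0.5, slow_distance=2.0):
    """Scale the speed limit (m/s) linearly between a stop zone and a full-speed zone."""
    if human_distance_m <= stop_distance:
        return 0.0        # person is too close: stop
    if human_distance_m >= slow_distance:
        return max_speed  # nobody nearby: full speed
    fraction = (human_distance_m - stop_distance) / (slow_distance - stop_distance)
    return max_speed * fraction


for distance in (3.0, 1.25, 0.3):
    print(f"human at {distance} m -> speed limit {allowed_speed(distance):.2f} m/s")
```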

New Safety Challenges

However, autonomous decision-making introduces new safety considerations:

- How to ensure AI decisions are safe in unpredictable situations

- How to balance safety with efficiency (overly cautious robots may not be useful)

- How to verify and validate AI systems that learn and change over time

- How to handle edge cases that weren't encountered during training

Safety Standards and Best Practices

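Standards such as ISO 10218 (safety of industrial robots) and ISO/TS 15066 (collaborative robots) already apply to conventional installations, and the robotics industry is developing further standards for AI-powered robots. Key principles include: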

- Risk assessment: Systematic evaluation of potential hazards

- Functional safety: Designing systems that remain safe even if components fail

- Transparency and explainability: Making AI decisions understandable to human operators

- Human oversight: Keeping humans in the loop for critical decisions

These safety considerations are particularly important as robots move from controlled industrial settings into more dynamic environments like homes, hospitals, and public spaces.

Business Implications: What This Means for Companies

The integration of perception and action through AI has significant implications for businesses considering robotics adoption:

Cost-Benefit Analysis

AI-powered robots typically have higher upfront costs than traditional automation but offer greater flexibility and adaptability. The business case depends on:

- Variability of tasks: Higher variability favors AI solutions

- Change frequency: Environments that change often benefit from adaptable robots

- Task complexity: Complex manipulation or decision tasks require AI capabilities

- Integration requirements: How easily robots can be integrated with existing systems

Implementation Considerations

Businesses implementing AI robotics should consider:

- Skill requirements: AI robotics requires different skills than traditional automation (more software/AI expertise)

- Data requirements: AI systems need training data, which may require initial setup and collection

- Maintenance: AI systems may need ongoing tuning and updates as conditions change

- Scalability: How well solutions scale from pilot projects to full deployment

Return on Investment

The ROI for AI-powered robotics comes from several areas:

- Increased flexibility: Handling product variations without reprogramming

- Improved quality: Better perception leads to fewer errors

- Reduced downtime: Adaptive systems can handle some anomalies without stopping

- New capabilities: Tasks previously impossible to automate become feasible

Future Trends: Where Perception-Action Integration Is Heading

The field of AI robotics continues to advance rapidly. Several trends are shaping the future of how perception meets action in robotics:

Multimodal Perception Integration

Future robots will integrate even more sensing modalities—combining vision, touch, sound, and even smell for richer environmental understanding. Research in multimodal AI is driving this trend, enabling robots to build more comprehensive world models.

Embodied AI and Learning in the Real World

Rather than training AI entirely in simulation, there's growing emphasis on "embodied AI": systems that learn through actual physical interaction with the world. This approach lets AI learn the complexities of real-world physics and interactions that simulators can only approximate.

Cloud Robotics and Shared Learning

Cloud connectivity allows robots to share what they learn. If one robot encounters a new situation or learns a better way to perform a task, that knowledge can be shared with other robots. This concept of "collective robot learning" could dramatically accelerate capability development.

Human-Robot Collaboration

Advanced perception-action integration enables closer human-robot collaboration. Robots that can better perceive human intentions and adapt their actions accordingly will work alongside humans more effectively. This aligns with trends in human-AI collaboration across various domains.

Edge AI and Real-Time Processing

As AI processors become more powerful and efficient, more perception and decision-making can happen directly on the robot ("at the edge") rather than relying on cloud connectivity. This reduces latency and improves reliability, especially for time-critical actions.

Getting Started with AI Robotics: Practical First Steps

For those interested in exploring AI robotics, whether as a business investment or personal learning, here are practical starting points:

Educational Pathways

Several online platforms offer courses in AI robotics:

- Coursera's "Robotics Specialization" from University of Pennsylvania

- edX's "Robotics MicroMasters" from University of Pennsylvania

- Udacity's "Robotics Software Engineer" nanodegree

- ROS (Robot Operating System) tutorials and documentation

Beginner-Friendly Platforms

Several platforms make AI robotics accessible to beginners:

- LEGO Mindstorms and VEX Robotics: Educational platforms with visual programming

- Arduino and Raspberry Pi: Low-cost hardware for building simple robots

- Google's Coral: AI accelerator boards that can be added to robotics projects

- Microsoft's Project Bonsai: Platform for building AI-powered control systems

Business Evaluation Tools

For businesses considering AI robotics:

- Start with a specific, well-defined problem rather than general automation

- Consider partnering with robotics integrators who specialize in AI solutions

- Look for modular solutions that can start small and expand

- Plan for the data collection and training phase in implementation timelines

Conclusion: The Transformative Power of Integrated Perception and Action

The integration of perception and action through artificial intelligence represents one of the most significant advances in robotics since the first industrial robots were introduced. By enabling robots to see, understand, decide, and act in coordinated ways, AI transforms them from blind machines following fixed programs into adaptive systems that can handle complexity and change.

This capability is already creating value across industries—from manufacturing and logistics to healthcare and agriculture. As the technology continues to advance, we can expect robots to become even more capable, working alongside humans in increasingly sophisticated ways. The key to successful adoption lies in understanding both the capabilities and limitations of current systems, approaching implementation with clear goals and realistic expectations, and prioritizing safety and reliability alongside functionality.

The future of robotics is not about creating machines that replace humans, but about developing systems that augment human capabilities and handle tasks that are dangerous, difficult, or dull. The seamless integration of perception and action through AI is what makes this vision increasingly practical and powerful.
