Chain-of-Thought and Reasoning Techniques in LLMs

Chain-of-Thought (CoT) reasoning is a breakthrough technique that helps large language models think step by step, dramatically improving their problem-solving abilities. This comprehensive guide explains how CoT works in simple terms, showing why making AI 'show its work' leads to better answers. You'll learn practical CoT prompting techniques, from basic step-by-step reasoning to advanced methods like Tree of Thoughts and Self-Consistency. We compare different reasoning approaches with real examples, provide troubleshooting tips for when techniques fail, and explore business applications where reasoning AI creates real value. Whether you're new to AI or looking to improve your prompt engineering skills, this guide makes advanced reasoning techniques accessible and practical.

Chain-of-Thought and Reasoning Techniques in LLMs: Making AI Think Step by Step

Have you ever asked an AI a complex math problem and received a wrong answer, only to realize it skipped several logical steps? Or wondered why sometimes AI gives brilliant, nuanced responses while other times it seems to miss the obvious? The secret often lies in how we ask AI to think—specifically, whether we encourage it to reason step by step. This is where Chain-of-Thought (CoT) and reasoning techniques transform AI from a pattern-matching tool into something that genuinely appears to reason.

Chain-of-Thought prompting is more than a technical trick: it changes how we interact with large language models (LLMs). By asking AI to "think aloud" or "show your work," we tap into a capacity for multi-step reasoning that often stays hidden with standard prompting. Reported gains on complex reasoning tasks can be large, on the order of 30-60% in some evaluations, though results vary considerably with the model and the task.

In this comprehensive guide, we'll demystify Chain-of-Thought reasoning and explore the growing family of reasoning techniques that are revolutionizing how we use AI. Whether you're a business professional looking to get better results from AI tools, a student learning prompt engineering, or simply curious about how AI "thinks," you'll discover practical techniques you can use immediately.

What Is Chain-of-Thought Reasoning? (And Why It Matters)

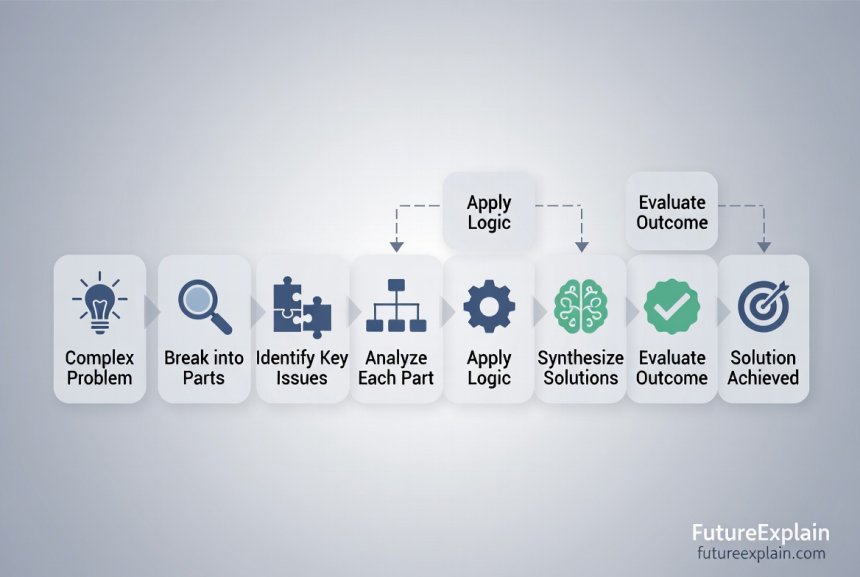

Chain-of-Thought (CoT) prompting is a technique where we ask a large language model to break down its reasoning into intermediate steps before arriving at a final answer. Instead of jumping directly to a conclusion, the AI is encouraged to think through the problem methodically, much like a human would when solving a complex puzzle.

The term "Chain-of-Thought" was popularized in a 2022 research paper by Google researchers who discovered that when LLMs are prompted to generate a series of reasoning steps, their accuracy on mathematical, commonsense, and symbolic reasoning tasks improves dramatically. This wasn't just about getting the right answer—it was about making the AI's thinking process transparent and verifiable.

Think of it this way: When you learned math in school, your teacher didn't just want the final answer—they wanted to see your work. This served two purposes: it helped you catch mistakes in your reasoning, and it allowed the teacher to understand how you were thinking. Chain-of-Thought does exactly the same for AI. By making the model articulate its reasoning steps, we get:

- Better accuracy: The model is less likely to make intuitive leaps that bypass important logic

- Transparency: We can see where the reasoning succeeds or fails

- Debugging capability: When answers are wrong, we can identify exactly which step went astray

- Educational value: The reasoning process itself becomes a learning tool

The Psychology Behind Why CoT Works

You might wonder: Why does asking an AI to "think step by step" actually change its output? The answer lies in how these models generate text. LLMs are fundamentally prediction engines: they predict the next token based on everything that came before it, including their own earlier output. When we ask for a direct answer, the model has to commit to the most plausible-sounding answer in a single leap. When we ask for reasoning steps, each intermediate result it writes down becomes part of the context for the next prediction, so later steps can build on earlier ones. The prompt also steers the model toward patterns it saw in training, such as worked solutions and step-by-step explanations.

Consider this analogy: If I asked you to calculate 18 × 24 in your head, you might jump to an estimate. But if I asked you to "show your work," you'd naturally break it down: 18 × 20 = 360, plus 18 × 4 = 72, total 432. The Chain-of-Thought approach forces this same systematic breakdown in AI.

Basic Chain-of-Thought Techniques You Can Use Today

Let's move from theory to practice. Here are the fundamental Chain-of-Thought techniques that work with virtually any modern LLM, from ChatGPT and Claude to open-source models.

1. The Simple Step-by-Step Prompt

The most basic form of Chain-of-Thought is simply asking the model to think through a problem step by step. The magic phrase is often: "Let's think step by step."

Standard prompt (often fails): "If a bat and ball cost $1.10 total, and the bat costs $1.00 more than the ball, how much does the ball cost?"

Chain-of-Thought prompt (much better): "If a bat and ball cost $1.10 total, and the bat costs $1.00 more than the ball, how much does the ball cost? Let's think through this step by step."

With the standard prompt, many models quickly answer "10 cents" (which is incorrect—the correct answer is 5 cents). With the CoT prompt, the model is more likely to reason: "Let the ball cost x. Then the bat costs x + 1.00. Total cost is x + (x + 1.00) = 1.10. So 2x + 1.00 = 1.10, 2x = 0.10, x = 0.05. The ball costs 5 cents."
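As a minimal sketch, the step-by-step prompt can be built with a small helper, and the algebra in the worked answer can be checked directly. The helper name `with_cot` is an assumption made for this illustration, and no model is called here; the code only constructs the prompt and verifies the arithmetic.

```python
from fractions import Fraction


def with_cot(question: str) -> str:
    """Append the step-by-step trigger phrase to a question (hypothetical helper)."""
    return f"{question.strip()} Let's think through this step by step."


question = (
    "If a bat and ball cost $1.10 total, and the bat costs $1.00 more than "
    "the ball, how much does the ball cost?"
)
prompt = with_cot(question)

# Check the worked reasoning: x + (x + 1.00) = 1.10, so x = (1.10 - 1.00) / 2.
# Fractions avoid floating-point rounding in the check.
total = Fraction("1.10")
difference = Fraction("1.00")
ball = (total - difference) / 2
bat = ball + difference
assert ball + bat == total

print(prompt)
print(f"ball = ${float(ball):.2f}, bat = ${float(bat):.2f}")  # ball = $0.05, bat = $1.05
```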

2. Few-Shot Chain-of-Thought Examples

For more complex problems, providing examples of step-by-step reasoning can dramatically improve results. This is called "few-shot" Chain-of-Thought prompting.

Example template:

"Q: A restaurant has 23 tables. Each table can seat 4 people. On Monday, 15 tables were full. On Tuesday, 18 tables were full. How many more people ate at the restaurant on Tuesday than Monday?"

"A: Let's think step by step.

Monday: 15 tables × 4 people per table = 60 people

Tuesday: 18 tables × 4 people per table = 72 people

Difference: 72 - 60 = 12 more people on Tuesday

So the answer is 12."

"Now solve this: [Your new problem here]"

By showing the model exactly what kind of reasoning you want, you're providing a clear template for it to follow. This technique is particularly powerful for business applications like data analysis, scheduling problems, or financial calculations.
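The template above can be assembled programmatically when you reuse it often. The following is a rough sketch, not a standard API: `build_few_shot_prompt` is a hypothetical helper, and the warehouse question is an invented placeholder for "[Your new problem here]".

```python
def build_few_shot_prompt(examples, new_question):
    """Join worked (question, reasoning) pairs, then pose the new question."""
    blocks = [
        f"Q: {question}\nA: Let's think step by step.\n{reasoning}"
        for question, reasoning in examples
    ]
    # End with an open "A:" so the model continues in the same format.
    blocks.append(f"Q: {new_question}\nA: Let's think step by step.")
    return "\n\n".join(blocks)


restaurant_example = (
    "A restaurant has 23 tables. Each table can seat 4 people. On Monday, "
    "15 tables were full. On Tuesday, 18 tables were full. How many more "
    "people ate at the restaurant on Tuesday than Monday?",
    "Monday: 15 tables x 4 people per table = 60 people\n"
    "Tuesday: 18 tables x 4 people per table = 72 people\n"
    "Difference: 72 - 60 = 12 more people on Tuesday\n"
    "So the answer is 12.",
)

# Hypothetical new problem, invented for this illustration.
new_question = (
    "A warehouse ships 6 boxes per pallet. On Thursday it shipped 9 pallets "
    "and on Friday 14 pallets. How many more boxes shipped on Friday?"
)

prompt = build_few_shot_prompt([restaurant_example], new_question)
print(prompt)
```

Ending every worked example with the same closing phrase ("So the answer is ...") also makes the final answer easier to pull out of the model's response later.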

3. Zero-Shot Chain-of-Thought

Some of the most capable modern models (like GPT-4 and Claude 3) can perform Chain-of-Thought reasoning even without examples, simply when prompted with phrases like:

- "Let's work this out step by step to make sure we have the right answer."

- "We should think about this logically."

- "First, let's break down what we know..."

This zero-shot capability is particularly valuable because it doesn't require crafting detailed examples for every type of problem. The model has learned the pattern of step-by-step reasoning from its training data.

Advanced Reasoning Techniques Beyond Basic CoT

While basic Chain-of-Thought is powerful, researchers have developed more sophisticated techniques that build on this foundation. These advanced methods can handle even more complex reasoning tasks.

Self-Consistency: The Wisdom of Multiple Paths

Self-Consistency is a technique that combines Chain-of-Thought with multiple reasoning paths. Instead of generating just one Chain-of-Thought, the model generates several different reasoning paths, then chooses the answer that appears most consistently.

How it works:

- Generate multiple Chain-of-Thought reasoning paths for the same problem (usually 5-10)

- Extract the final answer from each reasoning path

- Select the answer that appears most frequently

This approach mimics how humans often solve difficult problems—we consider multiple approaches, then go with the one that seems most reliable. Self-Consistency has been shown to improve accuracy on mathematical and logical reasoning tasks by another 10-20% over standard Chain-of-Thought.
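The three steps above can be sketched in a few lines. This is an illustration under stated assumptions: the reasoning paths are hand-written stand-ins for what a model might return when sampled several times with some randomness, and the function names and the "answer is N" convention are choices made for this example.

```python
import re
from collections import Counter


def extract_answer(reasoning: str):
    """Return the first number that follows 'answer is', or None if absent."""
    match = re.search(r"answer is\s*\$?(-?\d+(?:\.\d+)?)", reasoning, re.IGNORECASE)
    return match.group(1) if match else None


def self_consistent_answer(paths):
    """Majority-vote over the final answers of several reasoning paths."""
    answers = [a for a in map(extract_answer, paths) if a is not None]
    if not answers:
        return None, 0.0
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / len(answers)


# Stand-ins for five sampled responses to the restaurant question.
sampled_paths = [
    "Monday: 15 x 4 = 60. Tuesday: 18 x 4 = 72. 72 - 60 = 12. So the answer is 12.",
    "18 - 15 = 3 extra tables, and 3 x 4 = 12. So the answer is 12.",
    "23 tables x 4 = 92 seats, 92 - 72 = 20. So the answer is 20.",
    "Tuesday had 72 people and Monday had 60. The answer is 12.",
    "15 + 18 = 33 tables, 33 x 4 = 132. So the answer is 132.",
]

answer, agreement = self_consistent_answer(sampled_paths)
print(answer, agreement)  # 12 0.6
```

The agreement ratio is a useful by-product: a low value suggests the paths disagree and the answer deserves a closer look.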

Tree of Thoughts: Exploring Multiple Branches

Tree of Thoughts (ToT) is one of the more sophisticated reasoning techniques currently available. Instead of a single chain of reasoning, ToT creates a tree structure where each node represents a partial solution, and branches represent different reasoning paths.

Imagine you're planning a complex project. You wouldn't just follow one linear plan—you'd consider multiple approaches, evaluate their pros and cons, and sometimes backtrack when a path doesn't work. Tree of Thoughts allows AI to do exactly this kind of exploratory reasoning.

Key components of Tree of Thoughts:

- Thought decomposition: Breaking the problem into intermediate steps

- State evaluation: Assessing how promising each partial solution is

- Search algorithm: Systematically exploring the tree (breadth-first or depth-first)

- Backtracking: Recognizing dead ends and trying alternative paths

While implementing full Tree of Thoughts requires more technical setup, you can approximate its benefits by prompting models to "consider multiple approaches" or "explore different ways to solve this problem."
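To make the components concrete, here is a toy sketch of the search loop. It assumes a deliberately simple puzzle (reach a target number from 1 using "+ 3" and "* 2") so that it runs without a model: in a real setup `propose` and `evaluate` would be LLM calls, whereas here they are deterministic stand-ins. All names are hypothetical.

```python
def propose(state):
    """Thought decomposition: generate the possible next steps from a state."""
    value, steps = state
    return [
        (value + 3, steps + (f"{value} + 3 = {value + 3}",)),
        (value * 2, steps + (f"{value} * 2 = {value * 2}",)),
    ]


def evaluate(state, target):
    """State evaluation: closer to the target is better; overshooting is a dead end."""
    value, _ = state
    if value > target:  # both operations only increase the value
        return float("-inf")
    return -(target - value)


def tree_of_thoughts(start, target, beam_width=2, max_depth=6):
    """Breadth-first search that keeps the `beam_width` best states per level."""
    frontier = [(start, ())]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in propose(state)]
        solved = [c for c in candidates if c[0] == target]
        if solved:
            return list(solved[0][1])
        # Drop dead ends, then keep only the most promising partial solutions.
        viable = [c for c in candidates if evaluate(c, target) != float("-inf")]
        viable.sort(key=lambda c: evaluate(c, target), reverse=True)
        frontier = viable[:beam_width]
        if not frontier:
            return None
    return None


print(tree_of_thoughts(1, 10, beam_width=2))  # ['1 + 3 = 4', '4 + 3 = 7', '7 + 3 = 10']
print(tree_of_thoughts(1, 10, beam_width=1))  # None
```

With `beam_width=1` the search behaves like a single greedy chain and runs into a dead end (1, 4, 8, then every next step overshoots 10). Keeping a second branch alive lets it fall back on 7 and reach the target, which plays the role of backtracking in this sketch.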

Graph of Thoughts: Connecting Ideas in Networks

An emerging technique, Graph of Thoughts (GoT), takes the tree concept further by allowing thoughts to connect in any pattern, not just hierarchical trees. This mirrors how human thinking often works: ideas connect in complex networks, with feedback loops, side connections, and synthesis of multiple concepts.

Graph of Thoughts is particularly promising for creative tasks, strategic planning, and problems where the solution emerges from the interplay of multiple considerations rather than linear logic.
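What separates a graph from a tree is that one thought can build on several earlier thoughts at once. The sketch below illustrates that idea with a toy task (sorting a list by splitting it, refining each part, and merging the results). It is not the API of any published framework: the `Thought` class and the `split`, `refine`, and `aggregate` functions are assumptions for this example, and plain Python stands in for model calls.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class Thought:
    name: str
    content: list
    parents: list = field(default_factory=list)  # names of thoughts this one builds on


def split(thought, parts):
    """Branch: break one thought into several smaller sub-problems."""
    size = -(-len(thought.content) // parts)  # ceiling division
    return [
        Thought(f"{thought.name}/part{i}",
                thought.content[i * size:(i + 1) * size],
                [thought.name])
        for i in range(parts)
    ]


def refine(thought):
    """Improve a single thought (here: sort its chunk)."""
    return Thought(f"{thought.name}/sorted", sorted(thought.content), [thought.name])


def aggregate(thoughts, name):
    """Synthesis: one new thought with several parents, which a tree cannot express."""
    merged = list(heapq.merge(*(t.content for t in thoughts)))
    return Thought(name, merged, [t.name for t in thoughts])


root = Thought("input", [7, 2, 9, 4, 1, 8])
sorted_parts = [refine(part) for part in split(root, 2)]
final = aggregate(sorted_parts, "merged")

print(final.content)  # [1, 2, 4, 7, 8, 9]
print(final.parents)  # ['input/part0/sorted', 'input/part1/sorted']
```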

Practical Applications: Where Chain-of-Thought Creates Real Value

Understanding these techniques is valuable, but seeing where they create practical business and personal value makes them essential. Here are real-world applications where reasoning techniques transform AI from helpful to indispensable.

Business Decision Support

Consider a business evaluating whether to launch a new product. A standard AI analysis might list pros and cons. A Chain-of-Thought analysis would:

- Break down market size estimation step by step

- Calculate development costs with transparent assumptions

- Project revenue based on logical pricing and adoption curves

- Consider competitive response scenarios systematically

- Weigh different factors based on their importance

The result isn't just an answer—it's a reasoning trail that business leaders can examine, challenge, and refine. This transparency builds trust in AI recommendations and helps teams understand the "why" behind suggestions.

Complex Problem Solving in Engineering and Science

In technical fields, the reasoning process is often as valuable as the answer. A materials scientist developing a new alloy needs to understand not just what composition works, but why it works. Chain-of-Thought prompting can guide AI through:

- Physical property calculations with shown formulas

- Trade-off analysis between different material characteristics

- Historical comparison with known successful alloys

- Manufacturing feasibility considerations

This approach turns AI from a black-box suggestion engine into a collaborative thinking partner that shows its work.

Educational Tutoring and Learning

One of the most powerful applications of Chain-of-Thought is in education. When students learn from AI, seeing the reasoning steps is far more valuable than just getting answers. A math tutor AI using CoT doesn't just give the solution to an equation—it demonstrates the solving process, explains why each step works, and can even generate similar problems for practice.

This application aligns perfectly with pedagogical best practices and transforms AI from a homework cheating tool into a legitimate educational resource.

Comparing Reasoning Techniques: When to Use Each Approach

With multiple reasoning techniques available, how do you choose the right one for your task? This comparison table will help you decide:

| Technique | Best for | Strength | Weakness | Example use |
|---|---|---|---|---|
| Standard Prompting | Simple facts, definitions, creative writing | Fast, concise answers | Prone to reasoning errors on complex problems | "What is the capital of France?" |
| Basic Chain-of-Thought | Mathematical problems, logical puzzles, step-by-step processes | Transparent reasoning, better accuracy on complex tasks | Can be verbose, not always necessary for simple tasks | "Calculate the return on investment for this business scenario" |
| Few-Shot CoT | Tasks requiring specific format, complex multi-step problems | Very reliable when good examples are provided | Requires crafting good examples, more tokens used | "Analyze this legal contract for potential issues" |
| Self-Consistency | High-stakes decisions, problems where accuracy is critical | Most reliable answer through consensus | Computationally expensive, slower response | "Medical diagnosis support where accuracy is vital" |
| Tree of Thoughts | Strategic planning, creative brainstorming, problems with multiple valid paths | Explores alternatives systematically, finds innovative solutions | Complex to implement, resource intensive | "Develop a marketing strategy for entering a new market" |

Troubleshooting: When Chain-of-Thought Doesn't Work

Like any technique, Chain-of-Thought prompting isn't a magic bullet. Here are common problems and how to address them:

Problem 1: The Model Gets Stuck in a Reasoning Loop

Symptom: The AI keeps repeating the same reasoning steps without progressing.

Solution: Add constraints like "Think through this in exactly 5 steps" or provide more structure: "First, identify the key variables. Second, establish relationships..."

Problem 2: The Reasoning Is Illogical or Contradictory

Symptom: The step-by-step explanation contains logical errors or contradicts itself.

Solution: Use verification prompts: "After each reasoning step, check if it logically follows from the previous step." Or try Self-Consistency to generate multiple reasoning paths and compare them.

Problem 3: The Model Summarizes Instead of Reasoning

Symptom: Instead of showing work, the AI gives a condensed explanation.

Solution: Be more explicit: "Show every calculation step" or "Write out the complete reasoning process without skipping any steps."

Problem 4: The Response Is Too Verbose

Symptom: The Chain-of-Thought becomes unnecessarily long and detailed.

Solution: Add constraints: "Be concise in your reasoning" or "Use bullet points for each major step."
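If you run into these problems repeatedly, it can help to keep the fixes in one place and append them to a prompt as needed. The sketch below is one possible arrangement: the symptom labels and the `add_fixes` helper are hypothetical, while the instruction texts are taken from the solutions above.

```python
# Symptom labels are hypothetical; the instructions come from the solutions above.
FIXES = {
    "loop": "Think through this in exactly 5 steps.",
    "contradiction": (
        "After each reasoning step, check if it logically follows "
        "from the previous step."
    ),
    "summary": "Write out the complete reasoning process without skipping any steps.",
    "verbose": "Be concise in your reasoning.",
}


def add_fixes(prompt, symptoms):
    """Append the corrective instruction for each observed symptom."""
    unknown = [s for s in symptoms if s not in FIXES]
    if unknown:
        raise ValueError(f"unknown symptom(s): {unknown}")
    return " ".join([prompt.strip()] + [FIXES[s] for s in symptoms])


base = "Calculate the return on investment for this business scenario."
print(add_fixes(base, ["loop", "contradiction"]))
```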

The Future of Reasoning in AI

Chain-of-Thought and related techniques represent just the beginning of making AI reasoning more human-like and reliable. Several exciting developments are on the horizon:

Integrated Reasoning in Model Architecture

Future LLMs may have reasoning capabilities built in rather than requiring special prompting. Some recent models are already trained to work through intermediate reasoning before answering, and this trend could make sophisticated reasoning as easy as asking a simple question.

Specialized Reasoning Models

Just as we have models specialized for coding (Code LLMs) or science, we may see models specifically optimized for different types of reasoning—mathematical, logical, spatial, or ethical reasoning.

Multi-Modal Reasoning

Current reasoning techniques focus primarily on text, but future systems will reason across modalities—connecting visual information with textual reasoning, or understanding spatial relationships described in text. This multi-modal reasoning will enable AI to solve more real-world problems.

Explainable AI Integration

Chain-of-Thought naturally supports explainable AI (XAI) goals by making reasoning transparent. Future systems might automatically generate reasoning trails for all significant conclusions, building trust and enabling human oversight.

Getting Started: Your Chain-of-Thought Action Plan

Ready to apply these techniques? Here's a practical action plan:

- Start simple: Add "Let's think step by step" to your next complex query

- Observe the difference: Compare responses with and without CoT prompts

- Experiment with few-shot: Create 2-3 examples for a recurring task type

- Try Self-Consistency: For important decisions, ask for multiple reasoning paths

- Document what works: Keep a prompt library of successful reasoning patterns

- Share and collaborate: Reasoning techniques improve when teams share best practices

Conclusion: Reasoning as a Collaborative Interface

Chain-of-Thought and advanced reasoning techniques represent more than just improved AI performance—they represent a shift toward more collaborative, transparent human-AI interaction. When AI shows its work, we can engage with it as thinking partners rather than oracle machines. We can spot errors in the reasoning process, suggest alternative approaches, and build on partial solutions.

This transparency is particularly crucial as AI systems take on more significant roles in business, education, and decision-making. The ability to trace how an AI arrived at a conclusion builds trust, enables oversight, and turns AI from a black box into a glass box where we can see the gears turning.

The most exciting aspect of these developments isn't just that AI gets better at reasoning—it's that we get better at understanding and working with AI. By learning to prompt for reasoning, we're learning to communicate more effectively with these systems, asking not just for answers but for understanding.

As you experiment with these techniques, remember that the goal isn't to make AI perfectly logical—it's to create more productive partnerships between human intuition and AI's pattern recognition capabilities. The future of AI isn't about machines that think perfectly, but about systems that think transparently enough for humans to collaborate with them effectively.

I've shared this article with my entire team. We're implementing AI across our organization, and understanding reasoning techniques is essential for getting value from our investment.

The ethical implications of transparent AI reasoning are worth exploring further. If we can see an AI's reasoning, we can identify and correct biases in its thinking process.

Excellent point, Caiden. Transparent reasoning is a key component of [ethical AI](/ethical-ai-explained-why-fairness-and-bias-matter). When we can see the reasoning steps, we can audit for bias, questionable assumptions, or flawed logic. This transparency is crucial for building trustworthy AI systems.

As a software developer, I can see immediate applications for debugging code with CoT. Asking AI to explain its reasoning when suggesting fixes would save so much time compared to trial and error.

The comparison between standard response and CoT response in the article's examples clearly shows why this matters. It's not just about getting the right answer - it's about building trust through transparency.

How do these techniques work with smaller, open-source models? I'm using Llama 2 7B for cost reasons - will CoT still provide benefits with less capable models?

Good question, Levi. Smaller models do benefit from CoT, but the improvement is less dramatic than with larger models. Basic CoT still helps, but advanced techniques like Tree of Thoughts may be beyond their capabilities. Focus on clear, simple step-by-step prompts and provide more explicit structure for smaller models.

The action plan at the end is practical and actionable. Too many articles leave you with theory but no clear next steps. I've already started my prompt library as suggested.