Chain-of-Thought Prompts: When and How to Use Them

Chain-of-Thought (CoT) prompting is one of the most effective techniques for improving how large language models handle multi-step reasoning. This guide explains what CoT prompting is, why it improves results on complex problems, and when to use it instead of standard prompting. It covers practical templates for different use cases, cost implications, applications in fields from healthcare to finance, and troubleshooting techniques for when CoT doesn't work as expected. Whether you're a beginner looking to improve your AI interactions or a professional building reliable reasoning systems, it gives you a framework for using Chain-of-Thought prompting effectively.

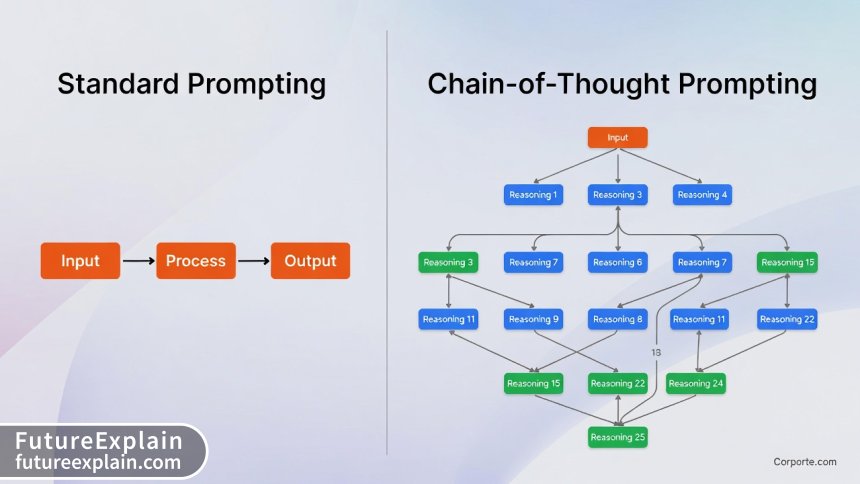

If you've ever asked an AI a complex question and received an answer that seemed to jump straight from problem to solution without showing its work, you've experienced the limitation of standard prompting. The AI might get the answer right sometimes, but when it's wrong, you have no insight into where the reasoning failed. This is exactly the problem that Chain-of-Thought (CoT) prompting solves.

Chain-of-Thought prompting changes how we interact with large language models. Unlike standard prompting, which asks for a direct answer, CoT prompts the AI to "think out loud" by showing its reasoning step by step, much as a human would work through a complex problem on paper. This simple technique has been shown to substantially improve performance on mathematical problems, logical reasoning, complex planning, and multi-step decision making.

In this comprehensive guide, we'll explore exactly what Chain-of-Thought prompting is, why it works so effectively, and most importantly—when you should use it versus standard prompting techniques. We'll provide practical templates you can use immediately, analyze the cost implications, showcase real-world applications across different industries, and equip you with troubleshooting strategies for when CoT doesn't perform as expected.

What Exactly Is Chain-of-Thought Prompting?

At its core, Chain-of-Thought prompting is a technique where you explicitly ask the AI model to show its reasoning process step by step before providing a final answer. The term was first introduced in a 2022 research paper by Google researchers who discovered that when language models are prompted to generate a "chain of thought," their ability to solve complex reasoning problems improves significantly.

The fundamental insight is simple but profound: By forcing the model to articulate its intermediate reasoning steps, we're essentially giving it more "thinking space" and reducing the cognitive load of jumping directly from question to answer. This is particularly important for problems that require multiple logical steps, mathematical operations, or sequential decision-making.

Here's a basic comparison:

Standard Prompt: "If a bookstore has 120 books and sells 30 each day, how many books remain after 3 days?"

Chain-of-Thought Prompt: the same question, followed by "Let's think through this step by step." The model then writes out its reasoning: "A bookstore starts with 120 books. It sells 30 books each day. After day 1: 120 - 30 = 90 books remain. After day 2: 90 - 30 = 60 books remain. After day 3: 60 - 30 = 30 books remain. So after 3 days, 30 books remain."

The CoT approach doesn't just provide the answer—it shows the mathematical reasoning that leads to the answer. This might seem trivial for simple problems, but for complex scenarios with multiple variables, dependencies, and logical operations, this step-by-step reasoning becomes crucial.
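As a minimal sketch of the difference, the Python below builds both prompt styles for the bookstore question and reproduces the day-by-day arithmetic from the worked reasoning. The function names (`standard_prompt`, `cot_prompt`, `books_remaining`) are illustrative assumptions, not part of any library, and the cue phrase is just one common wording.

```python
def standard_prompt(question: str) -> str:
    """Ask for the answer directly, with no reasoning cue."""
    return question


def cot_prompt(question: str) -> str:
    """Append a cue asking the model to reason before answering."""
    return f"{question}\nLet's think through this step by step."


def books_remaining(start: int, sold_per_day: int, days: int) -> list[int]:
    """Return the stock after each day, mirroring the step-by-step reasoning."""
    stock = start
    history = []
    for _ in range(days):
        stock -= sold_per_day
        history.append(stock)
    return history


question = ("If a bookstore has 120 books and sells 30 each day, "
            "how many books remain after 3 days?")
print(cot_prompt(question))
print(books_remaining(120, 30, 3))  # [90, 60, 30]
```

The intermediate values in the list are exactly the checkpoints a reader can compare against the model's written steps.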

Why Chain-of-Thought Works: The Psychology and Mechanics

Understanding why Chain-of-Thought prompting works requires looking at both the psychological principles it leverages and the technical mechanics of how language models process information.

The Psychological Foundation

From a cognitive psychology perspective, Chain-of-Thought prompting mirrors several well-established learning and problem-solving strategies:

- Working Memory Optimization: Breaking complex problems into smaller steps reduces cognitive load on working memory, making it easier to process each component correctly.

- Error Detection and Correction: When reasoning is made explicit, errors in logic or calculation become visible at intermediate steps rather than being buried in a final wrong answer.

- Metacognition: The process of explaining one's reasoning encourages metacognitive awareness—thinking about thinking—which improves problem-solving accuracy.

- Scaffolding: Step-by-step reasoning provides a scaffold that guides the problem-solving process, similar to how teachers scaffold complex concepts for students.

The Technical Mechanics

From a technical perspective, Chain-of-Thought prompting works because of how transformer-based language models process sequential information:

- Attention Mechanism Alignment: When generating step-by-step reasoning, the model's attention mechanism can focus more precisely on relevant parts of the problem at each stage.

- Context Window Utilization: CoT spreads the work across many generated tokens. Because each token the model writes becomes part of the context for the next one, intermediate results are available to later steps instead of having to be computed all at once.

- Pattern Completion vs. Computation: Language models are fundamentally pattern completers, not calculators. By framing mathematical and logical problems as sequential text patterns, CoT aligns better with the model's core capabilities.

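- Error Visibility: In standard prompting, a single error in the hidden reasoning contaminates the entire response with no trace of where it happened. With CoT, an error shows up at the step where it occurs, which makes it easier to catch, although an early mistake can still carry forward into later steps.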

Research from Anthropic and Google has reported accuracy gains of 20-40% on certain reasoning tasks compared to standard prompting, particularly for problems requiring multiple steps or complex logical operations. The size of the gain varies with the model and the benchmark.

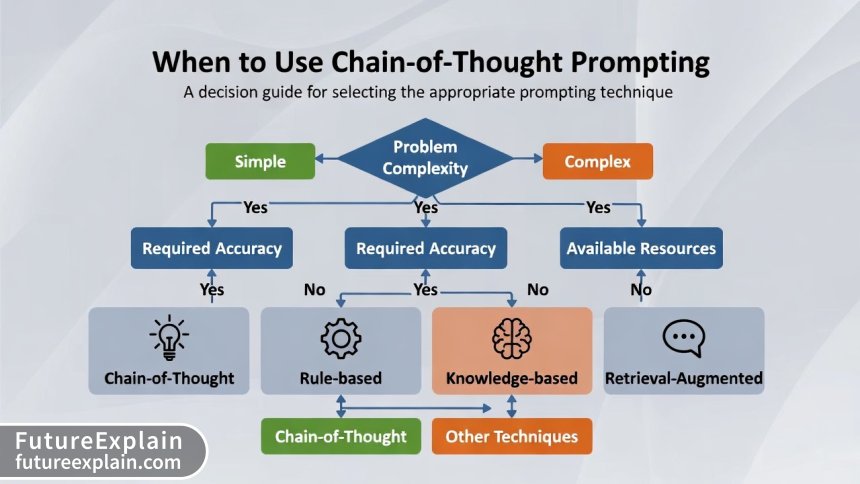

When to Use Chain-of-Thought Prompting: The Decision Framework

Not every prompt needs Chain-of-Thought reasoning. Using CoT unnecessarily can increase token usage and response time without providing meaningful benefits. Here's a practical decision framework to determine when Chain-of-Thought prompting is appropriate.

Definitely Use CoT When:

- Mathematical Calculations: Any problem involving arithmetic, algebra, statistics, or quantitative analysis

- Multi-Step Logical Reasoning: Problems requiring sequential logical deductions or conditional reasoning

- Planning and Scheduling: Tasks involving sequencing, resource allocation, or timeline planning

- Complex Decision Making: Scenarios with multiple variables, trade-offs, or competing considerations

- Error Analysis and Debugging: When you need to understand why something went wrong

- Educational Explanations: When the learning process is as important as the answer itself

Consider Standard Prompting When:

- Simple Fact Retrieval: Questions with direct, factual answers

- Creative Generation: Writing stories, poems, or creative content where spontaneous flow is preferred

- Brief Summarization: Condensing information without detailed analysis

- Low-Stakes Decisions: Simple choices without complex consequences

- Token-Limited Scenarios: When response length is severely constrained

- Real-Time Applications: Where latency is more critical than reasoning transparency

The Complexity-Transparency Tradeoff Matrix

To make this decision more systematic, consider where your task falls on these two dimensions:

1. Problem Complexity:

- Low: Single-step problems, direct lookups

- Medium: 2-3 step reasoning, simple calculations

- High: 4+ step reasoning, multiple variables, interdependent decisions

2. Need for Transparency:

- Low: Answer correctness is sufficient, process doesn't matter

- Medium: Some understanding of reasoning is helpful but not critical

- High: Must audit or verify the reasoning process

As a rule of thumb, use CoT when either dimension is "High," or when both are at least "Medium."
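The rule of thumb can be written as a small function. This is only a sketch of the matrix described above; the name `should_use_cot` and the string labels are assumptions chosen for illustration.

```python
LEVELS = {"low": 0, "medium": 1, "high": 2}


def should_use_cot(complexity: str, transparency: str) -> bool:
    """Apply the rule of thumb: either dimension High, or both at least Medium."""
    c = LEVELS[complexity.lower()]
    t = LEVELS[transparency.lower()]
    return c == 2 or t == 2 or (c >= 1 and t >= 1)


for complexity, transparency in [("low", "low"), ("medium", "medium"), ("low", "high")]:
    print(complexity, transparency, should_use_cot(complexity, transparency))
```

A function like this could sit in front of a prompt router, so that only queries that clear the threshold pay the extra token cost.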

Practical Chain-of-Thought Templates and Examples

Now let's look at practical templates you can adapt for different scenarios. These templates include both the prompt structure and example applications.

Template 1: The Mathematical Reasoning Template

Structure: "Let's solve this step by step. [Restate problem]. First, [step 1]. Then, [step 2]. Next, [step 3]. Finally, [combine steps to reach conclusion]. Therefore, [answer]."

Example Application - Business Financials:

Prompt: "Let's calculate the projected quarterly revenue step by step. Our SaaS company has 1,200 customers paying $50/month. We expect 15% customer growth this quarter with a 5% churn rate. First, calculate starting monthly revenue: 1,200 × $50 = $60,000. Next, calculate new customers: 1,200 × 15% = 180 new customers. Then, calculate lost customers: 1,200 × 5% = 60 lost customers. Now, ending customers: 1,200 + 180 - 60 = 1,320 customers. Final monthly revenue: 1,320 × $50 = $66,000. Quarterly revenue: $66,000 × 3 = $198,000. Therefore, projected quarterly revenue is $198,000."
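To check a chain like this one independently, the same steps can be reproduced in a few lines of Python. This is a sketch under the example's own simplification (the end-of-quarter customer count is applied to all three months); the function name and dictionary keys are hypothetical.

```python
def project_quarterly_revenue(customers: int, price: float,
                              growth_rate: float, churn_rate: float) -> dict:
    """Reproduce each step of the worked example so it can be checked."""
    new_customers = round(customers * growth_rate)    # customers gained
    lost_customers = round(customers * churn_rate)    # customers churned
    ending_customers = customers + new_customers - lost_customers
    monthly_revenue = ending_customers * price
    return {
        "new_customers": new_customers,
        "lost_customers": lost_customers,
        "ending_customers": ending_customers,
        "monthly_revenue": monthly_revenue,
        "quarterly_revenue": monthly_revenue * 3,
    }


result = project_quarterly_revenue(1200, 50, 0.15, 0.05)
print(result)
```

Each key corresponds to one step in the prompt, so a mismatch points directly at the step that went wrong.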

Template 2: The Decision Analysis Template

Structure: "We need to evaluate [decision] by considering [criteria 1], [criteria 2], and [criteria 3]. For criteria 1: [analysis]. For criteria 2: [analysis]. For criteria 3: [analysis]. Comparing trade-offs: [comparison]. Based on this analysis, [recommendation]."

Example Application - Technology Purchase:

Prompt: "Let's evaluate whether to purchase Software A or Software B for our team by considering cost, features, and integration. For cost: Software A is $50/user/month, Software B is $35/user/month but has a $500 setup fee. For 10 users annually: A = $6,000, B = $4,200 + $500 = $4,700. For features: Software A has advanced analytics missing from B, but B has better collaboration tools. For integration: Both integrate with our current stack, but A requires additional middleware. Comparing trade-offs: B saves $1,300 annually but lacks analytics our team needs. Based on this analysis, if analytics are critical, choose A despite higher cost; otherwise choose B for better value."
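The cost figures in this example are easy to verify in code. The sketch below assumes per-user monthly pricing and a one-time setup fee, as the example states; `annual_cost` is a hypothetical helper.

```python
def annual_cost(price_per_user_month: int, users: int, setup_fee: int = 0) -> int:
    """First-year cost: twelve months of per-user fees plus any one-time setup fee."""
    return price_per_user_month * users * 12 + setup_fee


cost_a = annual_cost(50, 10)                  # Software A
cost_b = annual_cost(35, 10, setup_fee=500)   # Software B
print(cost_a, cost_b, cost_a - cost_b)        # 6000 4700 1300
```

Because the setup fee is paid once, the gap widens to $1,800 in later years at the same prices, which is the kind of detail a step-by-step chain makes easy to spot.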

Template 3: The Troubleshooting Template

Structure: "To diagnose [problem], let's work through possible causes systematically. Could it be [cause 1]? Check by [test 1]. Result: [result]. Could it be [cause 2]? Check by [test 2]. Result: [result]. Could it be [cause 3]? Check by [test 3]. Result: [result]. Based on these tests, the most likely cause is [identified cause] because [reasoning]. Recommended fix: [solution]."
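A template like this can be filled programmatically when the same structure is reused across many cases. The sketch below is one possible way to do it; the function name, the example problem, and the causes and tests are all made up for illustration.

```python
def build_troubleshooting_prompt(problem: str, checks: list[tuple[str, str]]) -> str:
    """Fill the troubleshooting template from (cause, test) pairs."""
    lines = [f"To diagnose {problem}, let's work through possible causes systematically."]
    for cause, test in checks:
        lines.append(f"Could it be {cause}? Check by {test}. Result: [result].")
    lines.append("Based on these tests, state the most likely cause and the reasoning behind it.")
    lines.append("Recommended fix: [solution].")
    return " ".join(lines)


prompt = build_troubleshooting_prompt(
    "slow page loads",
    [("an unindexed database query", "profiling the slowest endpoint"),
     ("oversized images", "inspecting asset sizes in the browser")],
)
print(prompt)
```

The bracketed placeholders are left in on purpose: they mark where the model (or a human running the tests) fills in findings.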

Template 4: The Learning and Explanation Template

Structure: "To understand [concept], let's build up from foundational principles. First, [basic principle 1]. This matters because [reason]. Next, [basic principle 2]. This connects because [connection]. Building on these, [advanced concept]. Putting it all together: [synthesis]. Key takeaway: [main insight]."

Industry-Specific Applications of Chain-of-Thought

Chain-of-Thought prompting isn't just an academic curiosity—it has practical applications across industries. Here's how different sectors are leveraging this technique.

Healthcare: Diagnostic Reasoning

In healthcare applications, CoT enables more transparent diagnostic assistance:

Example Prompt: "Analyze this patient case step by step. Patient presents with fever (102°F), cough for 5 days, and fatigue. First, consider common causes: viral infection (likely given cough duration), bacterial infection (less likely without specific indicators). Check for red flags: no shortness of breath, oxygen saturation normal. Review risk factors: patient is 35, non-smoker, no chronic conditions. Compare symptom patterns: matches viral upper respiratory infection more than pneumonia or COVID-19 (no loss of taste/smell). Recommended: symptomatic treatment, rest, return if symptoms worsen. Caution: monitor for bacterial superinfection."

Why CoT Matters Here: Medical decisions require traceable reasoning for safety and regulatory compliance. CoT provides an audit trail that standard prompting cannot.

Finance: Investment Analysis

Financial institutions use CoT for transparent investment reasoning:

Example Prompt: "Evaluate this investment opportunity systematically. Company XYZ has P/E ratio of 15, debt/equity of 0.3, and 10% annual growth. Step 1: Compare P/E to industry average of 18 → undervalued. Step 2: Debt ratio is conservative → low financial risk. Step 3: Growth exceeds industry average of 7% → strong performance. Step 4: Consider macroeconomic factors: rising interest rates may pressure valuation. Step 5: Risk assessment: medium risk due to market conditions. Conclusion: Fundamentally strong but wait for better entry point if interest rates continue rising."

Education: Step-by-Step Tutoring

Educational platforms leverage CoT for personalized learning:

Example Prompt: "Explain how to solve quadratic equations step by step. First, recognize standard form: ax² + bx + c = 0. Example: 2x² + 5x - 3 = 0. Step 1: Identify a=2, b=5, c=-3. Step 2: Calculate discriminant: b² - 4ac = 25 - 4(2)(-3) = 25 + 24 = 49. Step 3: Since discriminant positive (49), two real solutions. Step 4: Apply quadratic formula: x = [-b ± √(b²-4ac)] / 2a. Step 5: Calculate: x = [-5 ± √49] / 4 = [-5 ± 7] / 4. Step 6: Two solutions: x = (-5+7)/4 = 2/4 = 0.5, and x = (-5-7)/4 = -12/4 = -3. Therefore, solutions are x = 0.5 and x = -3."
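A tutoring platform would want to verify worked solutions like this before showing them to students. The following is a minimal sketch of such a check using the same discriminant and formula steps; `solve_quadratic` is a hypothetical helper that handles real roots only.

```python
import math


def solve_quadratic(a: float, b: float, c: float) -> tuple:
    """Return the real solutions of ax^2 + bx + c = 0 in ascending order."""
    if a == 0:
        raise ValueError("a must be non-zero for a quadratic equation")
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        return ()  # no real solutions
    root = math.sqrt(discriminant)
    # A set removes the duplicate when the discriminant is zero.
    return tuple(sorted({(-b - root) / (2 * a), (-b + root) / (2 * a)}))


print(solve_quadratic(2, 5, -3))  # (-3.0, 0.5)
```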

Legal: Case Analysis and Precedent Evaluation

Legal professionals use CoT for structured case analysis:

Example Prompt: "Analyze whether this case qualifies for summary judgment step by step. First element: no genuine dispute of material fact. Plaintiff claims breach occurred on June 1; defendant admits this date. Second element: moving party entitled to judgment as matter of law. Plaintiff must show all elements of breach: contract existence (established), performance (plaintiff performed), breach (defendant admits), damages (plaintiff claims $50k loss). Third element: opposing party cannot prevail. Defendant's defense of impossibility requires showing unforeseeable event made performance impossible—not established here. Conclusion: summary judgment likely granted for plaintiff on liability, damages to be determined."

The Cost-Benefit Analysis of Chain-of-Thought

While Chain-of-Thought prompting offers significant benefits, it's important to understand the trade-offs, particularly regarding cost and performance.

Token Usage and Cost Implications

Chain-of-Thought prompts typically use 2-5x more tokens than standard prompts due to the detailed reasoning steps, and the multiple can be higher when the baseline prompt is very short. Let's analyze the cost implications:

- Standard Prompt (example): "Calculate quarterly revenue for 1,200 customers at $50/month with 15% growth." (10 tokens)

- CoT Prompt (same problem): "Let's calculate step by step: Starting customers = 1,200. Monthly fee = $50. Monthly revenue = 1,200 × 50 = $60,000. Quarterly revenue = $60,000 × 3 = $180,000. Growth = 15% = 0.15. New customers = 1,200 × 0.15 = 180. Total customers after growth = 1,200 + 180 = 1,380. New quarterly revenue = 1,380 × 50 × 3 = $207,000." (Approximately 60 tokens)

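Cost Calculation (illustrative token counts, priced at a flat $0.03 per 1,000 tokens, which was GPT-4's launch input rate; output tokens were billed at a higher rate):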

- Standard: 10 input tokens + 5 output tokens = 15 tokens ≈ $0.00045

- CoT: 60 input tokens + 40 output tokens = 100 tokens ≈ $0.003

- CoT is approximately 6.7x more expensive per query
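The figures above are consistent with a flat rate of $0.03 per 1,000 tokens applied to input and output alike. The sketch below reproduces them under that assumption; real pricing usually differs between input and output tokens and changes over time, so treat the constant as a placeholder.

```python
PRICE_PER_1K_TOKENS = 0.03  # assumption inferred from the figures above, not current pricing


def query_cost(input_tokens: int, output_tokens: int,
               price_per_1k: float = PRICE_PER_1K_TOKENS) -> float:
    """Cost of one query at a single flat per-token rate."""
    return (input_tokens + output_tokens) / 1000 * price_per_1k


standard = query_cost(10, 5)
cot = query_cost(60, 40)
print(f"standard: ${standard:.5f}")
print(f"cot:      ${cot:.5f}")
print(f"ratio:    {cot / standard:.1f}x")
```

Swapping in measured token counts and current rates for your own model gives a more realistic multiple than the rounded numbers used here.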

However, this simple cost comparison misses crucial factors:

- Accuracy Improvement: If CoT improves accuracy from 70% to 90%, you need fewer retries and corrections

- Error Detection Value: When errors occur with CoT, they're easier to identify and correct, saving downstream costs

- Process Transparency: For regulated industries or high-stakes decisions, the audit trail has inherent value

- Learning and Improvement: CoT outputs can train junior staff or improve processes

Performance Considerations

Beyond cost, consider these performance factors:

Latency: CoT responses take longer to generate due to more tokens. For real-time applications, this might be unacceptable.

Model Compatibility: Not all models respond equally well to CoT prompting. Larger, more capable models (GPT-4, Claude 2) show significant improvements with CoT, while smaller models may not benefit as much or might generate nonsensical reasoning chains.

Task Dependency: The benefits of CoT vary by task type. Mathematical and logical problems show the greatest improvement, while creative tasks might actually suffer from over-structured thinking.

Advanced Chain-of-Thought Techniques

Once you've mastered basic Chain-of-Thought prompting, several advanced techniques can further enhance performance.

1. Few-Shot Chain-of-Thought

Instead of just instructing the model to "think step by step," provide examples of complete CoT reasoning:

Example:

"Q: A pizza has 8 slices. If 3 people eat 2 slices each, how many slices remain? A: Let's think step by step. Total slices: 8. Person 1 eats 2 slices → 8-2=6 left. Person 2 eats 2 slices → 6-2=4 left. Person 3 eats 2 slices → 4-2=2 left. So 2 slices remain.

Q: A bookstore has 150 books. It sells 20 books on Monday and 30 on Tuesday. How many books remain? A: Let's think step by step..."

This technique is particularly effective for complex or domain-specific problems where the reasoning pattern isn't obvious.
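Assembling a few-shot CoT prompt is mostly string formatting. The sketch below shows one way to do it; the function name and the `Q:`/`A:` layout follow the example above, and nothing here calls a real model.

```python
def build_few_shot_cot_prompt(examples: list[tuple[str, str]], question: str,
                              cue: str = "Let's think step by step.") -> str:
    """Join worked (question, reasoning) pairs, then pose the new question."""
    blocks = [f"Q: {q}\nA: {cue} {reasoning}" for q, reasoning in examples]
    blocks.append(f"Q: {question}\nA: {cue}")  # left open for the model to continue
    return "\n\n".join(blocks)


examples = [(
    "A pizza has 8 slices. If 3 people eat 2 slices each, how many slices remain?",
    "Total slices: 8. Three people eat 2 slices each, so 3 x 2 = 6 slices are eaten. "
    "8 - 6 = 2. So 2 slices remain.",
)]
prompt = build_few_shot_cot_prompt(
    examples,
    "A bookstore has 150 books. It sells 20 books on Monday and 30 on Tuesday. "
    "How many books remain?",
)
print(prompt)
```

Ending the prompt on the cue phrase nudges the model to continue in the same reasoning style as the examples.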

2. Self-Consistency with Chain-of-Thought

Generate multiple CoT reasoning paths for the same problem, then choose the most consistent answer:

- Generate 3-5 different CoT responses to the same query

- Extract the final answer from each

- Select the answer that appears most frequently (majority vote)

Research shows this technique can improve accuracy by another 5-10% over single CoT, though it multiplies token costs.
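The voting step can be sketched in a few lines. The example below assumes the final answer is the last number in each response, which is a simplification, and it uses hard-coded sample responses in place of real model output.

```python
import re
from collections import Counter
from typing import Optional


def extract_final_answer(response: str) -> Optional[str]:
    """Take the last number in the response as its final answer (a simplification)."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", response)
    return numbers[-1] if numbers else None


def majority_vote(responses: list[str]) -> Optional[str]:
    """Return the most frequent final answer across reasoning paths."""
    answers = [a for a in map(extract_final_answer, responses) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Hypothetical sampled responses to the bookstore question (150 books, 20 and 30 sold).
responses = [
    "Start: 150. Monday: 150 - 20 = 130. Tuesday: 130 - 30 = 100. So 100 books remain.",
    "Sold in total: 20 + 30 = 50. Remaining: 150 - 50 = 100. Answer: 100",
    "Monday: 150 - 20 = 130. Answer: 130",
]
print(majority_vote(responses))  # 100
```

In practice the responses would come from sampling the same prompt several times with a non-zero temperature, and answer extraction would need to match the output format you ask for.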

3. Step-Back Prompting

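Combine CoT with higher-level abstraction. In the variant shown here, the model first does the CoT reasoning, then takes a "step back" to extract general principles or verify consistency. (Step-back prompting as originally published reverses the order: the model states the general principle first, then reasons from it.)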

"Think through this problem step by step. [Detailed CoT]. Now, step back: What general principle does this illustrate? Does the reasoning follow logical consistency? Are there any hidden assumptions?"

4. Iterative Refinement with CoT

Use CoT not just for the final answer, but for iterative improvement:

- Generate initial CoT solution

- Critique the reasoning chain for errors or gaps

- Generate improved CoT addressing the critique

- Repeat until satisfactory
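The loop above can be sketched as a function that takes the generation and critique steps as parameters. Both callables are assumptions: in a real system each would wrap a model call, while here two stand-in functions make the sketch runnable. A round limit replaces the open-ended "repeat until satisfactory".

```python
from typing import Callable, Optional


def refine(problem: str,
           generate: Callable[[str, Optional[str]], str],
           critique: Callable[[str, str], Optional[str]],
           max_rounds: int = 3) -> str:
    """Generate a solution, then alternate critique and regeneration."""
    solution = generate(problem, None)
    for _ in range(max_rounds):
        feedback = critique(problem, solution)
        if feedback is None:  # the critic found nothing to fix
            break
        solution = generate(problem, feedback)
    return solution


# Stand-in functions so the sketch runs without a model.
def fake_generate(problem: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return "Step 1: 8 - 2 = 6. Answer: 6"
    return "Step 1: 3 people x 2 slices = 6 eaten. Step 2: 8 - 6 = 2. Answer: 2"


def fake_critique(problem: str, solution: str) -> Optional[str]:
    if solution.endswith("Answer: 2"):
        return None
    return "Only one person's slices were subtracted; account for all three."


final = refine("A pizza has 8 slices. 3 people eat 2 slices each. How many remain?",
               fake_generate, fake_critique)
print(final)
```

Capping the number of rounds keeps token costs bounded even when the critic never signs off.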

Common Pitfalls and Troubleshooting

Even with Chain-of-Thought prompting, things can go wrong. Here are common issues and how to address them.

Problem 1: The Model "Short Circuits" Reasoning

Symptom: The model jumps to conclusions without proper intermediate steps.

Solution: Strengthen the CoT instruction and add explicit step requirements:

Weak: "Think through this step by step."

Stronger: "Show ALL intermediate calculations and reasoning steps. Do not skip any steps. Label each step clearly (Step 1, Step 2, etc.)."

Problem 2: Verbose but Incorrect Reasoning

Symptom: The model generates detailed but logically flawed reasoning.

Solution: Add verification steps and consistency checks:

"Think step by step, then verify: Does each step follow logically from the previous? Are the mathematical operations correct? Does the conclusion match all given information?"

Problem 3: Inconsistent Step Detail

Symptom: Some steps are overly detailed while others are vague.

Solution: Provide structural templates or examples:

"Use this exact structure: Step 1: [Objective]. Step 2: [Data extraction]. Step 3: [Calculation/analysis]. Step 4: [Interpretation]. Step 5: [Conclusion]."

Problem 4: The Model Gets "Stuck" in Reasoning Loops

Symptom: The reasoning repeats or cycles without progressing.

Solution: Add progression requirements and iteration limits:

"Think step by step, ensuring each step moves closer to the solution. If stuck after 3 steps, try a different approach."

Measuring Chain-of-Thought Effectiveness

How do you know if Chain-of-Thought prompting is actually helping? Here are key metrics to track:

1. Accuracy Improvement

Compare answer correctness with vs. without CoT on a representative test set. Measure both final answer accuracy and reasoning step accuracy.
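Reasoning step accuracy can be partly automated when the steps contain explicit arithmetic. The sketch below scans a reasoning chain for simple `a op b = c` statements and flags the ones that don't hold. It is a narrow heuristic under stated assumptions: it ignores numbers with thousands separators, units, and anything that is not a two-operand expression.

```python
import operator
import re

STEP_PATTERN = re.compile(
    r"(\d+(?:\.\d+)?)\s*([+\-*/])\s*(\d+(?:\.\d+)?)\s*=\s*(-?\d+(?:\.\d+)?)"
)
OPERATIONS = {"+": operator.add, "-": operator.sub,
              "*": operator.mul, "/": operator.truediv}


def find_arithmetic_errors(reasoning: str) -> list[str]:
    """Return every 'a op b = c' statement whose claimed result is wrong."""
    errors = []
    for left, op, right, claimed in STEP_PATTERN.findall(reasoning):
        statement = f"{left} {op} {right} = {claimed}"
        if op == "/" and float(right) == 0:
            errors.append(statement)  # division by zero is never a valid step
            continue
        actual = OPERATIONS[op](float(left), float(right))
        if abs(actual - float(claimed)) > 1e-9:
            errors.append(statement)
    return errors


chain = ("After day 1: 120 - 30 = 90. After day 2: 90 - 30 = 50. "
         "After day 3: 50 - 30 = 20.")
print(find_arithmetic_errors(chain))  # ['90 - 30 = 50']
```

Note that only the first wrong step is flagged here: the third step is internally consistent even though it builds on a bad value, which is why step-level and final-answer accuracy are worth tracking separately.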

2. Error Detectability

When answers are wrong, can you identify where the reasoning failed? CoT should make error localization easier.

3. Consistency Across Variations

Test whether CoT produces more consistent answers across slightly rephrased queries or different input formats.
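One simple way to quantify this, offered as a sketch rather than a standard metric, is the share of rephrasings that agree with the most common answer. The function name and sample answers below are illustrative.

```python
from collections import Counter


def consistency_rate(answers: list[str]) -> float:
    """Fraction of answers that match the most common answer."""
    if not answers:
        raise ValueError("need at least one answer")
    _, count = Counter(answers).most_common(1)[0]
    return count / len(answers)


# Hypothetical final answers from four rephrasings of the same question.
print(consistency_rate(["30", "30", "30", "60"]))  # 0.75
```

Comparing this rate for CoT and standard prompts on the same set of rephrasings shows whether the reasoning steps actually stabilize the output.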

4. User Confidence and Trust

Measure whether users report higher confidence in CoT-generated answers compared to standard responses.

5. Cost-Adjusted Performance

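Calculate (Accuracy Improvement %) / (Cost Increase %) to get a cost-effectiveness ratio. A ratio > 1 means accuracy improved proportionally more than cost increased. Treat the threshold as a rough heuristic: a lower ratio can still be worthwhile when errors are expensive or an audit trail is required.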
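The ratio is straightforward to compute once both percentages are defined. The sketch below assumes both are relative changes against the non-CoT baseline, which is one reasonable reading of the formula; the function name is hypothetical, and the sample inputs reuse the illustrative accuracy and cost figures from the cost section.

```python
def cost_adjusted_ratio(acc_base: float, acc_cot: float,
                        cost_base: float, cost_cot: float) -> float:
    """Relative accuracy gain divided by relative cost increase."""
    accuracy_gain_pct = (acc_cot - acc_base) / acc_base * 100
    cost_increase_pct = (cost_cot - cost_base) / cost_base * 100
    if cost_increase_pct <= 0:
        raise ValueError("CoT is not more expensive; the ratio is undefined")
    return accuracy_gain_pct / cost_increase_pct


# 70% -> 90% accuracy, $0.00045 -> $0.003 per query.
ratio = cost_adjusted_ratio(0.70, 0.90, 0.00045, 0.003)
print(f"{ratio:.3f}")
```

With these inputs the ratio comes out well below 1, which illustrates why the per-query number should be weighed alongside the downstream cost of wrong answers rather than used alone.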

Future Developments and Trends

Chain-of-Thought prompting is rapidly evolving. Here are key trends to watch:

1. Automated CoT Optimization

Tools that automatically determine when to use CoT and optimize the prompting strategy based on problem type and desired outcome.

2. Specialized CoT for Different Domains

Domain-specific CoT templates for medicine, law, engineering, etc., incorporating industry-standard reasoning patterns.

3. Integration with External Tools

CoT systems that can call calculators, databases, or verification tools at specific reasoning steps.

4. Multi-Modal Chain-of-Thought

Extending CoT to visual reasoning, where models explain their step-by-step analysis of images, diagrams, or videos.

5. Real-Time Collaborative CoT

Systems where humans and AI collaborate on reasoning chains, with each contributing different steps based on their strengths.

Getting Started: Practical Implementation Checklist

Ready to implement Chain-of-Thought prompting? Follow this checklist:

- Identify High-Value Use Cases: Start with problems where accuracy matters and current error rates are unacceptable.

- Start with Simple Templates: Use the basic templates provided earlier before experimenting with advanced techniques.

- Establish Baselines: Measure current performance without CoT to quantify improvement.

- Test Across Models: Different models respond differently to CoT—test your preferred model first.

- Monitor Costs: Track token usage and ensure CoT provides sufficient value to justify increased costs.

- Gather User Feedback: Do users find CoT outputs more trustworthy and useful?

- Iterate and Refine: Adjust prompts based on performance data and user feedback.

- Document Best Practices: Create internal guidelines for when and how to use CoT in your specific context.

Conclusion: Thinking Step by Step Towards Better AI Interactions

Chain-of-Thought prompting represents more than just a technical trick—it's a fundamental shift in how we approach human-AI collaboration. By encouraging AI systems to articulate their reasoning transparently, we gain several crucial advantages: improved accuracy on complex problems, better error detection and correction, enhanced trust through transparency, and valuable insights into how AI "thinks."

The key insight is that Chain-of-Thought isn't always the right choice, but when applied judiciously to appropriate problems, it can dramatically enhance AI performance and reliability. As AI systems become more integrated into critical decision-making processes across industries, techniques like CoT that promote transparency and rigorous reasoning will only grow in importance.

Start experimenting with Chain-of-Thought prompting today. Begin with simple mathematical or logical problems, use the templates provided, and pay attention to both the improvements in output quality and the increased understanding you gain into the AI's reasoning process. With practice, you'll develop an intuition for when CoT provides maximum value and how to structure prompts for different types of problems.

Remember: The goal isn't just to get correct answers, but to build AI systems whose reasoning we can understand, verify, and trust. Chain-of-Thought prompting is a powerful step in that direction.

Visuals Produced by AI


The legal application examples are good but need more caution. Legal reasoning involves precedent, statute interpretation, and jurisdiction-specific nuances that AI might miss even with CoT.

Valid point, Rajiv. Legal AI should always be assistive, not determinative. CoT can help lawyers understand the AI's reasoning to spot gaps or errors, but final legal judgment requires human expertise.

This article has become required reading for our AI team. The practical focus bridges the gap between research papers and implementation.

How does CoT interact with other prompting techniques like few-shot learning or role-playing? Can they be combined?

Leo, absolutely combinable! Few-shot CoT (providing reasoning examples) is particularly powerful. Role-playing + CoT: "As a financial analyst, think step by step about this investment..." The techniques are orthogonal and often synergistic.

The comparison of transformer mechanics was illuminating. Understanding WHY CoT works helps with troubleshooting when it doesn't.

For educational applications, how do you prevent students from just copying the CoT reasoning without understanding it?

Ananya, we use variations: Generate CoT for a similar problem, not the exact one. Or generate CoT with one intentional error for students to find. Or generate CoT but ask students to explain it in their own words.

We've implemented the measurement framework from the article. Tracking cost-adjusted performance has helped us optimize which queries get CoT vs standard prompting.