Autonomous Systems: Overview of Safety and Controls

This comprehensive guide explains how autonomous systems—from self-driving cars to delivery drones and industrial robots—ensure safety through multiple layers of controls. We break down the technology behind perception, decision-making, and action systems that work together to prevent accidents. Learn about the different levels of autonomy, safety protocols across industries, ethical frameworks for unavoidable situations, and real-world safety records. Whether you're curious about autonomous vehicles or considering implementing autonomous systems in business, this beginner-friendly guide provides clear explanations of the safety measures that make autonomous technology reliable and trustworthy.


Autonomous systems, such as self-driving cars, delivery drones, warehouse robots, and smart industrial equipment, represent one of the most exciting and transformative applications of artificial intelligence. However, their ability to operate safely without constant human supervision raises important questions. How do these systems ensure safety? What layers of protection prevent accidents? And what ethical frameworks guide their decision-making in complex situations?

In this comprehensive guide, we'll explore the safety architecture of autonomous systems in simple, beginner-friendly language. Whether you're curious about how self-driving cars navigate busy streets or considering implementing autonomous technology in your business, understanding these safety principles is essential.

What Are Autonomous Systems?

Before diving into safety, let's clarify what we mean by autonomous systems. These are machines or software that can perform tasks and make decisions without continuous human control. They range from semi-autonomous systems (which require occasional human oversight) to fully autonomous systems (which operate completely independently within defined parameters).

The most familiar examples include:

- Self-driving vehicles: Cars, trucks, and shuttles that navigate roads

- Aerial drones: Delivery, surveillance, and agricultural drones

- Industrial robots: Manufacturing, warehouse, and logistics robots

- Service robots: Cleaning, security, and hospitality robots

- Maritime systems: Autonomous ships and underwater vehicles

Each of these systems operates in different environments with unique challenges, but they all share common safety principles we'll explore throughout this guide.

The Levels of Autonomy: Understanding the Spectrum

Not all autonomous systems are created equal. SAE International (formerly the Society of Automotive Engineers) has developed a widely adopted classification, defined in its J3016 standard, with six levels (0-5). It was written for road vehicles, but it provides a useful framework for thinking about autonomy in other systems too:

- Level 0 (No Automation): The human performs all driving tasks

- Level 1 (Driver Assistance): The system can assist with either steering OR acceleration/braking

- Level 2 (Partial Automation): The system can control both steering AND acceleration/braking under specific conditions

- Level 3 (Conditional Automation): The system can handle all aspects of driving in certain conditions, but a human must be ready to take over when the system requests it

- Level 4 (High Automation): The system can handle all driving within a defined operational design domain (specific geographic areas or conditions) without human intervention

- Level 5 (Full Automation): The system can handle all driving in all conditions that a human driver could manage

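Most automated systems in use today operate between Levels 2 and 4: Level 2 driver assistance is common in consumer cars, while Level 4 services run only in limited areas. Level 5 remains the ultimate goal of complete autonomy in all conditions.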

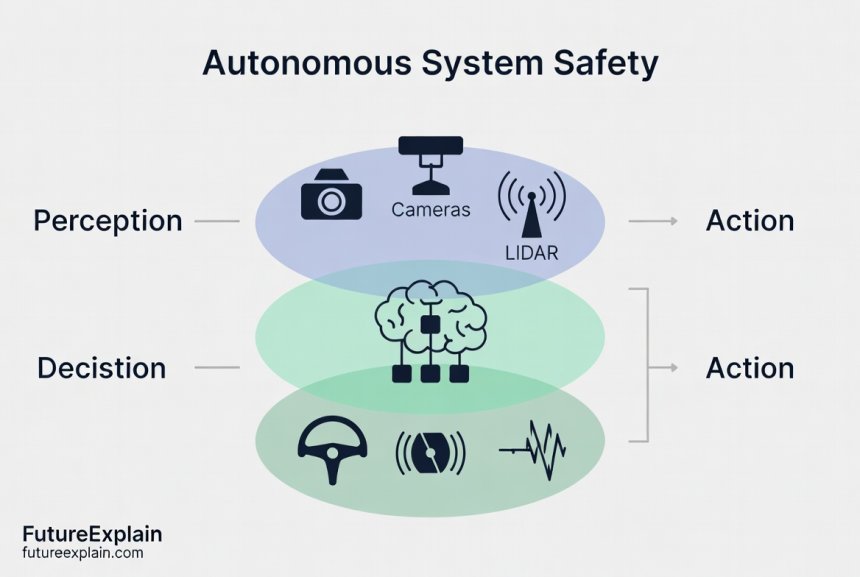

The Three-Layer Safety Architecture

Autonomous systems typically employ a three-layer safety architecture that mimics how humans perceive, decide, and act:

1. Perception Layer: Seeing and Understanding the World

The perception layer is how autonomous systems gather information about their environment. This involves multiple sensor types working together:

- Cameras: Provide visual information similar to human vision

- LiDAR (Light Detection and Ranging): Uses laser pulses to create precise 3D maps of surroundings

- Radar: Detects objects and measures their speed using radio waves

- Ultrasonic sensors: Short-range detection for parking and close maneuvers

- GPS and IMU (Inertial Measurement Units): Provide location and movement data

Safety Principle: Redundancy is key. If one sensor fails or provides unreliable data (say, a camera blinded by direct sunlight), other sensors can compensate. Sensor fusion, which combines data from multiple sources, produces a more accurate and reliable understanding of the environment than any single sensor could.
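A minimal Python sketch of the idea, assuming each sensor reports a distance to the obstacle ahead together with an uncertainty (variance) and a self-diagnostic health flag. The `RangeReading` type and its fields are hypothetical, and inverse-variance weighting is just one simple fusion rule; real systems typically use Kalman filters or learned fusion models.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RangeReading:
    sensor: str        # illustrative names: "camera", "lidar", "radar"
    distance_m: float  # estimated distance to the obstacle ahead
    variance: float    # reported uncertainty in m^2; lower means more trusted
    healthy: bool      # False if self-diagnostics flag the reading as unreliable


def fuse_ranges(readings: list[RangeReading]) -> Optional[float]:
    """Inverse-variance weighted average over healthy sensors.

    Returns None when no healthy sensor remains, so the caller can switch
    to a degraded mode instead of acting on no data at all.
    """
    usable = [r for r in readings if r.healthy and r.variance > 0]
    if not usable:
        return None
    weights = [1.0 / r.variance for r in usable]
    return sum(w * r.distance_m for w, r in zip(weights, usable)) / sum(weights)


readings = [
    RangeReading("camera", 40.0, 4.0, healthy=False),  # blinded by sunlight
    RangeReading("lidar", 30.2, 0.04, healthy=True),
    RangeReading("radar", 29.8, 0.25, healthy=True),
]
print(round(fuse_ranges(readings), 2))  # 30.14
```

The blinded camera is excluded, and the fused estimate leans toward the LiDAR reading because it reports the lowest variance.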

2. Decision Layer: Making Safe Choices

Once the system perceives its environment, it must decide what actions to take. This involves complex algorithms that:

- Predict the behavior of other objects (cars, pedestrians, etc.)

- Plan a safe path toward the destination

- Make real-time decisions about speed, direction, and responses to unexpected events
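The prediction step can be illustrated with the simplest possible model, assuming every tracked object keeps its current velocity. The `Track` type, the 2 m collision radius, and the 5 s horizon are illustrative assumptions; production predictors use learned, multi-hypothesis models. Sampling at fixed time steps is easy to read but can miss a very brief close pass if the step is too coarse.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    x: float   # position (m) relative to the ego vehicle, x pointing forward
    y: float   # lateral position (m), positive to the left
    vx: float  # velocity (m/s) relative to the ego vehicle
    vy: float


def predict(track: Track, t: float) -> tuple[float, float]:
    """Constant-velocity prediction of the object's position after t seconds."""
    return track.x + track.vx * t, track.y + track.vy * t


def time_to_collision(track: Track, radius_m: float = 2.0,
                      horizon_s: float = 5.0, step_s: float = 0.1) -> float:
    """First sampled time at which the predicted object comes within
    radius_m of the ego vehicle (the origin); math.inf if it never does."""
    for i in range(int(horizon_s / step_s) + 1):
        t = i * step_s
        px, py = predict(track, t)
        if math.hypot(px, py) <= radius_m:
            return t
    return math.inf


# An object 25 m ahead that the ego vehicle is closing on at 6 m/s
print(round(time_to_collision(Track(25.0, 0.0, -6.0, 0.0)), 1))  # 3.9
```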

Modern autonomous systems use machine learning models trained on millions of miles of driving data (for vehicles) or operational data (for other systems) to make these decisions. However, they also incorporate rule-based systems for critical safety functions.
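One common pattern is to let the learned planner propose a speed and have a simple, auditable rule clamp it. The sketch below assumes a hypothetical planner output in m/s and illustrative values for reaction time (0.5 s) and braking deceleration (6 m/s²); the physics is the standard reaction-plus-braking distance, v·t_r + v²/(2a).

```python
import math


def max_safe_speed(clear_distance_m: float, reaction_time_s: float = 0.5,
                   decel_mps2: float = 6.0) -> float:
    """Largest speed v whose reaction distance plus braking distance,
    v*t_r + v^2/(2a), fits within the clear distance ahead."""
    if clear_distance_m <= 0:
        return 0.0
    a, tr = decel_mps2, reaction_time_s
    # Positive root of v^2 + 2*a*tr*v - 2*a*d = 0
    return -a * tr + math.sqrt((a * tr) ** 2 + 2 * a * clear_distance_m)


def safety_filter(ml_speed_mps: float, clear_distance_m: float,
                  speed_limit_mps: float) -> float:
    """Rule-based layer: pass the learned planner's speed through only if it
    respects the hard limits; otherwise clamp it to the tightest one."""
    return max(0.0, min(ml_speed_mps, speed_limit_mps,
                        max_safe_speed(clear_distance_m)))


# The planner asks for 25 m/s, but only 30 m of road ahead is known to be clear
print(round(safety_filter(25.0, 30.0, 30.0), 1))  # 16.2
```

Keeping the rule this simple is the point: it can be reviewed and tested exhaustively even when the learned planner cannot.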

3. Action Layer: Executing Decisions Safely

The action layer translates decisions into physical movements or system responses. For a self-driving car, this means controlling:

- Steering

- Acceleration

- Braking

- Signaling

Safety here involves both precision control and redundancy. Critical systems often have backup components—if the primary braking system fails, a secondary system can take over.
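In software terms, the failover idea looks roughly like the sketch below. The `BrakeChannel` and `RedundantBrake` classes are hypothetical; in real vehicles, redundancy also extends to independent power supplies, wiring, and hardware, not just a software loop.

```python
class BrakeChannel:
    """Hypothetical brake actuator channel with a self-reported fault flag."""

    def __init__(self, name: str):
        self.name = name
        self.faulted = False
        self.last_command = 0.0

    def apply(self, pressure: float) -> bool:
        """Send a brake command clamped to [0, 1]; False if the channel is faulted."""
        if self.faulted:
            return False
        self.last_command = max(0.0, min(1.0, pressure))
        return True


class RedundantBrake:
    """Routes each command to the primary channel, failing over to the backup."""

    def __init__(self, primary: BrakeChannel, backup: BrakeChannel):
        self.channels = [primary, backup]

    def apply(self, pressure: float) -> str:
        for channel in self.channels:
            if channel.apply(pressure):
                return channel.name  # report which channel executed the command
        raise RuntimeError("all brake channels faulted")


primary, backup = BrakeChannel("primary"), BrakeChannel("backup")
brakes = RedundantBrake(primary, backup)
print(brakes.apply(0.4))  # primary
primary.faulted = True
print(brakes.apply(0.7))  # backup
```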

Safety by Design: Fundamental Principles

Beyond the three-layer architecture, autonomous systems incorporate several fundamental safety principles:

Defensive Operation

Autonomous systems are typically programmed to operate more conservatively than human operators. They maintain greater following distances, obey speed limits strictly, and avoid maneuvers whose estimated risk exceeds conservative thresholds. This approach, while sometimes frustrating to human drivers behind them, builds in a larger margin for error.
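One published way to make "greater following distances" precise is the minimum safe longitudinal distance from Mobileye's Responsibility-Sensitive Safety (RSS) model. It assumes the worst case: during its response time ρ the following car may keep accelerating, after which it brakes only gently, while the car ahead brakes as hard as possible. The parameter values below are illustrative assumptions, not values from any deployed system.

```python
def rss_min_gap(v_rear: float, v_front: float, rho: float = 0.5,
                a_accel_max: float = 2.0, b_rear_min: float = 4.0,
                b_front_max: float = 8.0) -> float:
    """Minimum safe following distance (m) per the RSS longitudinal rule.

    v_rear, v_front: speeds (m/s) of the following (ego) car and the car ahead.
    rho: response time (s) during which the ego car may still accelerate.
    b_rear_min: braking the ego car is guaranteed to apply afterwards (m/s^2).
    b_front_max: hardest braking the car ahead might apply (m/s^2).
    """
    v_after = v_rear + rho * a_accel_max  # ego speed at the end of the response time
    gap = (v_rear * rho
           + 0.5 * a_accel_max * rho ** 2
           + v_after ** 2 / (2 * b_rear_min)
           - v_front ** 2 / (2 * b_front_max))
    return max(0.0, gap)


print(rss_min_gap(25.0, 25.0))  # 58.1875
```

With these parameters, two cars travelling at 25 m/s (90 km/h) need a gap of about 58 m, somewhat more than the 50 m a human "two-second rule" would suggest.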

Graceful Degradation

When systems encounter problems they can't handle, they don't simply stop working. Instead, they degrade gracefully—reducing functionality while maintaining basic safety. For example, an autonomous vehicle might:

- First try to resolve the issue internally

- If that fails, alert the human operator (if present)

- If no human response, move to a minimal risk condition (like pulling over safely)

- If unable to pull over, activate hazard lights and secure the vehicle
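The ladder above can be sketched as a small state machine. The mode names and the single-step transitions are hypothetical simplifications; in practice each step would wait for a timeout and check many more signals before escalating.

```python
from enum import Enum, auto


class Mode(Enum):
    NOMINAL = auto()
    SELF_RECOVERY = auto()
    ALERT_OPERATOR = auto()
    MANUAL = auto()             # a human has taken over
    PULL_OVER = auto()          # minimal risk maneuver to the roadside
    STOP_WITH_HAZARDS = auto()  # controlled stop, hazard lights on


def next_mode(mode: Mode, fault_active: bool, recovered: bool,
              operator_responded: bool, pull_over_possible: bool) -> Mode:
    """One escalation step of the graceful-degradation ladder."""
    if mode is Mode.NOMINAL:
        return Mode.SELF_RECOVERY if fault_active else Mode.NOMINAL
    if mode is Mode.SELF_RECOVERY:
        return Mode.NOMINAL if recovered else Mode.ALERT_OPERATOR
    if mode is Mode.ALERT_OPERATOR:
        if operator_responded:
            return Mode.MANUAL
        return Mode.PULL_OVER if pull_over_possible else Mode.STOP_WITH_HAZARDS
    if mode is Mode.PULL_OVER and not pull_over_possible:
        return Mode.STOP_WITH_HAZARDS
    # MANUAL, PULL_OVER and STOP_WITH_HAZARDS persist until an external reset
    return mode


mode = Mode.NOMINAL
for _ in range(3):  # unrecoverable fault, nobody responds, shoulder available
    mode = next_mode(mode, fault_active=True, recovered=False,
                     operator_responded=False, pull_over_possible=True)
print(mode)  # Mode.PULL_OVER
```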

Fail-Operational vs. Fail-Safe

Different systems use different failure strategies:

- Fail-safe: When a failure occurs, the system shuts down safely

- Fail-operational: The system continues operating despite failures, using redundant components

Critical systems like aircraft autopilots and autonomous vehicles typically use fail-operational designs for essential functions.
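A classic building block of fail-operational design is triple modular redundancy: three independent channels compute the same value and a voter takes the majority. The sketch below uses a hypothetical agreement tolerance of 0.5 units; when no two channels agree, it returns `None` so the caller can fall back to a fail-safe reaction.

```python
from typing import Optional


def vote_2oo3(a: float, b: float, c: float, tol: float = 0.5) -> Optional[float]:
    """2-out-of-3 voter over three redundant channel outputs.

    Fail-operational: a single faulty channel is outvoted and operation
    continues with the average of the two agreeing channels.
    Returns None if no two channels agree within tol, signalling that the
    system should switch to its fail-safe behavior.
    """
    for x, y in ((a, b), (a, c), (b, c)):
        if abs(x - y) <= tol:
            return (x + y) / 2
    return None


print(vote_2oo3(10.0, 10.2, 55.0))  # channel c is outvoted
print(vote_2oo3(1.0, 5.0, 9.0))     # None: no majority, go fail-safe
```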

Industry-Specific Safety Approaches

Autonomous Vehicles

Self-driving cars have received the most public attention and regulatory scrutiny. Companies such as Waymo, Cruise, and Tesla have approached safety with different philosophies; two contrasting examples stand out:

Waymo's Safety Framework includes:

- Pre-defined operational design domains (where and when the vehicle can operate)

- Extensive simulation testing (billions of virtual miles)

- Progressive public road testing with safety drivers

- Detailed safety case documentation

Tesla's Approach focuses on:

- Gradual feature rollout to a massive fleet

- Continuous learning from real-world data

- Driver monitoring to ensure attention during assisted driving

Both approaches have strengths and limitations, highlighting that there's no single correct path to autonomous vehicle safety.

Delivery Drones

Autonomous drones face different safety challenges, primarily avoiding collisions and ensuring safe landings. Safety measures include:

- Geofencing to avoid restricted airspace

- Multiple redundant propulsion systems

- Parachute systems for critical failures

- Pre-defined emergency landing zones

- Real-time weather monitoring and avoidance
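Geofencing usually comes down to a point-in-polygon test. This sketch uses the standard even-odd ray-casting method and assumes coordinates have already been projected into a flat local frame in meters; real systems work with geodetic coordinates, altitude limits, and buffer margins around each zone. The zone below is made up for illustration.

```python
Point = tuple[float, float]


def inside_polygon(p: Point, polygon: list[Point]) -> bool:
    """Even-odd ray casting: cast a ray toward +x and count edge crossings."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # this edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def waypoint_allowed(p: Point, no_fly_zones: list[list[Point]]) -> bool:
    """Reject any planned waypoint that falls inside a restricted zone."""
    return not any(inside_polygon(p, zone) for zone in no_fly_zones)


restricted = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
print(waypoint_allowed((50.0, 50.0), [restricted]))   # False
print(waypoint_allowed((150.0, 50.0), [restricted]))  # True
```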

Industrial Robots

Factory and warehouse robots emphasize human-robot collaboration safety:

- Force-limiting technology that stops motion upon human contact

- Protected zones with laser scanners

- Speed and separation monitoring

- Emergency stop systems accessible throughout the workspace
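Speed and separation monitoring can be sketched as a function from the distance to the nearest detected person to an allowed fraction of full speed. The zone boundaries here (0.8 m and 2.0 m) and the linear ramp are illustrative assumptions; standards such as ISO/TS 15066 describe how to derive real separation distances from factors like robot speed, stopping time, and sensor latency.

```python
def allowed_speed_fraction(distance_m: float, protective_m: float = 0.8,
                           warning_m: float = 2.0) -> float:
    """Fraction of full robot speed allowed, given the nearest person's distance.

    Inside the protective zone the robot stops; between the protective and
    warning boundaries the speed ramps linearly; beyond the warning zone the
    robot may run at full speed.
    """
    if distance_m <= protective_m:
        return 0.0
    if distance_m >= warning_m:
        return 1.0
    return (distance_m - protective_m) / (warning_m - protective_m)


for d in (0.5, 1.4, 3.0):  # distances reported by a hypothetical laser scanner
    print(d, round(allowed_speed_fraction(d), 2))
```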

The Ethical Dimension: Decision-Making in Unavoidable Situations

One of the most challenging aspects of autonomous system safety is ethical decision-making in unavoidable accident scenarios—often called the "trolley problem" for autonomous vehicles.

If an autonomous vehicle faces an unavoidable collision, how should it decide between options that might harm different people? While this scenario is statistically extremely rare, it raises important ethical questions that researchers and companies are addressing through:

Ethical Frameworks

Different ethical approaches inform programming decisions:

- Utilitarian: Minimize total harm

- Deontological: Follow rules (like "don't swerve into oncoming traffic")

- Virtue ethics: Act as a responsible driver would

- Legal compliance: Follow traffic laws strictly

In practice, most autonomous systems prioritize avoiding accidents altogether through defensive driving, with the aim of making these extreme scenarios rarer still.

Transparency and Public Input

Many companies and researchers advocate for transparent ethical frameworks and public discussion about these decisions. The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems has published extensive guidelines on this topic, emphasizing the need for transparent decision-making processes that can be audited and understood by the public.

Testing and Validation: Proving Safety Before Deployment

How do we know autonomous systems are safe before they're deployed? Through extensive testing that combines multiple methods:

1. Simulation Testing

Billions of miles are driven in virtual environments that can test rare scenarios (like a child running into the street) that would be dangerous or impractical to test physically.
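Simulation lets engineers sweep a scenario's parameters thousands of times. The toy Monte Carlo sketch below samples a hypothetical "child steps out" scenario and checks whether the vehicle can stop in time; every range (speed, gap, delay, braking) is an invented illustration, and real simulators also model sensors, other road users, and vehicle dynamics.

```python
import random


def stops_in_time(speed_mps: float, gap_m: float, reaction_s: float,
                  decel_mps2: float) -> bool:
    """True if reaction distance plus braking distance fits within the gap."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2) <= gap_m


def run_scenarios(n: int, seed: int = 0) -> float:
    """Fraction of n randomly sampled runs in which the vehicle stops in time."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    ok = 0
    for _ in range(n):
        speed = rng.uniform(5.0, 14.0)    # vehicle speed, m/s (about 18-50 km/h)
        gap = rng.uniform(5.0, 40.0)      # distance when the child appears, m
        reaction = rng.uniform(0.2, 0.6)  # perception plus actuation delay, s
        decel = rng.uniform(4.0, 8.0)     # achievable braking, m/s^2
        ok += stops_in_time(speed, gap, reaction, decel)
    return ok / n


print(f"stopped in time in {run_scenarios(10_000):.1%} of sampled runs")
```

The failing samples are the valuable output: each one is a concrete parameter combination engineers can replay and study.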

2. Closed-Course Testing

Purpose-built test tracks allow realistic testing in controlled environments with professional safety drivers ready to take over.

3. Public Road Testing with Safety Drivers

Gradual introduction to real-world conditions with trained safety operators who monitor the system and can intervene if needed.

4. Shadow Mode Testing

The autonomous system makes decisions but doesn't control the vehicle, allowing comparison between what the system would have done and what the human driver actually did.
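The comparison at the heart of shadow mode can be sketched as follows. Representing decisions as discrete action labels is a simplifying assumption, and the `ShadowSample` type is hypothetical; real shadow testing compares full trajectories and timing.

```python
from dataclasses import dataclass


@dataclass
class ShadowSample:
    timestamp_s: float
    human_action: str   # what the driver actually did, e.g. "brake", "keep"
    system_action: str  # what the autonomous stack would have done


def disagreements(log: list[ShadowSample]) -> tuple[float, list[ShadowSample]]:
    """Return the disagreement rate and the samples engineers should review."""
    flagged = [s for s in log if s.human_action != s.system_action]
    rate = len(flagged) / len(log) if log else 0.0
    return rate, flagged


log = [
    ShadowSample(0.0, "keep", "keep"),
    ShadowSample(0.1, "brake", "brake"),
    ShadowSample(0.2, "brake", "keep"),  # the system would not have braked
    ShadowSample(0.3, "keep", "keep"),
]
rate, flagged = disagreements(log)
print(rate, [s.timestamp_s for s in flagged])  # 0.25 [0.2]
```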

5. Gradual Deployment

Starting with simple environments (suburban streets in good weather) and gradually expanding to more complex conditions as the system proves reliable.
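Gradual deployment can be expressed as an operational design domain (ODD) that widens over time. The fields and values below are hypothetical; real ODD definitions cover many more factors, such as road markings, traffic density, and infrastructure.

```python
from dataclasses import dataclass, field


@dataclass
class OperationalDesignDomain:
    allowed_weather: set[str] = field(default_factory=lambda: {"clear"})
    allowed_road_types: set[str] = field(default_factory=lambda: {"suburban"})
    max_speed_limit_kph: float = 50.0
    daylight_only: bool = True

    def permits(self, weather: str, road_type: str,
                speed_limit_kph: float, is_daylight: bool) -> bool:
        """True only if every current condition lies inside the domain."""
        return (weather in self.allowed_weather
                and road_type in self.allowed_road_types
                and speed_limit_kph <= self.max_speed_limit_kph
                and (is_daylight or not self.daylight_only))


# Phase 1: suburban streets in good weather; phase 2 widens the domain
phase1 = OperationalDesignDomain()
phase2 = OperationalDesignDomain(allowed_weather={"clear", "light_rain"},
                                 allowed_road_types={"suburban", "urban"},
                                 max_speed_limit_kph=70.0, daylight_only=False)
print(phase1.permits("light_rain", "suburban", 40.0, True))  # False
print(phase2.permits("light_rain", "urban", 60.0, False))    # True
```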

Regulatory Framework and Standards

Safety doesn't exist in a vacuum—it's supported by regulations and standards. Key frameworks include:

International Standards

- ISO 26262: Functional safety for road vehicles

- ISO 21448 (SOTIF): Safety of the intended functionality

- UL 4600: Standard for evaluation of autonomous products
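As a taste of how these standards work, ISO 26262 classifies each hazard by severity (S1-S3), exposure (E1-E4), and controllability (C1-C3), then looks up an Automotive Safety Integrity Level (ASIL) from A (lowest) to D (highest), or QM when ordinary quality management suffices. The sketch relies on the widely noted observation that the standard's lookup table matches a simple sum of the class numbers; it ignores the S0/E0/C0 classes, and the standard itself should be checked before relying on it.

```python
def asil(severity: int, exposure: int, controllability: int) -> str:
    """ASIL for hazard classes S1-S3, E1-E4, C1-C3 via the sum shortcut:
    10 -> D, 9 -> C, 8 -> B, 7 -> A, anything lower -> QM."""
    if not (1 <= severity <= 3 and 1 <= exposure <= 4 and 1 <= controllability <= 3):
        raise ValueError("expected S1-S3, E1-E4, C1-C3")
    total = severity + exposure + controllability
    return {10: "ASIL D", 9: "ASIL C", 8: "ASIL B", 7: "ASIL A"}.get(total, "QM")


# Severe injury likely (S3), frequent situation (E4), hard to control (C3)
print(asil(3, 4, 3))  # ASIL D
```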

Government Regulations

Different countries approach regulation differently:

- United States: State-by-state regulations with federal guidelines from NHTSA

- European Union: Type-approval system with comprehensive safety requirements

- China: National standards with designated test zones

Human-Machine Interface: The Safety Critical Connection

For systems that require human oversight or intervention, the interface between human and machine is itself a safety-critical component. Effective interfaces must:

- Clearly communicate system status and capabilities

- Provide appropriate warnings with sufficient lead time

- Monitor human readiness to take over

- Prevent mode confusion (an operator misunderstanding what the system is currently doing)

Research shows that poorly designed interfaces can actually decrease safety by creating overreliance or confusion.
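The "warnings with sufficient lead time" and "monitor human readiness" requirements can be sketched as an escalation policy. Every threshold below is an illustrative assumption, not a value from any regulation or product.

```python
def hmi_response(eyes_off_road_s: float, takeover_requested: bool,
                 seconds_since_request: float) -> str:
    """Escalating driver alerts (thresholds are hypothetical)."""
    if takeover_requested and seconds_since_request >= 10.0:
        return "begin_minimal_risk_maneuver"  # driver never took over
    if takeover_requested or eyes_off_road_s >= 6.0:
        return "audible_and_haptic_alert"
    if eyes_off_road_s >= 3.0:
        return "visual_alert"
    return "status_display_only"


print(hmi_response(4.0, False, 0.0))  # visual_alert
```

Escalating in steps, rather than jumping straight to an alarm, gives an attentive driver time to respond without startling them.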

Cybersecurity: Protecting Autonomous Systems from Attack

Safety isn't just about preventing accidental failures—it's also about preventing malicious attacks. Autonomous systems face unique cybersecurity challenges:

- Sensor spoofing (tricking cameras or LiDAR)

- GPS jamming or spoofing

- Communication channel attacks

- Malicious software updates

Security measures include encrypted communications, secure boot processes, intrusion detection systems, and regular security updates—similar to best practices in other connected systems but with higher stakes due to safety implications.
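A closely related measure, message authentication, can be sketched with Python's standard `hmac` module: each message carries a tag computed with a shared key plus a counter, so forged, tampered, or replayed commands are rejected. Note that HMAC provides integrity and authenticity, not secrecy; encryption would be layered on top. The key and message format here are hypothetical, and real systems keep keys in secure hardware.

```python
import hashlib
import hmac
import struct
from typing import Optional

KEY = b"hypothetical-shared-key"  # placeholder only; never hard-code real keys


def sign(counter: int, payload: bytes, key: bytes = KEY) -> bytes:
    """8-byte big-endian counter + payload, followed by an HMAC-SHA256 tag."""
    body = struct.pack(">Q", counter) + payload
    return body + hmac.new(key, body, hashlib.sha256).digest()


class Receiver:
    def __init__(self, key: bytes = KEY):
        self.key = key
        self.last_counter = -1

    def accept(self, message: bytes) -> Optional[bytes]:
        """Return the payload if the tag is valid and the counter is fresh."""
        if len(message) < 8 + 32:
            return None
        body, tag = message[:-32], message[-32:]
        expected = hmac.new(self.key, body, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):  # constant-time comparison
            return None  # forged or tampered
        (counter,) = struct.unpack(">Q", body[:8])
        if counter <= self.last_counter:
            return None  # replayed or stale
        self.last_counter = counter
        return body[8:]


rx = Receiver()
msg = sign(1, b"brake")
print(rx.accept(msg))  # b'brake'
print(rx.accept(msg))  # None: replay rejected
```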

Real-World Safety Performance: What the Data Shows

Despite media attention on accidents involving autonomous systems, the available data points to promising safety records:

Autonomous Vehicle Accident Rates

According to Waymo's 2023 safety report, their autonomous vehicles have driven millions of miles with accident rates lower than human drivers in comparable conditions. However, direct comparison is challenging due to different driving environments and reporting standards.
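Part of the difficulty is statistical: crash counts are small, so rates carry wide uncertainty. The sketch below computes a crash rate per million miles with an exact (Garwood) Poisson confidence interval using only the standard library. The mileage and crash numbers are hypothetical, not figures from any company's report.

```python
import math


def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam), summed in log space to avoid underflow."""
    if lam <= 0.0:
        return 1.0
    log_lam = math.log(lam)
    total = sum(math.exp(-lam + i * log_lam - math.lgamma(i + 1))
                for i in range(k + 1))
    return min(1.0, total)


def _root_decreasing(f, target: float, hi: float) -> float:
    """Bisection for f(lam) == target, where f decreases as lam grows."""
    lo = 0.0
    for _ in range(200):
        mid = (lo + hi) / 2
        if f(mid) > target:
            lo = mid  # still above target: the root lies at a larger lam
        else:
            hi = mid
    return (lo + hi) / 2


def rate_per_million(events: int, miles: float, conf: float = 0.95):
    """Point estimate and exact two-sided interval, in events per million miles."""
    alpha = 1.0 - conf
    exposure = miles / 1e6          # exposure in millions of miles
    hi = 10.0 * events + 50.0       # comfortably above the upper bound
    upper = _root_decreasing(lambda lam: poisson_cdf(events, lam), alpha / 2, hi)
    lower = 0.0 if events == 0 else _root_decreasing(
        lambda lam: poisson_cdf(events - 1, lam), 1 - alpha / 2, hi)
    return events / exposure, lower / exposure, upper / exposure


# Hypothetical fleet: zero crashes of some type in one million miles
print(rate_per_million(0, 1_000_000))  # (0.0, 0.0, ~3.69)
```

Even a perfect record over a million miles leaves an upper bound of roughly 3.7 events per million miles at 95% confidence (a one-sided bound gives about 3.0, the familiar "rule of three"), which is why large mileage totals matter before comparing with human benchmarks.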

Drone Safety Records

Commercial drone operations have maintained excellent safety records, with most incidents involving hobbyist drones rather than autonomous commercial systems.

Industrial Robot Safety

Modern collaborative robots (cobots) have safety records superior to traditional industrial robots, thanks to advanced safety systems that detect and respond to human presence.

The Future of Autonomous System Safety

Safety technology continues to evolve with several promising developments:

Vehicle-to-Everything (V2X) Communication

Future autonomous vehicles won't just rely on their own sensors—they'll communicate with other vehicles, infrastructure, and pedestrians to create a cooperative safety network.

Explainable AI

New AI techniques that can explain their decisions could improve safety by allowing human operators and regulators to understand why systems made particular choices.

Formal Verification

Mathematical proof techniques that can guarantee certain safety properties, moving beyond statistical confidence to provable safety for critical functions.
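One formal technique, explicit-state model checking, can be sketched on a toy problem: explore every reachable state of a two-way traffic-light controller and check that conflicting directions are never both non-red. The model and both guard rules are invented for illustration; industrial tools (model checkers, theorem provers) handle vastly larger systems.

```python
from collections import deque
from typing import Callable, Optional

State = tuple[str, str]  # (north-south light, east-west light)
NEXT = {"R": "G", "G": "Y", "Y": "R"}


def successors(state: State, guard: Callable[[str], bool]) -> list[State]:
    """Interleaving semantics: each step, exactly one light may advance.
    A red light may turn green only if guard(other light) allows it."""
    ns, ew = state
    out = []
    if ns != "R" or guard(ew):
        out.append((NEXT[ns], ew))
    if ew != "R" or guard(ns):
        out.append((ns, NEXT[ew]))
    return out


def safe(state: State) -> bool:
    return "R" in state  # at least one direction must be red


def check(initial: State, guard: Callable[[str], bool]) -> Optional[list[State]]:
    """Breadth-first search over all reachable states. Returns None if the
    invariant always holds, otherwise a shortest counterexample trace."""
    parent: dict[State, Optional[State]] = {initial: None}
    queue = deque([initial])
    while queue:
        s = queue.popleft()
        if not safe(s):
            trace = []
            node: Optional[State] = s
            while node is not None:
                trace.append(node)
                node = parent[node]
            return trace[::-1]
        for t in successors(s, guard):
            if t not in parent:
                parent[t] = s
                queue.append(t)
    return None


def correct_guard(other: str) -> bool:
    return other == "R"  # turn green only if the cross street is red


def buggy_guard(other: str) -> bool:
    return other != "G"  # also allows green while the cross street is yellow


print(check(("R", "R"), correct_guard))  # None: property holds in every state
print(check(("R", "R"), buggy_guard))    # a trace ending in ('Y', 'G')
```

Unlike testing, the search covers every reachable state, so the `None` result for the correct guard is a proof for this model, and the counterexample pinpoints exactly how the buggy rule fails.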

Adaptive Safety Systems

Systems that learn from near-misses and adapt their behavior to prevent similar situations in the future.

Practical Safety Considerations for Businesses

If you're considering implementing autonomous systems in your business, here are key safety considerations:

- Start with a safety assessment: Identify potential hazards specific to your application

- Choose appropriate autonomy levels: Higher isn't always better—match the autonomy level to the task and environment

- Implement graduated deployment: Start small, learn, and expand gradually

- Train your team: Ensure human operators understand system capabilities and limitations

- Establish maintenance protocols: Autonomous systems require regular maintenance and updates

- Plan for failures: Have clear procedures for when systems need human intervention

- Document everything: Maintain records of safety decisions, incidents, and improvements

Common Misconceptions About Autonomous System Safety

Let's address some common misunderstandings:

Myth: Autonomous systems must be perfect to be deployed.

Reality: They need to be safer than human operators in the same conditions, not perfect.

Myth: One accident proves the technology is unsafe.

Reality: Safety is statistical—what matters is the rate over millions of operations, not individual incidents.

Myth: Autonomous systems can handle any situation.

Reality: They operate within defined parameters and conditions (operational design domains).

Myth: Human oversight eliminates all risk.

Reality: Poorly designed human-machine interfaces can actually increase risk through mode confusion or overreliance.

Conclusion: Building Trust Through Transparent Safety

Autonomous system safety isn't a single feature or component—it's a comprehensive approach that permeates every aspect of design, testing, and operation. From redundant sensor systems to ethical decision frameworks, from simulation testing to real-world validation, safety requires multiple layers of protection.

The journey toward fully autonomous systems continues, with safety as the guiding principle at every step. As these technologies evolve, transparent safety practices, clear communication about capabilities and limitations, and ongoing public dialogue will be essential for building the trust needed for widespread adoption.

Whether you're a consumer curious about self-driving cars, a business considering automation, or simply someone interested in the future of technology, understanding these safety principles helps separate hype from reality and appreciate the careful engineering behind autonomous systems.

What about accessibility? How do autonomous systems handle pedestrians with disabilities or unusual mobility devices?

Critical consideration, Ave. Companies are specifically testing with diverse pedestrian scenarios including wheelchair users, people with guide dogs, and children. Some use detailed classification to identify mobility aids and predict their movement patterns. It's an area of active research and improvement.

The adaptive safety systems concept is fascinating. Learning from near-misses could make systems continuously safer.

How do autonomous systems handle road debris or unexpected obstacles like fallen trees?

Ethan, perception systems are trained on diverse datasets including debris. The challenge is classification - is that a plastic bag (drive over) or a rock (avoid)? Conservative systems treat unknown objects as hazards until proven otherwise.

The misconceptions section is important. Media often focuses on rare failures without context about overall safety rates.

What about cost? All this redundancy and testing must make autonomous systems expensive. Will they ever be affordable for average consumers?

Logan, costs are dropping rapidly. LiDAR sensors that cost $75,000 a few years ago are now under $1,000. Mass production and technological advances are making autonomy increasingly affordable.

The explainable AI future is exciting. If we can understand why systems make decisions, we can trust them more and improve them faster.