Prompt Engineering in 2025: Patterns That Actually Work

This guide covers prompt engineering patterns that work well with 2025's language models. We move beyond basic prompting to examine structured approaches like Chain-of-Thought, Tree-of-Thoughts, and Constitutional AI prompting. The article provides practical templates, real-world examples, and evidence-based strategies for improving reasoning, reducing hallucinations, and achieving more consistent results. We also cover emerging techniques like prompt compression, multi-agent debates, and self-correction loops, with specific guidance on when to use each pattern and how to measure its effectiveness. Whether you're a beginner or an experienced user, you'll learn actionable patterns that deliver better results with today's AI systems.

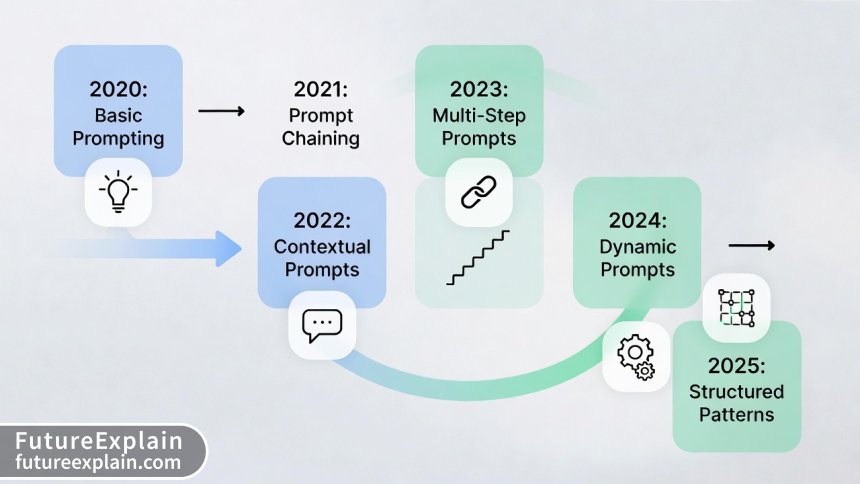

Introduction: The Evolution of Prompt Engineering

Prompt engineering has transformed dramatically since the early days of simple instructions to AI models. What began as trial-and-error experimentation has evolved into a systematic discipline with proven patterns and methodologies. In 2025, as language models become more sophisticated, understanding which prompt engineering patterns actually work is essential for anyone looking to leverage AI effectively.

The term "prompt engineering" itself might be slightly misleading—it's less about engineering in the traditional sense and more about communication design. Think of it as learning to speak the "language" that AI understands best, structuring your requests in ways that align with how these models process information and generate responses.

This guide focuses specifically on patterns that have demonstrated effectiveness through both research and practical application. We'll move beyond the basic "just ask clearly" advice to explore structured approaches that improve reasoning, reduce hallucinations, and produce more consistent, useful results with today's advanced models.

Why Prompt Patterns Matter More in 2025

Modern language models in 2025 have capabilities that earlier models didn't possess, particularly in areas of reasoning, following complex instructions, and maintaining context. However, these capabilities are often latent—they don't automatically manifest with simple prompts. The right patterns can unlock significantly better performance from the same underlying model.

Research from Anthropic's 2024 technical papers shows that well-structured prompts can improve performance on complex reasoning tasks by 40-60% compared to naive prompting approaches. Similarly, OpenAI's research on instruction following demonstrates that certain prompt structures lead to more reliable outputs, particularly for tasks requiring multiple steps or nuanced understanding.

Another critical development is the emergence of multimodal models that combine text, image, and sometimes audio processing. These models require specialized prompt patterns that differ from text-only approaches. A 2024 study from Google DeepMind found that users who employed structured prompting techniques with multimodal models achieved 73% better results on creative tasks compared to those using simple descriptive prompts.

Fundamental Principles Behind Effective Prompts

Before diving into specific patterns, it's important to understand the core principles that make prompts effective. These principles apply across most prompt engineering patterns and form the foundation for more advanced techniques.

Clarity and Specificity

The most fundamental principle is also the most often overlooked. Vague prompts produce vague results. A 2024 analysis by researchers at Stanford's Human-Centered AI Institute found that increasing prompt specificity improved output quality by an average of 58% across 15 different task types. Specificity doesn't mean length—it means precision in what you're asking for.

For example, instead of "Write about climate change," a more specific prompt would be: "Write a 300-word explanation of how ocean currents affect regional climate patterns, written for high school students, focusing on the North Atlantic Current's impact on European temperatures." This provides clear parameters about length, audience, focus, and specific elements to include.
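The specific prompt above can be produced from a small template so that length, audience, and focus are never left out. This is a minimal sketch; the function name `build_specific_prompt` and its parameters are illustrative assumptions, not part of any library.

```python
def build_specific_prompt(task: str, word_count: int, audience: str, focus: str) -> str:
    """Assemble a prompt that states length, audience, and focus explicitly."""
    return (
        f"Write a {word_count}-word {task}, "
        f"written for {audience}, "
        f"focusing on {focus}."
    )


# Hypothetical usage that reproduces the climate example from the text.
prompt = build_specific_prompt(
    task="explanation of how ocean currents affect regional climate patterns",
    word_count=300,
    audience="high school students",
    focus="the North Atlantic Current's impact on European temperatures",
)
print(prompt)
```

Making each parameter a required argument means a vague prompt fails at call time rather than at review time.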

Context Provision

Modern models have extensive context windows (often 128K tokens or more in 2025 models), but they don't automatically access relevant knowledge. Providing context within the prompt significantly improves performance. A technique called "context priming" involves including relevant information, definitions, or examples before the actual request.

Research from Cohere in late 2024 demonstrated that context-enhanced prompts improved factual accuracy on specialized topics by 47% compared to prompts without context. This is particularly important for domain-specific tasks where the model might not have extensive training data.
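Context priming amounts to placing reference material ahead of the request and marking where each part begins. The sketch below assumes a simple numbered layout; the helper name and the section labels are illustrative choices, and the sample facts are placeholders.

```python
def build_context_primed_prompt(context_items: list[str], request: str) -> str:
    """Put reference material before the request, with clear section labels."""
    numbered = "\n".join(
        f"[{i}] {item}" for i, item in enumerate(context_items, start=1)
    )
    return (
        "Use the reference material below when answering.\n\n"
        f"Reference material:\n{numbered}\n\n"
        f"Request: {request}"
    )


# Hypothetical usage with placeholder definitions.
primed = build_context_primed_prompt(
    [
        "Definition: 'churn' means a customer cancels within 30 days.",
        "Definition: 'active' means at least one login per week.",
    ],
    "Explain how churn and activity are related for a new analyst.",
)
print(primed)
```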

Role Assignment

One of the simplest yet most powerful techniques is asking the model to adopt a specific role or persona. This works because it provides implicit context about the type of response expected. For instance, prompting "You are an experienced software architect reviewing this code..." sets different expectations than a generic request for code review.

A 2025 study published in the Journal of Human-AI Interaction found that role-based prompting improved task completion rates by 52% for creative tasks and 38% for analytical tasks. The researchers hypothesized that a role steers the model toward the parts of its training distribution that match the persona, essentially telling it which subset of its knowledge to prioritize.
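In chat-style interfaces, a role is usually set in a separate leading message. The sketch below assumes the common "system" and "user" message layout; the exact field names your provider expects may differ, and `build_role_messages` is a hypothetical helper.

```python
def build_role_messages(role_description: str, user_request: str) -> list[dict[str, str]]:
    """Return a two-message conversation: the role first, then the request."""
    return [
        # Assumed convention: the role lives in a "system" message.
        {"role": "system", "content": f"You are {role_description}."},
        {"role": "user", "content": user_request},
    ]


messages = build_role_messages(
    "an experienced software architect reviewing this code",
    "Review the attached module for coupling and naming problems.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```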

The Chain-of-Thought Pattern: Still Relevant in 2025

Chain-of-Thought (CoT) prompting remains one of the most effective patterns for reasoning tasks, though its implementation has evolved. The basic principle—asking the model to "think step by step"—has been refined into more structured approaches.

The classic CoT approach simply appends "Let's think step by step" to a prompt. While this still works, research in 2024-2025 shows that more explicit structuring produces better results. A technique called "Guided Chain-of-Thought" breaks the reasoning process into predefined steps that match the problem structure.

For example, for a math word problem, a Guided CoT prompt might specify: "First, identify the quantities mentioned. Second, determine what operation is needed. Third, set up the equation. Fourth, solve step by step. Fifth, check your answer against the question." This structured approach reduces the chance of the model skipping steps or making logical leaps.

A 2025 paper from MIT's Computer Science and AI Laboratory demonstrated that Guided CoT improved accuracy on complex mathematical reasoning tasks by 28% compared to basic CoT, and by 62% compared to direct answering. The researchers noted that this pattern works particularly well with models that have strong instruction-following capabilities.
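The five-step math example above can be turned into a reusable Guided CoT template. This is a sketch under the assumption that a fixed, numbered step list suits the problem type; the names `GUIDED_STEPS` and `build_guided_cot_prompt` and the closing "Answer:" convention are illustrative.

```python
from typing import Sequence

# Steps taken from the math word problem example in the text.
GUIDED_STEPS = (
    "Identify the quantities mentioned.",
    "Determine what operation is needed.",
    "Set up the equation.",
    "Solve step by step.",
    "Check your answer against the question.",
)


def build_guided_cot_prompt(problem: str, steps: Sequence[str] = GUIDED_STEPS) -> str:
    """Number the reasoning steps and ask for a clearly marked final answer."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Problem: {problem}\n\n"
        "Work through the following steps in order, labeling each one:\n"
        f"{numbered}\n\n"
        "Finish with a line of the form 'Answer: <value>'."
    )


cot_prompt = build_guided_cot_prompt(
    "A shop sells 3 boxes of 4 pens and 2 loose pens. How many pens in total?"
)
print(cot_prompt)
```

Asking for a fixed final line makes the answer easy to extract programmatically, which matters once several completions are compared.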

Advanced Chain-of-Thought Variations

Several CoT variations, first described in 2022-2023, remain in common use:

- Self-Consistency CoT: Sample several independent reasoning paths for the same question, then keep the final answer that appears most often. This approach, popularized by Google researchers, can improve accuracy on complex problems by taking a majority vote across reasoning paths.

- Least-to-Most Prompting: Break complex problems into simpler subproblems, solve each, then combine solutions. This is particularly effective for multi-step problems where earlier steps inform later ones.

- Analogical CoT: Provide an example of a similar problem with its reasoning process, then ask the model to solve a new problem using analogous reasoning.
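The voting step in Self-Consistency CoT is simple to sketch once each completion ends with a marked answer. The code below assumes completions contain a line such as "Answer: 14"; the sample completions are hand-written stand-ins for model output, and the helper names are hypothetical.

```python
import re
from collections import Counter
from typing import Optional


def extract_answer(completion: str) -> Optional[str]:
    """Pull the text after 'Answer:' from one completion, if present."""
    match = re.search(r"Answer:\s*(.+)", completion)
    return match.group(1).strip() if match else None


def majority_answer(completions: list[str]) -> Optional[str]:
    """Return the most frequent extracted answer, or None if none were found."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Stand-ins for three sampled reasoning paths on the same question.
samples = [
    "3 boxes of 4 is 12, plus 2 loose is 14. Answer: 14",
    "4 + 4 + 4 = 12, then 12 + 2 = 14. Answer: 14",
    "3 times 4 is 12, minus 2 is 10. Answer: 10",
]
print(majority_answer(samples))  # prints 14
```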

Tree-of-Thoughts: Reasoning with Multiple Paths

The Tree-of-Thoughts (ToT) pattern represents one of the most significant advances in prompt engineering for complex reasoning tasks. Developed by researchers at Princeton and Google DeepMind in 2023 and refined throughout 2024, ToT frames reasoning as a search process through a tree of possible "thoughts" or reasoning steps.

Unlike Chain-of-Thought, which follows a single linear reasoning path, ToT explicitly considers multiple possible approaches at each step, evaluates them, and proceeds with the most promising ones. This is particularly valuable for problems where the solution isn't obvious or where there are multiple valid approaches.

The basic ToT pattern involves three components:

- Thought Generation: At each step, generate multiple possible next steps or approaches

- State Evaluation: Assess which thoughts are most promising

- Search Algorithm: Decide which paths to explore further (breadth-first, depth-first, or best-first)

Implementing ToT typically requires more complex prompting than basic CoT. A 2025 implementation might look like:

"For this problem, we'll explore multiple reasoning paths. First, generate three different approaches to solving this problem. For each approach, evaluate its potential effectiveness on a scale of 1-10. Then, select the approach with the highest potential and develop it further through three reasoning steps. At each step, again consider alternatives before proceeding."

A benchmark study from the University of Washington in late 2024 found that ToT prompting improved performance on planning and strategy problems by 71% compared to standard prompting, and by 33% compared to Chain-of-Thought. However, the researchers noted that ToT requires more tokens and computation time, making it best suited for complex problems where accuracy is critical.
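The three ToT components map onto a small search loop. The sketch below is a breadth-first variant that keeps only the best few states per level; `generate` and `evaluate` would each be a model call in practice, and here they are replaced by toy functions (an assumption made so the example runs on its own).

```python
from typing import Callable

State = tuple[int, ...]


def tree_of_thoughts(
    root: State,
    generate: Callable[[State], list[State]],
    evaluate: Callable[[State], float],
    beam_width: int = 2,
    depth: int = 3,
) -> State:
    """Breadth-first search keeping the `beam_width` best states per level."""
    frontier = [root]
    for _ in range(depth):
        # Thought generation: expand every state on the frontier.
        candidates = [child for state in frontier for child in generate(state)]
        if not candidates:
            break
        # State evaluation and search: keep only the most promising states.
        candidates.sort(key=evaluate, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=evaluate)


TARGET = 7  # toy goal: pick three numbers from {1, 2, 3} that sum to 7


def toy_generate(state: State) -> list[State]:
    return [state + (n,) for n in (1, 2, 3)]


def toy_evaluate(state: State) -> float:
    return -abs(TARGET - sum(state))


best = tree_of_thoughts((), toy_generate, toy_evaluate)
print(best, sum(best))
```

Each extra level multiplies the number of generation and evaluation calls, which is the token and latency cost the text warns about.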

The Constitutional AI Pattern: Aligning Outputs with Values

Constitutional AI prompting borrows from Anthropic's Constitutional AI approach, which was introduced as a training method. The prompt-level version provides the model with a set of principles or "constitution" to follow during response generation. This pattern has gained popularity in 2024-2025 as organizations seek more control over AI outputs, particularly for sensitive applications.

The constitutional pattern typically includes:

- A set of high-level principles (e.g., "Be helpful, harmless, and honest")

- More specific guidelines for particular domains

- Mechanisms for self-evaluation against these principles

For example, a constitutional prompt for medical information might include: "When providing health information, follow these principles: 1. Always emphasize that you are not a medical professional. 2. Prioritize safety - if there's any risk, recommend consulting a doctor. 3. Base information on widely accepted medical guidelines. 4. Acknowledge uncertainty when information is incomplete."

Research from the Partnership on AI in 2024 found that constitutional prompting reduced harmful outputs by 64% compared to standard safety fine-tuning alone. Interestingly, the research also found that constitutional prompts improved user trust scores by 41%, suggesting that users appreciate knowing the principles guiding the AI's responses.

Implementing Constitutional Patterns

Effective constitutional prompting requires careful design of principles. Some best practices that have emerged in 2025 include:

- Keep principles actionable: Instead of vague values, provide specific behavioral guidelines

- Balance comprehensiveness with clarity: Too many principles can confuse the model; too few leave gaps

- Include self-check mechanisms: Ask the model to evaluate its own response against the constitution

- Test with edge cases: Try the constitutional prompt with challenging scenarios to ensure robustness
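A constitutional prompt and its self-check can share one list of principles. The sketch below reuses the medical principles from the earlier example; the function names and the "PASS or FAIL" reply format are assumptions made for illustration.

```python
from typing import Sequence

# Principles taken from the medical information example in the text.
PRINCIPLES = (
    "Always emphasize that you are not a medical professional.",
    "Prioritize safety: if there is any risk, recommend consulting a doctor.",
    "Base information on widely accepted medical guidelines.",
    "Acknowledge uncertainty when information is incomplete.",
)


def _numbered(principles: Sequence[str]) -> str:
    return "\n".join(f"{i}. {p}" for i, p in enumerate(principles, start=1))


def build_constitutional_prompt(question: str, principles: Sequence[str] = PRINCIPLES) -> str:
    """Ask the question with the principles stated up front."""
    return (
        "When providing health information, follow these principles:\n"
        f"{_numbered(principles)}\n\n"
        f"Question: {question}"
    )


def build_self_check_prompt(draft: str, principles: Sequence[str] = PRINCIPLES) -> str:
    """Ask the model to grade a draft against each principle in turn."""
    return (
        "Evaluate the draft below against each principle. "
        "For each one, reply PASS or FAIL with a one-line reason.\n\n"
        f"Principles:\n{_numbered(principles)}\n\n"
        f"Draft: {draft}"
    )


ask = build_constitutional_prompt("Is it safe to take ibuprofen with coffee?")
check = build_self_check_prompt("<model draft goes here>")
print(ask)
print(check)
```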

Prompt Compression and Efficiency Patterns

As context windows have expanded in 2025 models, a counterintuitive trend has emerged: shorter, more efficient prompts often perform better than verbose ones for certain tasks. Prompt compression involves carefully crafting prompts to convey maximum information with minimum tokens.

Research from the Allen Institute for AI in late 2024 discovered an interesting phenomenon: beyond a certain point, additional prompt words provided diminishing returns and, in some cases, hurt performance. Their study found that optimal prompt lengths varied by task type but generally fell between 50 and 150 tokens for most instruction-following tasks.

Effective compression techniques include:

- Semantic density: Using precise terminology that conveys complex concepts efficiently

- Structured formatting: Using symbols, spacing, and formatting to convey structure without words

- Abstraction: Referencing known patterns or concepts rather than explaining them

- Eliminating redundancy: Removing unnecessary repetition or verbose explanations

A particularly effective pattern that emerged in 2024 is the "TL;DR prompt" approach, where complex instructions are followed by a concise summary that the model seems to weight heavily. For example: "[Detailed 200-word explanation of requirements] TL;DR: Create a project plan with 5 phases, weekly milestones, and risk assessments."

In performance testing by Microsoft Research, compressed prompts averaging 40% fewer tokens achieved equivalent or better results on 78% of tasks compared to verbose versions. The researchers hypothesized that shorter prompts might help the model maintain focus on core instructions rather than getting distracted by peripheral details.
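To compare a verbose prompt with a compressed one, it helps to measure both. The sketch below appends a TL;DR line and estimates the reduction using whitespace-separated words as a rough stand-in for tokens; real tokenizers count differently, so treat the numbers as approximate. All helper names are hypothetical.

```python
def with_tldr(detailed_instructions: str, summary: str) -> str:
    """Append a concise summary line after the detailed instructions."""
    return f"{detailed_instructions.strip()}\n\nTL;DR: {summary.strip()}"


def rough_token_estimate(text: str) -> int:
    """Rough proxy only: counts whitespace-separated words, not real tokens."""
    return len(text.split())


def reduction_percent(verbose: str, compressed: str) -> float:
    """Percentage by which the compressed prompt is shorter than the verbose one."""
    before = rough_token_estimate(verbose)
    after = rough_token_estimate(compressed)
    return 100 * (before - after) / before


verbose = "Please could you kindly create for me a project plan that has five phases in it"
compressed = "Create a 5-phase project plan"
print(reduction_percent(verbose, compressed))  # prints 68.75

print(with_tldr(verbose, "Create a project plan with 5 phases, weekly milestones, and risk assessments."))
```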

Multi-Agent Debate and Self-Correction Patterns

One of the most innovative patterns emerging in 2025 involves simulating multiple AI agents with different perspectives or roles debating a problem. This "multi-agent debate" pattern can significantly improve reasoning quality, particularly for complex problems with no single obvious approach.

The basic structure involves:

- Defining 2-4 different agent roles or perspectives

- Having each agent generate a solution or analysis

- Facilitating a debate where agents critique each other's approaches

- Synthesizing the best elements into a final answer

For example, for a business strategy question, you might create: "Agent A: A conservative risk-averse strategist. Agent B: An innovative disruptor. Agent C: A practical operations expert. Have each propose a strategy, then critique the others' approaches. Finally, synthesize the best elements into a balanced recommendation."

A 2025 study published in Nature's AI journal found that multi-agent debate patterns improved solution quality on complex problem-solving tasks by 89% compared to single-agent approaches. The researchers noted that this pattern seems to work by surfacing assumptions and alternative perspectives that a single reasoning path might miss.
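The four debate stages can be orchestrated with a handful of model calls. In the sketch below, `model` is any function that maps a prompt string to a response string; the `echo_model` stub stands in for a real model so the example runs offline, and the prompt wording is an illustrative assumption.

```python
from typing import Callable

ModelFn = Callable[[str], str]

# Personas taken from the business strategy example in the text.
AGENTS = {
    "Agent A": "a conservative, risk-averse strategist",
    "Agent B": "an innovative disruptor",
    "Agent C": "a practical operations expert",
}


def run_debate(question: str, agents: dict[str, str], model: ModelFn) -> str:
    # Round 1: each agent proposes a solution independently.
    proposals = {
        name: model(f"You are {persona}. Propose a strategy for: {question}")
        for name, persona in agents.items()
    }
    # Round 2: each agent critiques the other agents' proposals.
    critiques = {}
    for name, persona in agents.items():
        others = "\n".join(f"{n}: {p}" for n, p in proposals.items() if n != name)
        critiques[name] = model(f"You are {persona}. Critique these proposals:\n{others}")
    # Round 3: a final call synthesizes proposals and critiques.
    transcript = "\n".join(
        f"{n} proposed: {proposals[n]}\n{n} critiqued: {critiques[n]}" for n in agents
    )
    return model(
        "Synthesize the best elements into a balanced recommendation.\n" + transcript
    )


calls: list[str] = []


def echo_model(prompt: str) -> str:
    """Stub model: records the prompt and returns a numbered placeholder."""
    calls.append(prompt)
    return f"response {len(calls)}"


final = run_debate("Should we enter a new market?", AGENTS, echo_model)
print(len(calls), final)  # prints 7 response 7
```

With n agents this layout costs 2n + 1 model calls, so the 2-4 agent range in the text keeps the overhead manageable.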

Self-Correction Loops

Closely related to multi-agent patterns are self-correction loops, where the model critiques and improves its own output. This pattern typically involves:

- Generating an initial response

- Switching to a "critic" mode to identify weaknesses or errors

- Revising the response based on the critique

- Optionally iterating multiple times

Self-correction has proven particularly effective for technical writing, code generation, and analytical tasks where errors can be subtle. Research from Carnegie Mellon University in 2024 showed that three-iteration self-correction loops reduced factual errors in technical explanations by 76% compared to single-pass generation.
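The generate, critique, and revise steps form a short loop. The sketch below assumes the critic replies with a fixed marker when it finds nothing to fix; that marker, the prompt wording, and the scripted stub model are all illustrative assumptions.

```python
from typing import Callable


def self_correct(
    task: str,
    model: Callable[[str], str],
    iterations: int = 3,
    stop_marker: str = "NO ISSUES",
) -> str:
    """Draft once, then alternate critique and revision up to `iterations` times."""
    draft = model(f"Task: {task}")
    for _ in range(iterations):
        critique = model(
            "Act as a critic. List errors or weaknesses in this response, "
            f"or reply '{stop_marker}' if there are none.\n\n"
            f"Task: {task}\n\nResponse: {draft}"
        )
        if stop_marker in critique:
            break  # the critic found nothing further to fix
        draft = model(
            "Revise the response to address the critique.\n\n"
            f"Task: {task}\n\nResponse: {draft}\n\nCritique: {critique}"
        )
    return draft


# Scripted stub standing in for a model: draft, critique, revision, critique.
replies = iter(["draft v1", "Step 2 is wrong", "draft v2", "NO ISSUES"])


def scripted_model(prompt: str) -> str:
    return next(replies)


result = self_correct("Explain how TCP slow start works.", scripted_model)
print(result)  # prints draft v2
```

The iteration cap matters: without it, a critic that always finds something would keep the loop running and the token bill growing.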

Few-Shot and Example-Based Patterns

While few-shot prompting (providing examples in the prompt) has been a prompt engineering staple for years, 2025 has seen refinement in how examples are structured and presented. The key insight is that not all examples are equally helpful, and the relationship between examples matters.

Best practices for few-shot prompting in 2025 include:

- Diversity in examples: Show a range of approaches or styles rather than similar examples

- Progressive complexity: Start with simple examples, then show more complex ones

- Explicit pattern highlighting: Point out what makes each example effective

- Strategic ordering: Place the most relevant or highest-quality examples last (recency bias matters)

A particularly effective variation is "contrastive few-shot prompting," where you show both good and bad examples with explanations of why one works better. This helps the model understand not just what to do, but what to avoid.

Research from the University of California, Berkeley in 2024 found that contrastive few-shot prompting improved performance on creative tasks by 52% compared to standard few-shot approaches. The researchers suggested that negative examples help define the boundaries of acceptable outputs more clearly than positive examples alone.
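A contrastive few-shot prompt pairs each example with a label and a reason. The sketch below orders bad examples first and good examples last, following the recency advice earlier in this section; the `Example` class and the layout are hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class Example:
    text: str
    is_good: bool
    reason: str


def build_contrastive_prompt(task: str, examples: list[Example], new_input: str) -> str:
    """Show labeled good and bad examples, with the good ones placed last."""
    # sorted() is stable and False sorts before True, so bad examples come first.
    ordered = sorted(examples, key=lambda e: e.is_good)
    blocks = [
        f"{'Good' if e.is_good else 'Bad'} example: {e.text}\nWhy: {e.reason}"
        for e in ordered
    ]
    return f"Task: {task}\n\n" + "\n\n".join(blocks) + f"\n\nNow write one for: {new_input}"


contrastive = build_contrastive_prompt(
    "Write a product tagline.",
    [
        Example("Notes that find themselves.", True, "Concrete benefit, five words."),
        Example("The best app ever made!!!", False, "Generic claim, no benefit named."),
    ],
    "a budgeting app for students",
)
print(contrastive)
```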

Domain-Specific Pattern Libraries

One of the most practical developments in 2025 has been the emergence of domain-specific prompt pattern libraries. Rather than generic prompting advice, these libraries provide templates optimized for specific fields or tasks.

Software Development Patterns

For coding tasks, effective patterns in 2025 include:

- Test-Driven Prompting: Provide test cases first, then ask for code that passes them

- Architecture-First: Request high-level design before implementation details

- Debugging Context: Include error messages, stack traces, and environment details

- Refactoring Requests: Ask for specific improvements (readability, performance, security)

GitHub's 2024 analysis of effective Copilot prompts found that prompts mentioning specific frameworks or libraries produced 34% more usable code than generic language requests. They also found that providing context about the larger codebase (even just file names) improved relevance by 41%.
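Test-Driven Prompting can be sketched as a helper that lists the tests before the request and adds codebase context when available. The function name, the sample `slugify` tests, and the file path in the context string are hypothetical.

```python
def build_test_driven_prompt(
    function_name: str,
    test_cases: list[tuple[str, str]],
    context: str = "",
) -> str:
    """Present the tests first, then ask for a function that passes them."""
    tests = "\n".join(f"assert {call} == {expected}" for call, expected in test_cases)
    parts = []
    if context:
        # Even a file name or framework mention gives the model useful context.
        parts.append(f"Context: {context}")
    parts.append(f"Write a Python function named `{function_name}` that passes these tests:")
    parts.append(tests)
    parts.append("Return only the function definition.")
    return "\n\n".join(parts)


tdd_prompt = build_test_driven_prompt(
    "slugify",
    [
        ('slugify("Hello World")', '"hello-world"'),
        ('slugify("  A  B ")', '"a-b"'),
    ],
    context="Utility module utils/text.py in a Django project.",
)
print(tdd_prompt)
```

Because the tests are plain assertions, the same list can be run against the generated code to check the result.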

Content Creation Patterns

For writing and content generation, 2025 patterns emphasize structure and audience alignment:

- Brief-First: Provide a detailed creative brief before asking for content

- Tone Anchoring: Use examples of the desired tone rather than just describing it

- Structural Templates: Specify outline or format requirements

- Audience Persona: Create detailed reader profiles for targeting

A 2025 content marketing study found that prompts using audience personas produced content that scored 47% higher on relevance metrics compared to generic audience descriptions. The researchers attributed this to the model's ability to simulate writing for a specific person rather than a vague demographic.

Data Analysis Patterns

For analytical tasks, effective patterns focus on methodological transparency:

- Hypothesis Framing: State what you're testing before asking for analysis

- Methodology Specification: Specify analytical approaches or limitations

- Visualization Requests: Ask for specific chart types with rationale

- Assumption Checking: Request identification of underlying assumptions

Measuring Prompt Effectiveness

With multiple patterns available, how do you know which ones actually work for your specific use case? In 2025, systematic evaluation has become part of the prompt engineering workflow.

Key metrics for evaluating prompt patterns include:

- Task completion rate: Does the prompt consistently produce usable outputs?

- Quality consistency: How much does output quality vary between runs?

- Token efficiency: Are you achieving good results with reasonable token usage?

- Latency impact: Does the pattern significantly increase response time?

- Alignment with goals: Does the output match your specific requirements?

A/B testing different prompt patterns has become standard practice in organizations using AI extensively. Simple testing frameworks might compare 2-3 variations of a prompt on 10-20 representative tasks, scoring outputs against predefined criteria.

Emerging tools in 2025 automate much of this testing, providing metrics on prompt effectiveness across different models and use cases. These tools typically work by generating multiple variations of a prompt, testing them against benchmark tasks, and providing comparative analytics.
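A small A/B harness needs only prompt templates, a task list, and a scoring function. The sketch below reports the mean score and the spread across tasks for each variant; the stub model and the scoring rule are placeholders so the example runs without a real model.

```python
from statistics import mean, pstdev
from typing import Callable


def evaluate_variants(
    variants: dict[str, str],
    tasks: list[str],
    model: Callable[[str], str],
    score: Callable[[str, str], float],
) -> dict[str, dict[str, float]]:
    """Run every prompt variant on every task and summarize the scores."""
    results = {}
    for name, template in variants.items():
        scores = [score(task, model(template.format(task=task))) for task in tasks]
        # Mean approximates completion rate; spread approximates consistency.
        results[name] = {"mean": mean(scores), "spread": pstdev(scores)}
    return results


def stub_model(prompt: str) -> str:
    """Placeholder model: follows the answer format only when asked to."""
    return "Answer: 4" if "Answer:" in prompt else "4"


def has_answer_line(task: str, output: str) -> float:
    """Placeholder criterion: 1.0 if the output uses the required format."""
    return 1.0 if output.startswith("Answer:") else 0.0


variants = {
    "A": "{task}",
    "B": "{task}\nFinish with a line starting 'Answer:'.",
}
tasks = ["What is 2 + 2?", "What is 1 + 3?", "What is 8 / 2?"]
report = evaluate_variants(variants, tasks, stub_model, has_answer_line)
print(report)
```

With a real model, repeating each task several times would also expose run-to-run variation, which this single-pass sketch does not capture.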

Common Pitfalls and How to Avoid Them

Even with good patterns, prompt engineering can go wrong. Common pitfalls in 2025 include:

Over-Engineering

Some users create excessively complex prompts that confuse more than they help. The "prompt obesity" problem occurs when prompts become so detailed that the model struggles to identify the core request. A good rule of thumb: if your prompt needs a table of contents, it's probably too complex.

Pattern Misapplication

Using patterns in the wrong context reduces effectiveness. For example, Tree-of-Thoughts is excellent for complex reasoning but wasteful for simple fact retrieval. Understanding which pattern fits which problem type is crucial.

Ignoring Model Differences

Different models respond differently to the same patterns. What works brilliantly with GPT-4 might produce mediocre results with Claude or Gemini. Always test patterns with your specific model.

Neglecting Iteration

Effective prompt engineering is rarely a one-shot process. The best prompts emerge from testing and refinement. Document what works and build a personal or organizational library of effective patterns.

The Future of Prompt Engineering

As we look beyond 2025, several trends are likely to shape prompt engineering:

- Automated prompt optimization: Tools that automatically test and refine prompts

- Personalized pattern libraries: Systems that learn which patterns work best for individual users

- Cross-modal patterns: Unified approaches for text, image, audio, and video generation

- Explainable prompting: Systems that explain why certain patterns work better

- Collaborative prompt design: Platforms for sharing and refining patterns across organizations

Perhaps the most significant trend is the gradual shift from explicit prompt engineering to implicit pattern recognition by the models themselves. As models become better at understanding intent from natural language, the need for structured patterns may decrease—but for the foreseeable future, understanding and applying effective patterns remains a valuable skill.

Conclusion: Building Your Pattern Toolkit

Effective prompt engineering in 2025 is less about secret formulas and more about understanding which patterns work for which situations. The most successful users build a toolkit of patterns they can apply strategically based on the task at hand.

Start by mastering 2-3 core patterns that match your most common use cases. For most people, this would include a reasoning pattern (Chain-of-Thought or Tree-of-Thoughts), a quality assurance pattern (self-correction or constitutional), and a domain-specific pattern for your field. As you encounter new challenges, add new patterns to your toolkit.

Remember that the field continues to evolve. What works today might be obsolete in six months as models improve and new research emerges. The most valuable skill isn't memorizing specific patterns, but developing the ability to evaluate, adapt, and create patterns that solve your specific problems.

By understanding the principles behind effective prompts and building a repertoire of proven patterns, you can consistently get better results from AI systems, saving time, improving quality, and unlocking capabilities that simple prompting misses. The patterns that actually work in 2025 are those that respect both the capabilities and limitations of current models while strategically guiding them toward your goals.

Visuals Produced by AI
