Privacy-preserving Embeddings: Techniques and Risks

Privacy-preserving embeddings are transforming how AI systems handle sensitive data while maintaining utility. This comprehensive guide explores the fundamental techniques including differential privacy, federated learning, homomorphic encryption, and secure multi-party computation. We break down complex concepts into beginner-friendly explanations with practical analogies and real-world examples. Learn about the trade-offs between privacy and utility, implementation challenges, and regulatory considerations. The article provides actionable guidance for developers and organizations looking to implement privacy-preserving embeddings, along with detailed risk assessment of current techniques. Discover how to balance data protection with model performance in today's privacy-conscious AI landscape.

Introduction: The Privacy Paradox in AI Embeddings

In today's data-driven world, embeddings have become the fundamental building blocks of modern AI systems. These numerical representations capture the semantic meaning of words, images, and other data types, enabling machines to understand relationships and make intelligent decisions. However, as embeddings increasingly handle sensitive information—from personal messages to medical records—a critical challenge emerges: how do we maintain the utility of these powerful representations while protecting individual privacy?

Privacy-preserving embeddings represent a groundbreaking approach to this dilemma. Unlike traditional embeddings that may inadvertently reveal sensitive information about the original data, privacy-preserving techniques transform these representations to protect confidentiality while retaining their usefulness for downstream tasks. This isn't just about adding encryption layers; it's about fundamentally rethinking how we create, store, and use embeddings in AI systems.

The importance of this field has grown exponentially with increasing privacy regulations like GDPR, CCPA, and emerging AI-specific legislation. Organizations that fail to implement proper privacy measures in their embedding pipelines risk not only regulatory penalties but also loss of user trust and potential data breaches. This comprehensive guide will walk you through the key techniques, practical implementations, and critical risks associated with privacy-preserving embeddings.

Understanding Embeddings: The Foundation

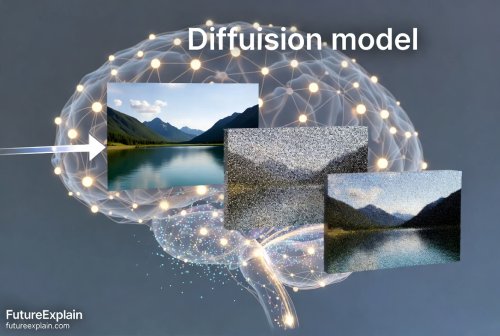

Before diving into privacy-preserving techniques, let's establish what embeddings are and why they need protection. In simple terms, an embedding is a mathematical representation that translates high-dimensional, complex data (like text or images) into a lower-dimensional numerical vector. These vectors capture semantic relationships—words with similar meanings end up close together in the embedding space.

Traditional embeddings, such as Word2Vec, GloVe, or BERT embeddings, work by analyzing patterns in large datasets. For example, a word embedding might place "doctor" and "nurse" close together because they frequently appear in similar contexts. The problem emerges when these embeddings are created from sensitive data: they can inadvertently encode private information that might be reconstructed or inferred.

Research has shown that embeddings can be surprisingly revealing. Studies have demonstrated that given enough embedding vectors and some auxiliary information, attackers can sometimes reconstruct original text or infer sensitive attributes about individuals. This vulnerability creates what security experts call an "attack surface"—opportunities for malicious actors to extract private information from what seems like anonymous numerical data.

Core Techniques for Privacy-Preserving Embeddings

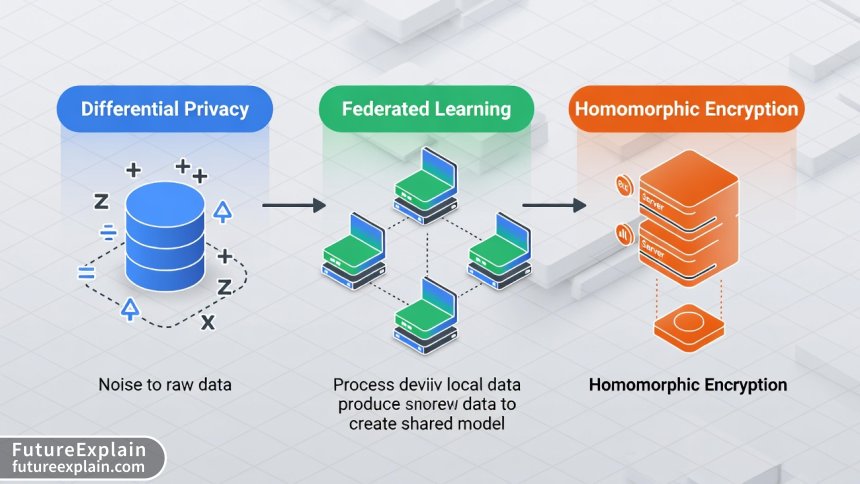

Differential Privacy: Adding Mathematical Noise

Differential privacy has emerged as one of the most rigorous mathematical frameworks for privacy protection. At its core, differential privacy ensures that the inclusion or exclusion of any single individual's data doesn't significantly affect the output of an analysis. For embeddings, this means adding carefully calibrated noise during the training process or to the resulting embedding vectors themselves.

The implementation involves two key parameters: epsilon (ε) and delta (δ). Epsilon controls the privacy budget—lower values mean stronger privacy guarantees but potentially reduced utility. Delta represents the probability of privacy failure. Finding the right balance between these parameters is both an art and a science, requiring careful consideration of your specific use case and privacy requirements.

Practical implementation of differentially private embeddings typically involves:

- Adding Gaussian or Laplace noise to gradients during training

- Clipping gradient norms to bound sensitivity

- Carefully tracking and managing the privacy budget across multiple queries

- Using privacy amplification techniques like sub-sampling

Major tech companies including Google, Apple, and Microsoft have adopted differential privacy for various applications, proving its scalability and effectiveness in real-world scenarios.

Federated Learning: Training Without Centralized Data

Federated learning takes a fundamentally different approach to privacy preservation. Instead of collecting all data in a central location for training, the model (including embeddings) is trained across multiple devices or servers, with only model updates—never raw data—being shared with a central coordinator.

For embeddings, federated learning enables creating representations that benefit from diverse data sources without actually seeing the individual data points. This is particularly valuable for applications like keyboard prediction, healthcare analytics, or financial services where data cannot leave local devices due to privacy regulations or organizational policies.

The federated learning process for embeddings involves:

- Initializing a global embedding model on a central server

- Distributing the model to participating devices

- Training locally on each device using local data

- Sending only model updates (not data) back to the server

- Aggregating updates using techniques like Federated Averaging

- Iterating until the model converges

Recent advances in federated learning have addressed challenges like statistical heterogeneity (non-IID data across devices) and communication efficiency, making it increasingly practical for embedding generation at scale.

Visuals Produced by AI

Homomorphic Encryption: Computation on Encrypted Data

Homomorphic encryption represents perhaps the most mathematically elegant approach to privacy-preserving computation. This technique allows computations to be performed directly on encrypted data, producing an encrypted result that, when decrypted, matches the result of operations performed on the plaintext.

For embeddings, homomorphic encryption enables scenarios where sensitive data remains encrypted throughout the entire pipeline—during embedding generation, storage, and even when using embeddings for downstream tasks like similarity search or classification. The data owner maintains control of the encryption keys, ensuring that service providers never access the plaintext data.

Current implementations typically use schemes like:

- BFV (Brakerski-Fan-Vercauteren) for integer arithmetic

- CKKS (Cheon-Kim-Kim-Song) for approximate arithmetic on real numbers

- BGV (Brakerski-Gentry-Vaikuntanathan) for leveled homomorphic encryption

While homomorphic encryption provides strong theoretical guarantees, practical implementation faces challenges including computational overhead (operations can be 1000x slower than plaintext), ciphertext expansion (encrypted data is much larger), and complexity of implementation. Recent hardware accelerators and algorithmic improvements are gradually making this technique more practical for real-world embedding applications.

Secure Multi-Party Computation (MPC)

Secure Multi-Party Computation enables multiple parties to jointly compute a function over their inputs while keeping those inputs private. For embeddings, MPC allows different organizations to collaboratively train embedding models on their combined datasets without revealing their individual data to each other.

The classic example is the "Millionaire's Problem": two millionaires want to know who is richer without revealing their actual wealth. MPC protocols solve this through cryptographic techniques that distribute the computation across parties. For embeddings, this enables privacy-preserving collaborative AI while maintaining data sovereignty.

Common MPC techniques applied to embeddings include:

- Garbled circuits for secure function evaluation

- Secret sharing to distribute data across parties

- Oblivious transfer for private information retrieval

- Zero-knowledge proofs for verification without revelation

MPC is particularly valuable for cross-organizational collaborations where legal or competitive concerns prevent data sharing, such as in healthcare research, financial fraud detection, or supply chain optimization.

Hybrid Approaches: Combining Techniques for Optimal Results

In practice, the most effective privacy-preserving embedding systems often combine multiple techniques to balance privacy, utility, and efficiency. These hybrid approaches leverage the strengths of different methods while mitigating their individual weaknesses.

Some common hybrid patterns include:

- Federated Learning + Differential Privacy: Adding noise to local model updates before aggregation provides an additional privacy guarantee against curious servers or other participants.

- Homomorphic Encryption + Secure MPC: Using homomorphic encryption for certain operations within a larger MPC protocol can improve efficiency for specific computation patterns.

- Differential Privacy + Synthetic Data: Generating differentially private synthetic data that can then be used to train embeddings without privacy concerns.

The choice of hybrid approach depends on your specific threat model, performance requirements, and regulatory environment. For example, healthcare applications might prioritize strong cryptographic guarantees (homomorphic encryption) while consumer applications might focus on scalability (federated learning with differential privacy).

Implementation Considerations and Best Practices

Assessing Your Privacy Requirements

Before implementing any privacy-preserving technique, you must clearly define your privacy requirements. This involves:

- Identifying what constitutes sensitive information in your context

- Understanding regulatory requirements (GDPR, HIPAA, etc.)

- Defining your threat model: who are potential attackers and what are their capabilities?

- Establishing clear privacy budgets and risk tolerance levels

Different applications have vastly different privacy needs. A movie recommendation system has different requirements than a mental health chatbot. Documenting these requirements upfront will guide your technical choices and implementation strategy.

Performance Trade-offs and Optimization

All privacy-preserving techniques involve trade-offs between privacy, utility, and performance. Understanding these trade-offs is crucial for practical implementation:

- Differential Privacy: Privacy budget (ε) inversely correlates with model accuracy. Careful noise calibration and privacy accounting are essential.

- Federated Learning: Communication overhead and statistical heterogeneity can impact convergence. Techniques like client selection and adaptive optimization help mitigate these issues.

- Homomorphic Encryption: Computational overhead and ciphertext expansion require specialized hardware or optimized algorithms. Recent advances in GPU acceleration and specialized hardware (like Intel's HE-accelerator) are improving practical performance.

- Secure MPC: Communication complexity grows with the number of parties and complexity of computation. Protocol selection and network optimization are critical.

Tooling and Frameworks

The ecosystem for privacy-preserving embeddings has matured significantly in recent years. Key tools and frameworks include:

- TensorFlow Privacy: Library for implementing differential privacy in TensorFlow models

- PySyft/PyGrid: Frameworks for federated learning and secure computation

- Microsoft SEAL: Library for homomorphic encryption implementations

- OpenMined: Community and tools for privacy-preserving machine learning

- IBM Federated Learning: Enterprise-grade federated learning platform

- TF Encrypted: Framework for privacy-preserving machine learning in TensorFlow

Choosing the right tools depends on your technical stack, team expertise, and specific requirements. Many organizations start with simpler approaches (like differential privacy) and gradually incorporate more complex techniques as their needs evolve.

Visuals Produced by AI

Critical Risks and Limitations

Privacy-Utility Trade-off: The Fundamental Challenge

The most fundamental risk in privacy-preserving embeddings is the inevitable trade-off between privacy protection and model utility. As you increase privacy guarantees, you typically decrease the accuracy and usefulness of the resulting embeddings. This isn't a technical limitation but a mathematical reality—strong privacy necessarily involves losing some information about individual data points.

Managing this trade-off requires:

- Careful calibration of privacy parameters based on specific use cases

- Establishing clear thresholds for acceptable utility loss

- Developing metrics to measure both privacy guarantees and utility preservation

- Creating fallback mechanisms for when privacy constraints make embeddings unusable for certain tasks

Implementation Vulnerabilities

Even theoretically sound privacy-preserving techniques can fail due to implementation errors. Common vulnerabilities include:

- Side-channel attacks: Exploiting timing, power consumption, or memory usage patterns to infer sensitive information

- Model inversion attacks: Reconstructing training data from model parameters or embeddings

- Membership inference attacks: Determining whether specific data points were in the training set

- Reconstruction attacks: Attempting to reconstruct original data from embeddings or model outputs

These attacks have been demonstrated against various privacy-preserving systems, highlighting the importance of defense-in-depth strategies and regular security audits.

Regulatory and Compliance Risks

Privacy regulations are evolving rapidly, and techniques that are compliant today might not meet future requirements. Key regulatory risks include:

- Changing legal interpretations: Regulatory bodies may update their guidance on what constitutes adequate privacy protection

- Cross-border data transfers: Privacy-preserving techniques might not satisfy requirements for international data transfers

- Right to explanation: Some techniques (like certain homomorphic encryption schemes) might make it difficult to provide explanations required by regulations like GDPR

- Certification challenges: Proving compliance with privacy certifications can be technically complex for advanced privacy-preserving systems

Emerging Attack Vectors

As privacy-preserving techniques become more widespread, attackers develop increasingly sophisticated methods to bypass protections. Emerging threats include:

- Adaptive attacks: Attacks that learn and adapt to specific privacy mechanisms

- Composite attacks: Combining multiple attack vectors to overcome layered defenses

- Hardware-based attacks: Exploiting vulnerabilities in specialized privacy-preserving hardware

- Protocol-level attacks: Targeting weaknesses in communication protocols rather than the cryptographic primitives themselves

Staying ahead of these threats requires continuous monitoring of the security research landscape and proactive updates to defense mechanisms.

Case Studies: Real-World Applications

Healthcare: Medical Record Embeddings

In healthcare, embeddings are used to represent medical concepts, patient records, and clinical notes. Privacy-preserving techniques enable collaborative research across institutions while protecting patient confidentiality. For example, the NIH's All of Us research program uses federated learning with differential privacy to analyze health data across multiple medical centers without centralizing sensitive patient information.

Key implementation details include:

- Using federated learning to train embeddings on local EHR systems

- Applying differential privacy to aggregate updates

- Implementing secure multi-party computation for specific cross-institutional queries

- Regular privacy audits and penetration testing

Finance: Fraud Detection Systems

Financial institutions use embeddings to represent transaction patterns, customer behavior, and risk factors. Privacy-preserving techniques allow banks to collaborate on fraud detection without sharing sensitive customer data. Major payment processors have implemented federated learning systems that train embedding models across multiple banks to identify emerging fraud patterns while maintaining data sovereignty.

The financial case study highlights:

- The use of homomorphic encryption for sensitive computations

- Secure multi-party computation for collaborative model training

- Differential privacy for published risk scores and fraud indicators

- Regulatory compliance with financial privacy regulations

Natural Language Processing: Privacy-Preserving Language Models

Large language models rely heavily on embeddings, but training data often contains sensitive information. Privacy-preserving techniques enable the development of language models that respect user privacy. Apple's implementation of differential privacy for keyboard suggestions represents a successful large-scale deployment, protecting user typing patterns while maintaining useful autocorrect and prediction features.

This application demonstrates:

- Practical implementation of local differential privacy

- Privacy-utility trade-offs in consumer applications

- Scalability challenges and solutions

- User experience considerations

Future Directions and Emerging Trends

Hardware Acceleration for Privacy-Preserving Computation

Specialized hardware is emerging to accelerate privacy-preserving computations. Intel's Software Guard Extensions (SGX), Google's Titan chips, and various academic prototypes demonstrate how hardware can enhance both performance and security for privacy-preserving embeddings. These developments promise to reduce the performance overhead that currently limits widespread adoption.

Quantum-Resistant Privacy Techniques

With quantum computing on the horizon, researchers are developing quantum-resistant privacy-preserving techniques. Post-quantum cryptography standards are being integrated into privacy-preserving frameworks, ensuring that today's implementations remain secure in a future with quantum computers.

Automated Privacy Parameter Tuning

Machine learning approaches to automatically tune privacy parameters are emerging. These systems use reinforcement learning or Bayesian optimization to find optimal trade-offs between privacy and utility for specific tasks and datasets, reducing the expertise required for implementation.

Standardization and Certification

Industry groups and standards bodies are working to establish benchmarks, certifications, and best practices for privacy-preserving AI. These efforts will make it easier for organizations to select appropriate techniques and prove compliance with regulatory requirements.

Getting Started: Practical Recommendations

For organizations beginning their journey with privacy-preserving embeddings, we recommend:

- Start with a clear assessment: Document your specific privacy requirements, threat model, and regulatory constraints before selecting techniques.

- Begin with simpler approaches: Differential privacy is often the most accessible starting point, with mature tooling and relatively straightforward implementation.

- Conduct pilot projects: Test privacy-preserving techniques on non-critical applications before scaling to sensitive use cases.

- Engage with the community: The privacy-preserving machine learning community is active and collaborative. Open-source projects and research papers provide valuable resources.

- Plan for evolution: Privacy techniques and regulations will continue to evolve. Design your systems with flexibility and upgradability in mind.

- Consider hybrid approaches: Most real-world applications benefit from combining multiple techniques to address different aspects of privacy protection.

- Implement defense in depth: Layer multiple privacy protections rather than relying on a single technique.

- Regularly audit and update: Continuously monitor for new vulnerabilities and update your implementations accordingly.

Conclusion: Balancing Innovation and Protection

Privacy-preserving embeddings represent a crucial evolution in responsible AI development. As embeddings become increasingly central to AI systems across industries, the techniques discussed in this article provide pathways to harness the power of data while respecting individual privacy rights.

The field continues to advance rapidly, with new techniques, improved implementations, and better understanding of risks emerging regularly. Success requires balancing multiple considerations: mathematical privacy guarantees, practical utility, performance requirements, regulatory compliance, and user trust.

Organizations that invest in privacy-preserving embeddings today position themselves not only for regulatory compliance but also for sustainable innovation. As users become more privacy-conscious and regulations more stringent, privacy-preserving techniques will transition from optional enhancements to fundamental requirements for ethical AI systems.

The journey toward fully privacy-preserving AI is ongoing, but the tools and techniques for privacy-preserving embeddings provide a solid foundation. By implementing these approaches thoughtfully and continuously, we can build AI systems that are both powerful and respectful of the privacy rights that form the bedrock of trust in the digital age.

Further Reading

Share

What's Your Reaction?

Like

15210

Like

15210

Dislike

185

Dislike

185

Love

1234

Love

1234

Funny

456

Funny

456

Angry

92

Angry

92

Sad

65

Sad

65

Wow

1190

Wow

1190

Excellent article! The clear explanations make complex topics accessible. I especially appreciated the analogies in the introduction - they really helped me understand why embeddings need privacy protection in the first place.

I've been working with embeddings for NLP applications and the privacy concerns have been worrying me. This article gives me concrete steps to address those concerns. The hybrid approaches section gave me several ideas for our next project iteration.

Thank you for this comprehensive guide. The section on implementation vulnerabilities was particularly important - it's easy to think that once you've implemented a privacy technique, you're done. Security is an ongoing process.

This article perfectly explains why our organization needs to prioritize privacy-preserving techniques. The regulatory risks alone are compelling, but the ethical imperative is even stronger. Sharing this with my entire team!

The tooling section was super helpful! We're evaluating different frameworks for our project and this gave us a great starting point. Has anyone here used PySyft in production? I'd love to hear about real-world experiences.

Very informative article! I'm wondering about the future directions section - how soon do you think quantum-resistant techniques will become necessary for production systems?

Good question, Olga! While large-scale quantum computers that can break current encryption are likely still years away, the 'harvest now, decrypt later' threat is real. Sensitive data with long-term value should already be considering post-quantum cryptography. NIST is finalizing standards, and we expect to see adoption in critical systems within 2-3 years.