Open-Source LLMs Compared: Which to Use and When

This comprehensive guide compares the leading open-source large language models available in 2025, helping you make informed decisions about which model to use for different applications. We analyze Llama 3, Mistral AI models, Google's Gemma, Microsoft's Phi series, and other emerging options across key dimensions: performance, hardware requirements, licensing, fine-tuning capabilities, and real-world deployment considerations. You'll learn practical decision frameworks, cost analysis for various deployment scenarios, and implementation checklists for different use cases including chat applications, coding assistants, content generation, and specialized business applications. The guide also covers licensing considerations for commercial use and provides troubleshooting guidance for common deployment challenges.

Open-Source LLMs Compared: Which to Use and When

The landscape of open-source large language models (LLMs) has exploded in recent years, creating both opportunities and confusion for developers, businesses, and researchers. With dozens of models available—each claiming unique advantages—making the right choice can feel overwhelming. This comprehensive guide cuts through the noise to provide clear, practical comparisons of the leading open-source LLMs in 2025, complete with decision frameworks to help you select the right model for your specific needs.

Unlike proprietary models locked behind API walls, open-source LLMs offer transparency, customization, and cost control. However, their diversity comes with complexity: different architectures, licensing terms, hardware requirements, and performance characteristics mean that no single model is "best" for all situations. The right choice depends entirely on your use case, resources, and technical constraints.

The Open-Source LLM Ecosystem in 2025

The open-source LLM space has matured significantly since the early days of GPT-2's release. Today's ecosystem features models specialized for different domains, ranging from general-purpose chatbots to code-generation specialists and multilingual processors. The major players have consolidated around a few key families, each with distinct characteristics and strategic directions.

Meta's Llama series continues to be a dominant force, with Llama 3 representing their latest generation. Mistral AI has emerged as a formidable European competitor, focusing on efficient architectures and permissive licensing. Google's Gemma models provide enterprise-friendly options with strong safety features, while Microsoft's Phi series targets educational and research applications with small but capable models. Various community-driven projects and research institutions contribute specialized models for niche applications.

Key Comparison Dimensions

Before diving into specific models, it's essential to understand the key dimensions along which LLMs differ. These factors will form the basis of our comparison framework:

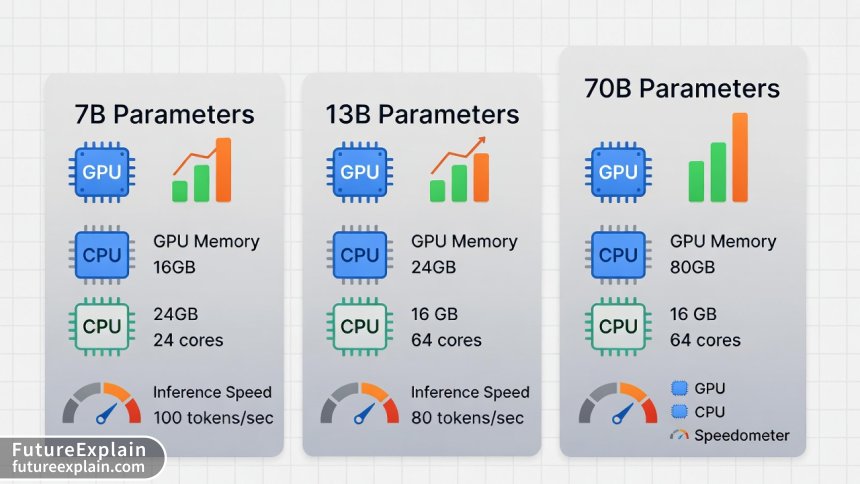

- Model Size and Architecture: Parameter count (7B, 13B, 70B, etc.), attention mechanisms, and overall design

- Performance Metrics: Benchmarks for reasoning, coding, mathematics, and language understanding

- Hardware Requirements: GPU memory, CPU, and RAM needed for inference and training

- Licensing and Commercial Use: Usage restrictions, attribution requirements, and commercial permissions

- Fine-tuning Capabilities: Support for different fine-tuning methods and available adapters

- Inference Speed and Cost: Tokens per second, latency, and computational costs

- Specialized Capabilities: Coding, multilingual processing, mathematical reasoning, etc.

Major Model Families Detailed Comparison

Meta Llama 3 Series

The Llama 3 family represents Meta's third-generation open LLMs, featuring significant improvements in reasoning, coding, and instruction-following capabilities. Available in 8B and 70B parameter versions (with a 400B variant rumored), Llama 3 models use Grouped Query Attention (GQA) for efficient inference and have a 128K token context window.

When to use Llama 3:

- General-purpose chatbot applications requiring strong reasoning

- Enterprise deployments needing stable, well-supported models

- Research projects benefiting from extensive documentation and community

- Applications requiring strong coding assistance alongside general chat

When to avoid Llama 3:

- Resource-constrained environments (the 70B model requires significant GPU memory)

- Applications requiring extremely fast inference on consumer hardware

- Projects with strict requirements for fully permissive licensing (Llama 3 has some use restrictions)

Licensing Considerations: Llama 3 uses Meta's custom license which allows commercial use but prohibits certain applications (large-scale model training, competing products, certain high-risk uses). For most business applications, it's permissible, but review the license carefully if you're building a competing AI service or have specific compliance requirements.

Hardware Requirements: The 8B model runs comfortably on a single 16GB GPU with 4-bit quantization, while the 70B model requires multiple high-end GPUs or significant quantization for practical deployment. Inference speed on the 8B model averages 30-50 tokens/second on an RTX 4090.

Mistral AI Models

Mistral AI has disrupted the market with highly efficient models that often outperform larger competitors. Their flagship Mixtral 8x7B uses a mixture-of-experts architecture that activates only a subset of parameters per token, achieving 70B-class performance with 13B-level resource requirements.

When to use Mistral models:

- Applications requiring strong performance on limited hardware

- European deployments or projects with data sovereignty requirements

- Real-time applications needing fast inference speeds

- Projects benefiting from Apache 2.0 licensing (most permissive option)

When to avoid Mistral models:

- Applications requiring extremely large context windows (standard is 32K)

- Projects needing extensive fine-tuning documentation and community examples

- Enterprise environments requiring vendor support contracts

Specialized Variants: Mistral offers several specialized models including Codestral (for coding) and a newly released multilingual variant. These maintain the efficiency advantages while excelling in specific domains.

Google Gemma Series

Google's Gemma models represent their entry into the open-weight LLM space, derived from the same technology as Gemini but with different training approaches. Available in 2B, 7B, and 27B sizes, Gemma models emphasize safety, responsibility, and ease of deployment through integration with Google Cloud services.

When to use Gemma:

- Enterprise applications requiring strong safety and responsibility features

- Google Cloud deployments with tight integration requirements

- Educational applications needing well-documented responsible AI practices

- Applications benefiting from Google's research on model safety and alignment

When to avoid Gemma:

- Projects requiring the absolute highest performance on standard benchmarks

- Applications needing extensive fine-tuning flexibility (more restricted than alternatives)

- Environments without Google Cloud infrastructure or preference

Safety Features: Gemma includes built-in safety filters, detailed usage guidelines, and extensive documentation on responsible deployment—making it particularly suitable for applications in regulated industries or public-facing services.

Microsoft Phi Series

Microsoft's Phi models take a different approach: smaller models (1.3B to 3.8B parameters) trained on high-quality "textbook-quality" data. The philosophy is that smaller models trained on excellent data can outperform larger models trained on lower-quality data for many practical applications.

When to use Phi models:

- Mobile and edge device deployments with strict resource constraints

- Educational and research applications where model simplicity is valuable

- Applications requiring extremely fast inference on CPU-only hardware

- Projects benefiting from MIT licensing (extremely permissive)

When to avoid Phi models:

- Applications requiring complex reasoning or advanced coding assistance

- Chat applications needing strong conversational abilities

- Projects where maximum performance outweighs efficiency concerns

Specialized and Niche Models

Coding-Focused Models

Several models specialize in code generation and understanding:

- CodeLlama: Meta's code-specialized Llama variant with support for multiple programming languages

- WizardCoder: Fine-tuned models excelling at instruction-following for coding tasks

- DeepSeek-Coder: Strong performance on coding benchmarks with good multilingual support

For coding applications, consider your primary programming languages,是否需要集成开发 environment features, and whether you need only code generation or also code explanation and debugging assistance.

Multilingual Models

While most major models support multiple languages, some specialize in non-English applications:

- BLOOM and BLOOMZ: 176B parameter model trained on 46 natural languages and 13 programming languages

- XGLM: Microsoft's multilingual series with strong performance across 30+ languages

- Aya: Covering 101 languages with emphasis on under-resourced languages

Small but Capable Models

For resource-constrained environments, several small models deliver impressive performance:

- Qwen-1.8B: Alibaba's tiny but capable model running on almost any hardware

- TinyLlama: Community project demonstrating what's possible with 1.1B parameters

- StableLM-Zephyr: Stability AI's efficient 3B parameter model

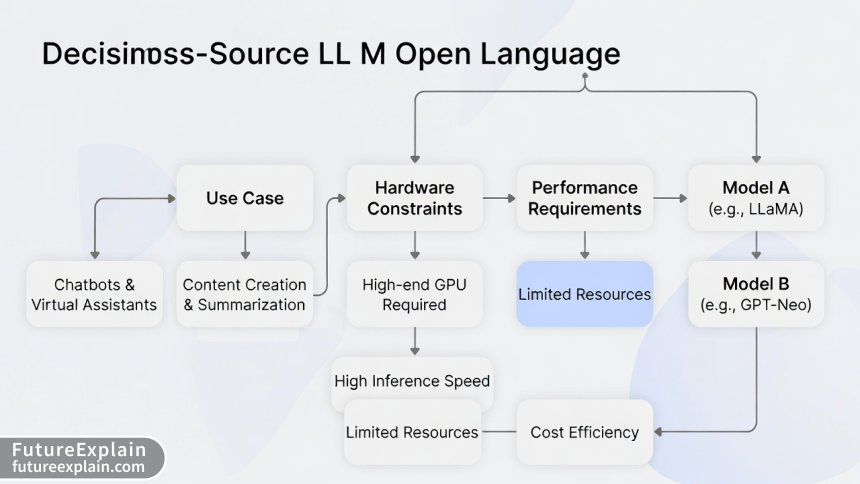

Decision Framework: Which Model When?

Choosing the right model requires considering multiple factors simultaneously. Here's a practical decision framework:

Step 1: Define Your Primary Use Case

Different models excel at different tasks. First categorize your application:

- General Chat/Assistant: Llama 3 8B, Mistral 7B, Gemma 7B

- Coding/Development: CodeLlama 34B, DeepSeek-Coder 33B, WizardCoder 34B

- Content Generation: Llama 3 70B (for quality), Mixtral 8x7B (for efficiency)

- Research/Experimentation: Phi-2 (for simplicity), Llama 3 (for comparability)

- Edge/Mobile Deployment: Phi-2, Qwen-1.8B, TinyLlama

- Enterprise/Production: Llama 3 70B, Gemma 27B, Mixtral 8x7B

Step 2: Assess Your Hardware Constraints

Your available hardware dramatically narrows the options:

Consumer GPU (8-16GB VRAM):

- 7B-8B models with 4-bit quantization: All run comfortably

- 13B models with aggressive quantization: Possible but slower

- 70B models: Not feasible without external services

Single High-End GPU (24GB VRAM):

- 13B models at 8-bit: Good performance

- 34B models with 4-bit quantization: Possible

- Mixtral 8x7B: Runs well with quantization

Multiple GPUs or Cloud Instances:

- 70B models: Feasible with model parallelism

- Mixture-of-experts models: Efficient scaling

- Multiple concurrent models: For A/B testing

Step 3: Consider Licensing Requirements

Licensing can be a deciding factor for commercial projects:

- Most Permissive (Apache 2.0/MIT): Mistral models, Phi series, many community models

- Commercial with Restrictions: Llama 3 (Meta license), Gemma (Google license)

- Research-Only: Some academic models, check carefully

- Special Requirements: Some models restrict certain applications (competing services, military use, etc.)

Step 4: Evaluate Performance Needs

Benchmark scores tell part of the story, but real-world performance matters more:

- Reasoning Tasks: MMLU, HellaSwag benchmarks

- Coding Ability: HumanEval, MBPP scores

- Multilingual: MGSM, XCOPA for non-English

- Real-world Testing: Try your actual prompts with different models

Implementation Considerations

Deployment Options

How you deploy the model affects which one to choose:

Local Deployment:

- Requires managing hardware, drivers, and optimization

- Best for: Data privacy, cost control, offline operation

- Recommended models: Smaller models (7B-13B) or efficiently architected models

Cloud Hosting:

- Services like Replicate, Together AI, Hugging Face Inference Endpoints

- Best for: Scalability, no hardware management, experimentation

- Recommended models: Any model supported by your provider

Hybrid Approaches:

- Local for privacy-sensitive parts, cloud for heavy lifting

- Best for: Balancing cost, privacy, and performance

Fine-tuning Strategies

Most models benefit from fine-tuning for specific applications:

Full Fine-tuning: Resource-intensive but most effective for major domain shifts

LoRA/QLoRA: Efficient fine-tuning that adds small adapters, preserving base model

Prompt Tuning: Learning continuous prompt embeddings, extremely efficient

Consider which models have extensive fine-tuning support and community recipes for your use case.

Cost Analysis and ROI Considerations

Total cost of ownership varies significantly between models and deployment strategies:

Hardware Costs

- Consumer GPU setup: $1,500-$3,000 upfront

- Cloud GPU instances: $0.50-$4.00/hour depending on model size

- Optimization savings: Efficient models can reduce hardware costs by 50-70%

Inference Cost per 1K Tokens

- 7B models (local): $0.0001-$0.0005 (electricity cost)

- 7B models (cloud): $0.0003-$0.0010

- 70B models (cloud): $0.003-$0.010

- Mixtral 8x7B: $0.0015-$0.0040 (70B-class performance at lower cost)

Development and Maintenance

- Model switching costs if you need to change later

- Fine-tuning and optimization time

- Monitoring and updating as new versions release

Performance Benchmarks: Beyond the Numbers

While benchmark scores provide helpful comparisons, they don't tell the whole story. Consider these real-world performance factors:

Inference Speed vs. Quality Trade-off

Smaller models generate tokens faster but may require more tokens to accomplish the same task. Measure effective throughput (quality-adjusted tokens per second) rather than raw token generation speed.

Context Window Utilization

Models with larger context windows (like Llama 3's 128K) can process more information but often struggle with accuracy in the middle of long contexts. Test your specific use case with different context lengths.

Specialized Task Performance

Some models excel at specific tasks despite mediocre benchmark averages. For example, certain smaller models perform exceptionally well on classification tasks even if they struggle with creative writing.

Case Studies: Real-World Selections

Case Study 1: Startup Building a Writing Assistant

Requirements: High-quality content generation, affordable cloud hosting, commercial licensing

Selection: Started with Mixtral 8x7B for its balance of quality and efficiency, later tested Llama 3 70B for premium tier

Result: Mixtral provided 85% of Llama 3's quality at 40% of the cost for their specific writing tasks

Case Study 2: Enterprise Internal Knowledge Assistant

Requirements: Data privacy (on-premises deployment), integration with existing systems, strong document understanding

Selection: Llama 3 8B fine-tuned with QLoRA on internal documents

Result: Successfully deployed on existing GPU servers, 90% accuracy on internal Q&A tasks

Case Study 3: Educational Coding Platform

Requirements: Multiple programming languages, student-friendly explanations, free tier available

Selection: CodeLlama 7B for basic tier, WizardCoder 34B for premium features

Result: Balanced cost and performance across different student skill levels

Future-Proofing Your Choice

The LLM landscape evolves rapidly. Strategies to future-proof your model selection:

- Choose models with active development communities rather than one-off releases

- Prefer standardized formats (GGUF, Safetensors) over proprietary ones

- Architect for model swapping with abstraction layers in your code

- Monitor emerging trends like mixture-of-experts, MoE-dense hybrids, and new attention mechanisms

Troubleshooting Common Issues

Out-of-Memory Errors

Even with quantization, some models exceed available memory:

- Solution: Try a smaller model or more aggressive quantization

- Alternative: Use model offloading techniques or CPU+GPU hybrid inference

- Prevention: Calculate memory requirements before deployment (rule of thumb: 2GB per 1B parameters for 16-bit, 0.5GB for 4-bit)

Slow Inference Speed

Models running slower than expected:

- Check: GPU utilization, memory bandwidth bottlenecks, CPU-GPU transfer overhead

- Optimize: Batch sizes, context length, attention implementation

- Consider: Switching to a more efficiently architected model (like Mistral's models)

Quality Issues with Quantized Models

Quantization reduces model size but can impact quality:

- Test different quantization methods: GPTQ, AWQ, GGUF (different trade-offs)

- Try higher precision quantization: 8-bit often preserves quality better than 4-bit

- Consider quantization-aware training if fine-tuning

Implementation Checklist

Before finalizing your model choice, complete this checklist:

- ✅ Define primary use cases and success metrics

- ✅ Inventory available hardware and budget constraints

- ✅ Review licensing requirements for your application

- ✅ Test 2-3 candidate models with your actual data/prompts

- ✅ Calculate total cost of ownership (hardware, cloud, electricity)

- ✅ Plan for scaling (will your choice still work at 10x volume?)

- ✅ Consider maintenance and update requirements

- ✅ Evaluate fine-tuning needs and available resources

- ✅ Check community support and documentation

- ✅ Create a rollback plan if the model doesn't perform as expected

Conclusion

Selecting the right open-source LLM involves balancing multiple factors: performance requirements, hardware constraints, licensing considerations, and future scalability needs. In 2025, we're fortunate to have excellent options across different categories—from the balanced capabilities of Llama 3 to the efficiency of Mistral's models and the specialization of coding-focused variants.

The key insight is that model selection isn't a one-time decision but an ongoing process. Start with the model that best matches your current constraints, but architect your system to allow for model swapping as needs evolve. Test candidates with your actual data rather than relying solely on benchmarks, and consider both immediate needs and long-term maintenance.

Remember that the "best" model is the one that delivers the required performance within your constraints—not necessarily the one with the highest benchmark scores. By following the decision frameworks and considerations outlined in this guide, you can make an informed choice that serves your application well both now and as it grows.

Visuals Produced by AI

Further Reading

Share

What's Your Reaction?

Like

1250

Like

1250

Dislike

12

Dislike

12

Love

342

Love

342

Funny

45

Funny

45

Angry

8

Angry

8

Sad

3

Sad

3

Wow

189

Wow

189

How do you handle model evaluation when benchmarks don't match your use case?

Create your own evaluation dataset! Sample real user queries, have humans rate outputs, track key metrics over time. Benchmarks are starting points, not endpoints.

What about security considerations? Some models might have been trained on malicious data or have backdoors.

Security is critical! Recommendations: 1) Use models from reputable sources (Meta, Google, Mistral, Hugging Face official), 2) Scan model weights for anomalies, 3) Implement input/output sanitization, 4) Use safety fine-tuned versions when available, 5) Monitor for prompt injection attacks. Open-source allows inspection but requires due diligence.

The troubleshooting section saved us last week! We were getting out-of-memory errors and the quantization advice fixed it.

How do these models compare for creative writing versus factual Q&A? We need both for different parts of our application.

For creative writing: larger models (70B) generally better, look for models trained on diverse creative content. For factual Q&A: retrieval-augmented generation (RAG) matters more than model size. You might use different models for different tasks.

What's the current state of model distillation? Can we get similar performance from smaller distilled models?

Model distillation has made good progress! Distilled models can achieve 80-90% of teacher model performance at 1/10th the size. Look for DistilLlama, MiniCPM, or other distilled versions. The trade-off: slightly reduced capabilities but much faster inference. Best for latency-sensitive applications where perfect quality isn't critical.

Great article! Shared it with my entire team. The comparison table format is especially useful for decision meetings.