Open-Source LLMs: Which Model Should You Choose?

This comprehensive guide helps beginners navigate the complex world of open-source large language models. We compare popular models like LLaMA 2, Mistral AI, Falcon, BLOOM, and others across key dimensions including performance, licensing, hardware requirements, and use cases. You'll learn practical selection criteria, understand the trade-offs between different model families, and get clear recommendations for common scenarios like chatbots, coding assistance, content generation, and research. The article also covers deployment considerations, cost implications, and future trends in open-source AI development.

Open-Source LLMs: Which Model Should You Choose?

The world of open-source large language models (LLMs) has exploded in recent years, offering an overwhelming array of options for developers, researchers, and businesses. From Meta's LLaMA family to Mistral AI's efficient models, and from Falcon to BLOOM, each model brings different strengths, licensing terms, and technical requirements. Choosing the right open-source LLM can feel daunting, especially for beginners navigating this rapidly evolving landscape.

This comprehensive guide will walk you through everything you need to know to make an informed decision. We'll compare the major open-source LLM families, examine key selection criteria, and provide practical recommendations based on different use cases. Whether you're building a chatbot, creating a coding assistant, generating content, or conducting research, you'll find clear guidance here.

Understanding the Open-Source LLM Ecosystem

Before diving into specific models, it's important to understand what "open-source" means in the context of large language models. Unlike proprietary models like GPT-4 or Claude, open-source LLMs provide varying levels of access to their weights, architecture, and training data. However, the licensing terms vary significantly—some are truly open for commercial use, while others have restrictions.

The open-source LLM movement began gaining momentum in 2022-2023 as organizations recognized the strategic importance of having transparent, customizable AI models. Today, the ecosystem includes models from major tech companies (Meta, Google), research organizations (Hugging Face, EleutherAI), and specialized AI startups (Mistral AI, Together Computer).

Key Selection Criteria for Choosing an LLM

When evaluating open-source LLMs, consider these eight critical factors:

- Performance & Capabilities: How well does the model perform on your specific tasks?

- Model Size & Parameters: From 7B to 70B+ parameters—what can your hardware handle?

- Licensing & Commercial Use: Can you use it commercially? What are the restrictions?

- Hardware Requirements: GPU memory, inference speed, and quantization options

- Community & Support: Size of community, documentation quality, and update frequency

- Fine-tuning Support: How easy is it to customize for your specific needs?

- Inference Cost: Both cloud hosting and self-hosting expenses

- Specialized Features: Multilingual support, coding capabilities, long context windows

Major Open-Source LLM Families Compared

Let's examine the leading open-source LLM families in detail, starting with the most influential ones in the ecosystem.

Meta's LLaMA Family

The LLaMA (Large Language Model Meta AI) family, particularly LLaMA 2, represents one of the most significant contributions to open-source AI. Released in July 2023, LLaMA 2 comes in parameter sizes of 7B, 13B, 34B, and 70B, with both base and chat-tuned versions available.

Strengths: - Strong overall performance across diverse benchmarks - Excellent documentation and widespread community adoption - Relatively permissive commercial license (with some restrictions) - Multiple quantization options available for efficient deployment

Considerations: - Requires submitting a request form for access (though usually approved) - Largest 70B model requires significant hardware (multiple high-end GPUs) - Some usage restrictions in the license agreement

Best for: General-purpose applications, research, educational projects, and commercial applications where licensing terms are acceptable.

Mistral AI Models

Mistral AI, a French startup, has made waves with its highly efficient models that often outperform larger counterparts. Their flagship model, Mistral 7B, demonstrates that parameter count isn't everything.

Strengths: - Exceptional performance per parameter (smaller but smarter) - Apache 2.0 license—truly open for commercial use - Efficient architecture requiring less computational resources - Strong multilingual capabilities, particularly in European languages

Considerations: - Smaller model family compared to LLaMA - Newer ecosystem with less extensive tooling - Limited parameter size options (primarily 7B)

Best for: Applications requiring efficiency, commercial projects needing permissive licensing, and multilingual European language support.

Falcon Models

Developed by the Technology Innovation Institute in Abu Dhabi, the Falcon family includes models ranging from 7B to 180B parameters, with Falcon 40B being particularly notable for its performance.

Strengths: - Apache 2.0 license with minimal restrictions - Strong performance on reasoning and technical tasks - Efficient training architecture (RefinedWeb dataset approach) - Good balance of performance and resource requirements

Considerations: - Smaller community compared to LLaMA - Less extensive fine-tuning ecosystem - Some quality variations across different task types

Best for: Commercial applications needing permissive licensing, technical/coding tasks, and research institutions.

BLOOM & BLOOMZ

BLOOM (BigScience Large Open-science Open-access Multilingual Language Model) represents one of the most ambitious open-science projects, created by over 1,000 researchers across 70+ countries.

Strengths: - Truly open-source with Responsible AI License - Exceptional multilingual capabilities (46 languages) - Transparent development process and documentation - 176B parameter version available for large-scale applications

Considerations: - Resource-intensive, especially the 176B version - Some performance trade-offs compared to more recent models - Less optimized for chat/conversational applications

Best for: Multilingual applications, research in language diversity, educational purposes, and projects prioritizing transparency.

Specialized and Niche Models

Beyond the major families, several specialized models excel in particular domains:

Code-Focused Models

For development and coding applications, consider these specialized models:

- Code Llama: Meta's code-specialized variant of LLaMA 2, available in 7B, 13B, and 34B parameter sizes with Python-specific and instruction-following variants

- WizardCoder: Evol-Instruct trained models that excel at code generation and explanation

- StarCoder: 15.5B parameter model trained on 80+ programming languages with permissive license

Instruction-Tuned Models

For conversational applications, these instruction-tuned models are particularly effective:

- Vicuna: Fine-tuned from LLaMA with impressive conversational abilities

- Alpaca: Stanford's instruction-following LLaMA model (though with non-commercial license)

- OpenAssistant: Community-driven conversational AI model

Performance Benchmarks: What the Numbers Really Mean

When comparing LLMs, you'll encounter various benchmarks like MMLU, HellaSwag, TruthfulQA, and GSM8K. Understanding what these measure is crucial for selecting the right model for your needs.

MMLU (Massive Multitask Language Understanding): Measures knowledge across 57 subjects including humanities, STEM, and social sciences. Higher scores indicate broader knowledge.

HellaSwag: Tests commonsense reasoning about physical situations. Important for applications requiring real-world understanding.

TruthfulQA: Evaluates truthfulness and tendency to generate misinformation. Critical for factual applications.

GSM8K: Grade school math problems testing mathematical reasoning. Important for technical and analytical tasks.

HumanEval: Coding problem-solving ability. Essential for development tools.

Most comparison tables show aggregate scores, but you should examine performance on specific benchmarks relevant to your use case. For instance, a chatbot for customer support might prioritize TruthfulQA and MMLU scores, while a coding assistant should focus on HumanEval performance. Also note that benchmark results can vary based on prompting techniques and evaluation methodologies, so consider them as guidelines rather than absolute measures.

Hardware Requirements and Deployment Considerations

One of the most practical aspects of choosing an LLM is determining what hardware you need. This depends on whether you're running inference (using the model) or training/fine-tuning.

Memory Requirements

As a rough guideline for inference:

- 7B parameter models: ~14GB FP16, ~4GB 4-bit quantized

- 13B parameter models: ~26GB FP16, ~7GB 4-bit quantized

- 34B parameter models: ~68GB FP16, ~18GB 4-bit quantized

- 70B parameter models: ~140GB FP16, ~35GB 4-bit quantized

Quantization techniques (reducing precision from 16-bit to 8-bit, 4-bit, or even lower) can dramatically reduce memory requirements with minimal quality loss for many applications.

GPU Recommendations

Based on model size and quantization:

- Consumer GPUs (RTX 3090/4090, 24GB): Can run 7B-13B models at full precision or 34B models with quantization

- Professional GPUs (A100 40/80GB): Can handle 34B-70B models with appropriate quantization

- Multi-GPU setups: Required for larger models or batch processing

Cloud Deployment Options

If self-hosting isn't feasible, consider these cloud options:

- Hugging Face Inference Endpoints: Easy deployment with pay-per-use pricing

- Replicate: Simple API for popular open-source models

- AWS SageMaker, Google Vertex AI, Azure ML: Enterprise-grade deployment with more control

- Together AI, Anyscale: Specialized LLM hosting services

Licensing Deep Dive: What You Can and Cannot Do

Licensing is one of the most confusing aspects of open-source LLMs. Here's a clear breakdown of common license types:

Apache 2.0 License

Examples: Mistral 7B, Falcon, many fine-tuned variants

Permissions: Commercial use, modification, distribution

Conditions: Provide attribution, state changes

Best for: Commercial products, startups, any project needing flexibility

LLaMA 2 Community License

Examples: LLaMA 2 models

Permissions: Commercial use with restrictions

Conditions: Cannot use to improve other LLMs, monthly active user limit for largest companies

Best for: Most commercial applications except very large-scale deployments by major tech companies

Non-Commercial/Research Licenses

Examples: Original LLaMA, Alpaca

Permissions: Research and non-commercial use only

Conditions: No commercial deployment

Best for: Academic research, personal projects, experimentation

Responsible AI Licenses

Examples: BLOOM

Permissions Conditions: Cannot use for harmful applications, must share modifications

Best for: Organizations prioritizing ethical AI, transparent projects

Fine-Tuning and Customization Options

One major advantage of open-source LLMs is the ability to fine-tune them on your specific data. Different models have varying levels of fine-tuning support:

Full Fine-Tuning

Training all model parameters on your dataset. Requires significant computational resources but yields the most customized results. Best for organizations with large, unique datasets.

Parameter-Efficient Fine-Tuning (PEFT)

Techniques like LoRA (Low-Rank Adaptation) train only a small subset of parameters. Much more efficient and often achieves similar results to full fine-tuning. Ideal for most practical applications.

Instruction Tuning

Training the model to follow instructions better. Most open-source models now provide instruction-tuned variants, but you can further customize for your specific instruction format.

Quantization-Aware Training

Fine-tuning models that will be quantized for deployment. Helps maintain quality after reducing precision.

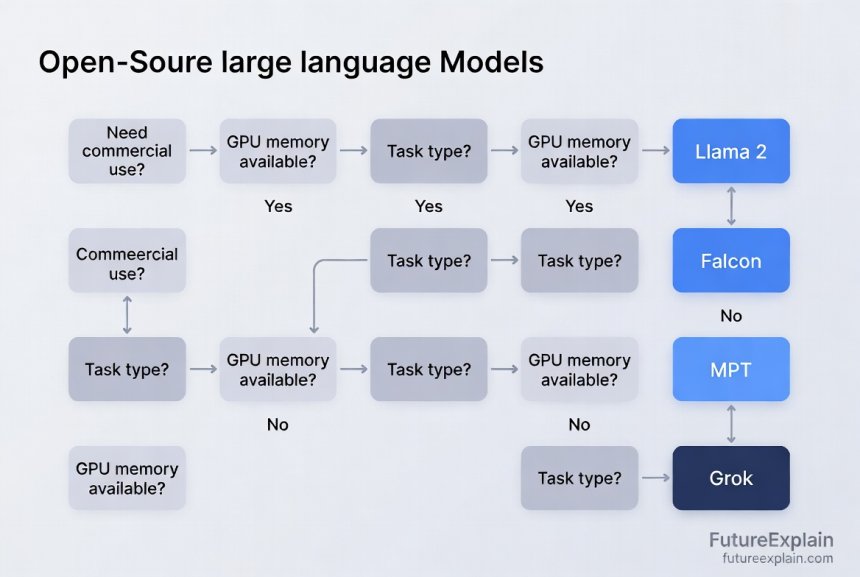

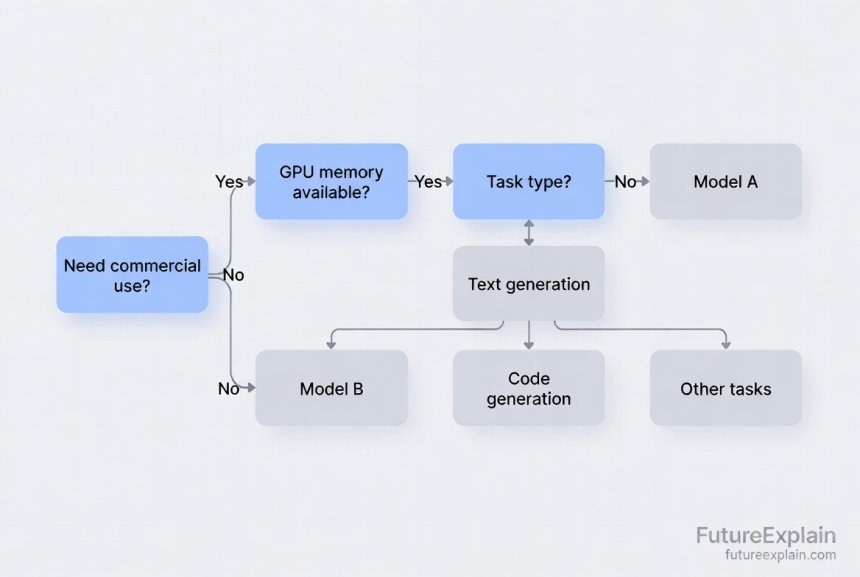

Practical Decision Framework

Based on the factors we've discussed, here's a step-by-step framework for choosing your open-source LLM:

Step 1: Define Your Use Case Clearly

- What specific tasks will the model perform?

- What quality level is acceptable?

- What latency requirements do you have?

- What's your budget for deployment?

Step 2: Assess Licensing Requirements

- Do you need commercial usage rights?

- Are you subject to any industry regulations?

- Do you plan to modify and redistribute the model?

Step 3: Evaluate Hardware Constraints

- What GPU memory is available?

- Can you use quantization techniques?

- Will you need to scale in the future?

Step 4: Check Performance on Relevant Tasks

- Find benchmark results for your specific task type

- Test candidate models with your own evaluation set

- Consider specialized models if they exist for your domain

Step 5: Consider Ecosystem Factors

- Is there good documentation and community support?

- Are there fine-tuned variants available?

- What tools exist for deployment and monitoring?

Recommended Models for Common Use Cases

Based on current (mid-2024) models and performance:

General-Purpose Chatbot

Primary Choice: LLaMA 2 13B Chat

Why: Good balance of performance and resource requirements, strong conversational abilities

Alternative: Mistral 7B Instruct (if licensing is critical)

Deployment: 4-bit quantization on 24GB GPU or cloud endpoint

Coding Assistant

Primary Choice: Code Llama 13B Python

Why: Specialized for code, strong performance on programming tasks

Alternative: WizardCoder 15B (if larger context needed)

Deployment: 8-bit quantization for best quality/speed balance

Content Generation & Writing

Primary Choice: Mistral 7B Instruct

Why: High quality writing, efficient, permissive license

Alternative: LLaMA 2 7B Chat (if more parameter-heavy tasks)

Deployment: Can run efficiently on consumer hardware

Multilingual Applications

Primary Choice: BLOOMZ 7B

Why: Best multilingual coverage, transparent development

Alternative: LLaMA 2 with multilingual fine-tuning

Deployment: Moderate resource requirements, good quantization support

Research & Experimentation

Primary Choice: Depends on research focus

Why: Choose based on specific research questions

Alternative: Consider smaller models for faster iteration

Deployment: Local development environment preferred

Future Trends in Open-Source LLMs

The open-source LLM landscape is evolving rapidly. Here are trends to watch:

Mixture of Experts (MoE) Architectures

Models like Mixtral 8x7B (from Mistral AI) use multiple expert networks that activate differently based on the input. This allows for larger effective parameter counts with lower inference costs.

Longer Context Windows

New models and techniques are pushing context windows from 4K tokens to 32K, 64K, and even 128K+ tokens, enabling processing of entire documents or long conversations.

Specialization vs. Generalization

The ecosystem is bifurcating into highly specialized models (for coding, medicine, law) and more capable general-purpose models. Choosing between them depends on your specific needs.

Efficiency Improvements

Continued advances in quantization, pruning, and distillation are making larger models accessible on more modest hardware.

Regulatory Developments

As AI regulation evolves globally, licensing and usage terms may change, particularly for models with commercial restrictions.

Getting Started: Your First Open-Source LLM Deployment

Ready to try an open-source LLM? Here's a simple path to get started:

- Start with a small model: Begin with a 7B parameter model like Mistral 7B or LLaMA 2 7B to understand the basics.

- Use a pre-configured tool: Tools like Ollama, LM Studio, or Text Generation WebUI make it easy to run models locally without deep technical knowledge.

- Experiment with quantization: Try different quantization levels (Q4, Q8) to see the trade-offs between quality and speed/memory.

- Test with your use case: Create a small evaluation set specific to your needs and test multiple models.

- Consider cloud options: If local hardware is limiting, try cloud services like Hugging Face Inference Endpoints for easier scaling.

Common Pitfalls to Avoid

When working with open-source LLMs, watch out for these common mistakes:

- Ignoring licensing terms: Always verify you can use the model for your intended purpose

- Underestimating hardware requirements: Test memory usage with your specific deployment configuration

- Overlooking inference costs: Cloud hosting costs can add up quickly at scale

- Not evaluating on your specific tasks: Benchmark scores don't always reflect real-world performance

- Neglecting safety considerations: Even open-source models can generate harmful content without proper safeguards

Further Reading and Resources

To continue your exploration of open-source LLMs:

- Open-Source LLMs: Which Model Should You Choose? (this article)

- How Does Machine Learning Work? Explained Simply

- Fine-Tuning vs. Prompting: Practical Pros and Cons

- Benchmarking LLMs: What Metrics Matter?

The world of open-source LLMs offers incredible opportunities for innovation and customization. By understanding the trade-offs between different models and following a systematic selection process, you can choose the right tool for your specific needs. Remember that this field evolves rapidly, so stay engaged with the community and be prepared to re-evaluate your choices as new models and techniques emerge.

Share

What's Your Reaction?

Like

1420

Like

1420

Dislike

15

Dislike

15

Love

320

Love

320

Funny

45

Funny

45

Angry

8

Angry

8

Sad

3

Sad

3

Wow

210

Wow

210

I appreciate the balanced tone. So many AI articles are either overly hype or excessively skeptical. This gives practical information without sensationalism.

The multilingual section is too brief in my opinion. As someone working with non-English content daily, I need more detail on which models perform best for specific languages.

Could you do a follow-up comparing the actual implementation difficulty? Some models have much better documentation and community support than others when it comes to deployment.

That's an excellent suggestion, Zariyah! Implementation difficulty and ecosystem maturity are crucial practical considerations. We're working on an article about LLM deployment pipelines that will cover exactly this - comparing the ease of implementation, documentation quality, and community support for different models and deployment approaches.

I've been using LLaMA 2 7B for a personal project and the performance has been impressive for its size. The recommendations here match my experience - it's a great starting point for most applications.

The future trends section is insightful. I'm particularly interested in the longer context windows - being able to process entire documents could revolutionize how we use LLMs for research.

This is the most comprehensive guide I've seen on this topic. I particularly appreciate how you didn't just list models but provided a framework for decision-making based on actual use cases.