Open Data & Licenses: Where to Source Training Data

Finding the right data to train agricultural AI models is a major hurdle. This guide demystifies the world of open and public datasets for beginners. You will learn where to find high-quality, legal training data from sources like NASA, the UN, and leading research labs. We explain common data licenses in simple terms, show you how to build a step-by-step sourcing strategy, and provide a curated list of key agricultural datasets for disease detection, yield prediction, and more. The article also covers when to supplement public data with custom collection and how to navigate the legal landscape to use data responsibly and effectively.

Introduction: Data – The Fuel for Agricultural AI

Imagine trying to teach someone to identify a diseased tomato plant if they had never seen one. You would need pictures, descriptions, and examples. Training an artificial intelligence model is remarkably similar. The "knowledge" you give it comes in the form of data: thousands of images of crops, terabytes of soil sensor readings, decades of weather patterns, and satellite maps of fields. Without this fuel, even the most sophisticated AI algorithm is powerless.

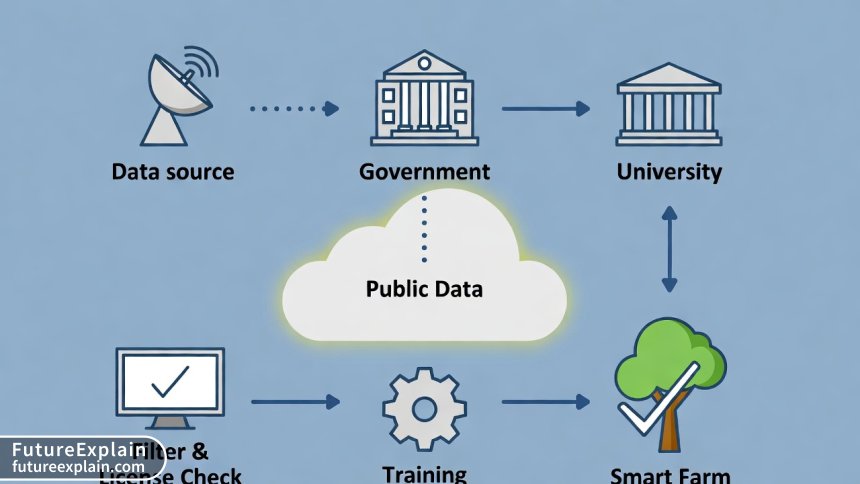

For anyone looking to build AI solutions in agriculture—whether for predicting yields, spotting pests, or optimizing irrigation—sourcing this training data is the first and often most daunting challenge. Collecting it all yourself is prohibitively expensive and time-consuming. This is where the world of open data and public datasets becomes your greatest ally. These are pre-existing collections of information, made freely available by governments, research institutions, and international organizations for anyone to use.

However, "free" doesn't mean "without rules." Every dataset comes with a license, a legal document that tells you exactly how you can and cannot use the data. Ignoring this step can lead to serious legal and ethical problems down the road. This guide is designed for beginners, farmers, agronomists, and tech enthusiasts. We will walk you through where to find the best agricultural data, how to understand the licenses that govern them, and how to build a practical, responsible strategy for sourcing your AI training data.

What is "Open Data" in Agriculture?

In the simplest terms, open data is information that is made available to the public to be freely used, reused, and redistributed. In agriculture, this spans an incredibly diverse range of information types that are crucial for building intelligent systems.

- Visual Data: This includes images and videos. Think of photos of healthy and diseased plant leaves from a dataset like PlantVillage, aerial images from drones, or satellite imagery from agencies like the European Space Agency [citation:1]. This data trains computer vision models to "see" and identify problems.

- Environmental & Sensor Data: This is numerical data about the physical world. Examples include global soil moisture levels from NASA's SMAP mission, historical weather patterns, real-time temperature from field sensors, and soil composition databases [citation:1]. This data feeds models that predict outcomes like drought risk or fertilizer needs.

- Geospatial Data: This is data tied to specific locations on Earth. The USDA's Cropland Data Layer is a prime example, showing what crop is planted on every field in the United States [citation:3]. This is essential for mapping, logistics, and regional analysis.

- Statistical & Economic Data: This includes numbers on crop production, market prices, land use, and trade. The UN's Food and Agriculture Organization (FAO) provides a massive statistical database (FAOSTAT) covering these topics for over 245 countries [citation:2]. This data helps analyze trends and make economic forecasts.

The common thread is that these datasets are created by public bodies or research communities for the public good. Using them allows you to stand on the shoulders of giants, leveraging millions of dollars worth of publicly funded data collection for your own projects.

Key Sources for Agricultural Training Data

Knowing where to look is half the battle. High-quality data is scattered across platforms maintained by different types of organizations. Here is a roadmap to the most important sources.

1. International & Governmental Organizations (The Gold Standard)

These sources are typically the most authoritative and well-documented. Their data is collected using standardized methods, making it highly reliable.

- Food and Agriculture Organization of the United Nations (FAO): The FAO is a primary global source. Its FAOSTAT portal offers free access to food and agriculture statistics for over 245 countries, covering production, trade, and land use from 1961 onward [citation:2]. Furthermore, the FAO encourages the reuse of its data and databases for research and scientific purposes, providing clear terms of use [citation:6].

- U.S. Department of Agriculture (USDA): The USDA's National Agricultural Statistics Service (NASS) is a powerhouse of U.S.-focused data. Its Quick Stats database is a comprehensive tool for finding agricultural statistics for every state and county in the United States [citation:3]. They also provide sophisticated geospatial data services like VegScape for monitoring crop conditions [citation:3].

- Space Agencies (NASA & ESA): For a global, bird's-eye view, satellite data is indispensable. NASA provides soil moisture data via its Soil Moisture Active Passive (SMAP) mission [citation:1]. The European Space Agency's Sentinel-2 satellite provides free, high-resolution multispectral imagery of the entire planet, perfect for monitoring crop health and land use [citation:1].

2. Academic & Research Institutions

Universities and research labs are on the cutting edge and often publish the datasets they create to advance science. These are fantastic for specific, novel problems.

- Curated Repositories: Platforms like Kaggle and Zenodo host thousands of datasets uploaded by researchers. For example, the "Crop Yield Prediction Dataset" on Kaggle combines historical yields, weather, and soil data [citation:1].

- Project-Specific Hubs: Many major research projects release their data. The Agriculture-Vision Dataset, from a university consortium, contains over 94,000 annotated aerial field images for anomaly detection [citation:1]. The CGIAR Big Data Platform offers open datasets focused on agriculture in developing countries [citation:1].

- Community Lists: Passionate individuals often create curated lists. The GitHub repository "ricber/digital-agriculture-datasets" is an excellent example, gathering publicly available datasets for AI and robotics in agriculture, organized by task [citation:10].

3. Private Sector & Data Marketplaces

While this article focuses on free and open data, it's important to be aware of the broader ecosystem. Sometimes, the perfect dataset isn't freely available.

- Data Annotation Services: Companies like Keymakr specialize in creating custom, labeled training data for agricultural AI, handling tasks from ripeness monitoring to disease detection [citation:9]. This is a paid service for when you need very specific, high-quality annotations.

- Emerging Licensing Markets: As seen in recent lawsuits (like The New York Times v. OpenAI), the legal landscape for using data is evolving [citation:8]. This is driving the creation of formal data licensing markets, where companies can license premium datasets (e.g., specialized image libraries) for training, often under subscription models [citation:8].

Understanding Data Licenses: The Essential Rules of the Road

You've found a perfect dataset. Before you download it, you must check its license. A license is a set of permissions and restrictions that the data owner grants you. Ignoring it can invalidate your project or lead to legal action.

Common Open Licenses (And What They Mean)

Creative Commons (CC) licenses are the most common standard for open data. Here is what the key versions mean for your AI project:

- CC BY (Attribution): This is a very permissive license. You can use, modify, and distribute the data, even commercially, as long as you give credit to the original creator. The FAO uses a variant (CC BY-NC-SA 3.0 IGO) for its FAOLEX database, requiring attribution, non-commercial use, and sharing adaptations under the same terms [citation:6].

- CC BY-SA (Attribution-ShareAlike): You can remix and build upon the data, but any new work you create must be licensed under the identical terms. This "copyleft" clause ensures the data ecosystem stays open.

- CC BY-NC (Attribution-NonCommercial): You can use and adapt the data, but not for commercial purposes. This is common for academic datasets.

- Public Domain (CC0): The creator has waived all rights. You can use the data for any purpose with no requirements. Data from many U.S. government agencies (like much of the USDA's data) is effectively in the public domain.

- Custom/Institutional Licenses: Always read the specific "Terms of Use" on a website. The FAO, for instance, has its own Statistical Database Terms of Use that accompany its open data [citation:6].

A Critical Gap: Unlicensed or Ambiguous Data

A major, under-discussed problem in the open-source world is datasets with no clear license. As highlighted in a GitHub issue for an AI project, the lack of a license file in a training data repository creates significant uncertainty for developers who want to build upon that work [citation:4]. If there is no license, you do not have legal permission to use the data. Always contact the maintainer to request clarification or a license before proceeding.

The Legal Landscape: Lawsuits Shaping Data Use

The rules are being written in real-time. Several high-profile lawsuits are defining how copyrighted material can be used to train AI. While these often involve text and images from publishers, the principles affect all data.

- In Kramer v. Meta (2025), a judge ruled that using copyrighted books for training could be considered "fair use" in that specific case [citation:8].

- However, in The New York Times v. OpenAI, claims proceeded because the AI was allegedly reproducing articles verbatim [citation:8].

- The key takeaway is that transformation is key. Using data to train a model that creates new insights is more defensible than simply repackaging the original data. Sourcing data from legitimate, licensed providers is the safest path [citation:8].

A Practical Guide: Your Data Sourcing Strategy

With the sources and rules in mind, let's build a step-by-step action plan for sourcing your training data responsibly and effectively.

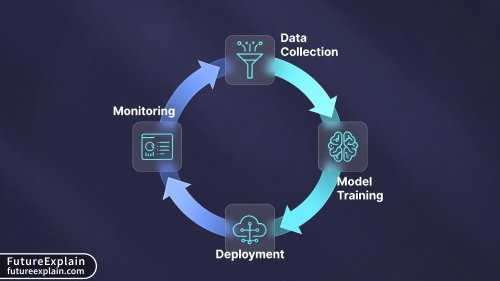

Phase 1: Define and Discover

- Pinpoint Your Need: Be specific. Do you need 10,000 images of corn blight? Hourly soil temperature data from the Midwest? Global wheat price history?

- Search Strategically: Start with the authoritative sources listed above. Use the curated list on the "digital-agriculture-datasets" GitHub repo as a jumping-off point [citation:10]. Search Kaggle and Zenodo for your specific crop or problem.

- Document Your Sources: Create a spreadsheet. List the dataset name, a link, the provider, the license (e.g., CC BY 4.0), and any specific requirements (like attribution text).

Phase 2: Evaluate and Comply

- License Check: For every dataset, find the license. It's usually in a file named `LICENSE`, `README`, or on the download page. Understand the terms completely.

- Quality Assessment: Look at the data. Are the images clear and well-lit? Is the sensor data complete, or are there gaps? Read any accompanying papers or documentation to understand how the data was collected.

- Plan for Compliance: If the license is CC BY, decide how you will provide attribution in your project documentation or application interface.

Phase 3: Prepare and Process

Open data is rarely "ready-to-train." It requires cleaning and preparation, which is a normal part of the workflow. As noted in guides on using AgTech data, public datasets can be messy and were often created for a different original purpose, so they need to be adapted to your specific training goals [citation:5].

- Clean the Data: This involves handling missing values, removing duplicates, correcting errors, and formatting everything consistently [citation:5].

- Annotate/Label: For image data, this might mean drawing bounding boxes around weeds or classifying leaves as healthy/diseased. While some datasets come pre-labeled (like PlantVillage), others may require this labor-intensive step. This is where a platform or service can be valuable [citation:9].

- Reformat: Convert data into the format your machine learning framework expects (e.g., specific image sizes, CSV layouts).

When Open Data Isn't Enough: The Case for Custom Data

Public datasets are a phenomenal starting point, but they have limits. They may not cover your specific local crop variety, the unique weed species in your region, or the exact sensor setup on your farm. As one analysis puts it, to achieve true precision agriculture, you will eventually need to create specific datasets tailored to your unique use case [citation:5].

Think of open data as the foundation. You can build a good, general-purpose model with it. To build a great, specialized model that operates with high accuracy in your specific context, you will likely need to supplement it with your own custom data. This involves collecting images from your own fields, logging data from your own sensors, and then annotating that new data. This combination of public and private data is the most powerful strategy [citation:5].

Conclusion: Building on a Foundation of Open Knowledge

The journey to building effective agricultural AI begins with data. The global community has already provided a rich foundation through countless open datasets from trusted scientific and governmental sources. Your role is to become a savvy navigator of this landscape: knowing where to find this data, understanding the licenses that enable its use, and skillfully preparing it for your unique goals.

Start small. Pick one problem, find one relevant dataset (like the PlantVillage dataset for disease detection), and work through the process of downloading, checking the license, and exploring the data. This hands-on experience is invaluable. By responsibly leveraging open data, you are not just building a model—you are participating in a global movement to apply shared knowledge to solve some of agriculture's most pressing challenges.

Visuals Produced by AI

Further Reading

Share

What's Your Reaction?

Like

1420

Like

1420

Dislike

23

Dislike

23

Love

310

Love

310

Funny

45

Funny

45

Angry

12

Angry

12

Sad

8

Sad

8

Wow

195

Wow

195

As a software developer new to agriculture, the categorization of data types (visual, environmental, geospatial) was incredibly clarifying. It helped me structure my search. Thank you for a practical, no-hype resource.

I work for a non-profit promoting sustainable farming. We want to create an open-source tool for smallholders. The strategy of combining big public data (like weather) with their own local field data is exactly the model we're exploring. This article provides the perfect framework to explain it to our team and funders.

From Jordan here. The focus on global sources like ESA Sentinel is helpful for those of us outside the US and Europe. Do you know of similar open satellite data initiatives focused on arid region agriculture or desertification?

Great question, Fatima. Sentinel data itself is global and excellent for monitoring vegetation health in any region. For arid regions specifically, I'd recommend looking at NASA's MODIS and VIIRS sensors for broader land cover trends. Also, the International Center for Agricultural Research in the Dry Areas (ICARDA), part of CGIAR, likely has curated datasets relevant to dryland farming. Their data portal would be an excellent next stop.

The link to the GitHub repo "digital-agriculture-datasets" is a treasure trove. I've already found three relevant datasets I didn't know about. This article should be required reading for anyone entering the AgriML space.

Great article, but I think it undersells the effort of "cleaning and preparation." We downloaded a satellite imagery dataset for a project, and 30% of the images were useless due to cloud cover. The "Phase 3" section is real—it can take longer than the actual model building.

You are 100% right, Thomas. The "data wrangling" phase is almost always the most time-consuming and unexpected part for newcomers. Your point about cloud cover is a perfect, real-world example. This is why checking a small sample of any dataset first is a crucial step. Thanks for adding that vital practical note.

I'm a data librarian at a university. This is one of the clearest public-facing explanations of data provenance and licensing I've seen. May I have permission to adapt part of the "Common Open Licenses" section into a handout for our researchers? (With full attribution, of course!).

Absolutely, Kendall! Please feel free to use and adapt it. That's exactly the spirit of open knowledge we want to promote. I'm delighted to hear it will be used to educate researchers.